blocks one by one. The advantages of block partition rest on two points. First, a large task for a whole image is reduced to many smaller pieces, which are processed simultaneously on multiple CPU cores by parallel computing. Second, it concerns temporary files: because the data volume of a block is small, the intermediate processing results for a block can be held in random-access memory (RAM) rather than in a temporary file, even when complicated calculations are required to obtain them. Thus, no temporary file is needed.
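To put the claim that a block's data volume is small into numbers, the following sketch compares the memory needed for a single block with that for a whole image. The image size (30 000 × 30 000 pixels, 4 bands) and the float32 type for intermediate results are hypothetical choices, not values from the paper, and `block_nbytes` is an illustrative helper.

```python
import numpy as np


def block_nbytes(rows: int, cols: int, bands: int, dtype=np.float32) -> int:
    """Bytes needed to hold `bands` layers of a rows x cols block in RAM."""
    return rows * cols * bands * np.dtype(dtype).itemsize


# Hypothetical 4-band image of 30 000 x 30 000 pixels with float32 intermediates.
whole_image = block_nbytes(30_000, 30_000, 4)   # 14_400_000_000 bytes (14.4 GB)
tile_64 = block_nbytes(64, 64, 4)               # 65_536 bytes (64 KiB)
tile_512x128 = block_nbytes(512, 128, 4)        # 1_048_576 bytes (1 MiB)
```

Under these assumptions, even a 512 × 128 block is roughly four orders of magnitude smaller than the whole image, which is why its intermediate results can stay in RAM.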

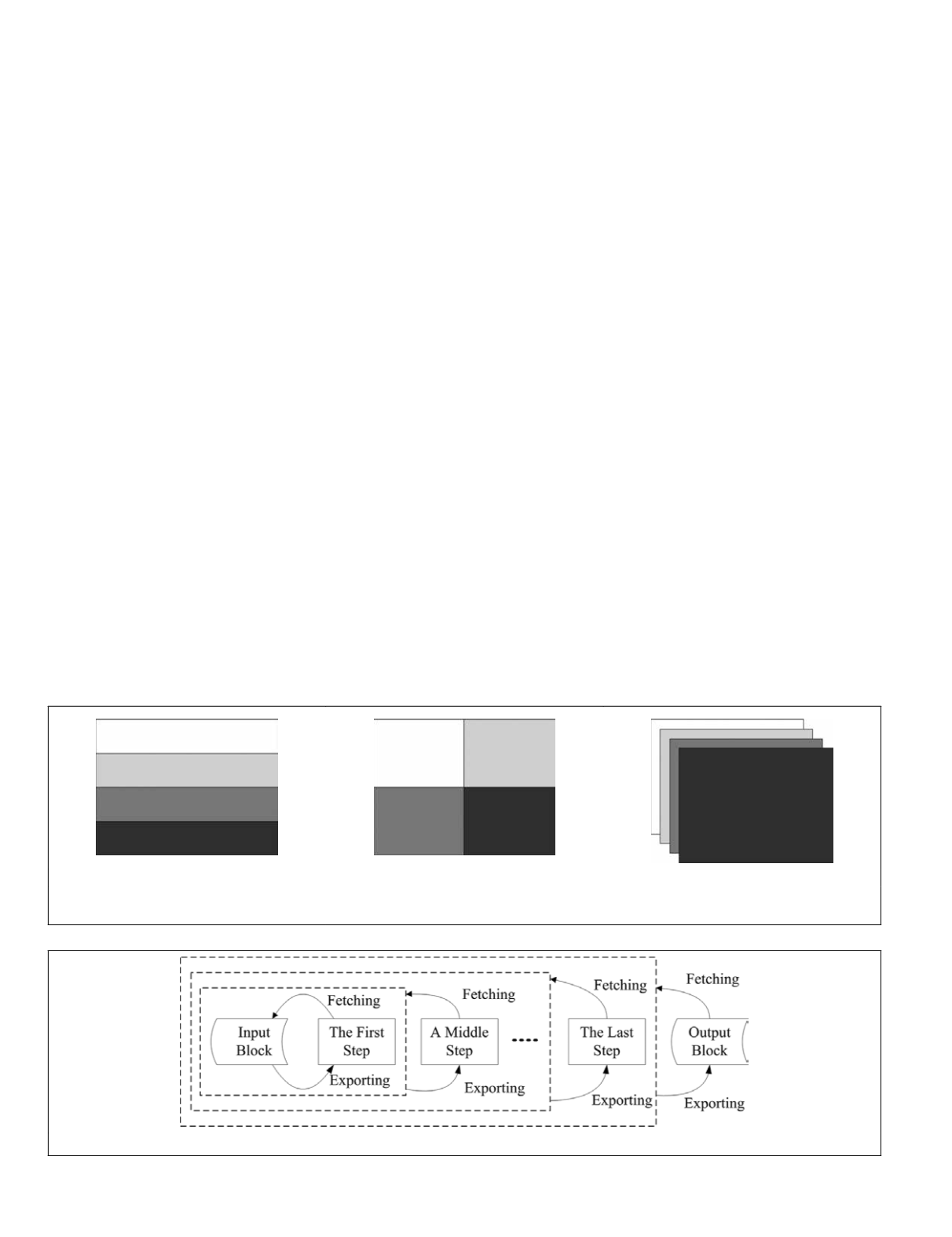

The strategies of block partition are shown in Figure 3. The entire coverage within the output bounds can be divided into strips (Figure 3a), tiles (Figure 3b), or bands (Figure 3c), and one of these three strategies is chosen according to the requirements. In practice, the tile is the form most commonly used for data decomposition in parallel computing. In this paper, the tested algorithms adopt different block partition configurations. The de-correlation stretch employs two strategies in its different steps, i.e., tile-based (size: 64 × 64) and band-based. The other algorithms adopt the tile-based strategy, but with different tile sizes: 64 × 64 for filtering, 128 × 128 for DEM extraction, and 512 × 128 for geometric correction, image fusion, and image mosaicking. These configurations are based on the characteristics of each algorithm, the block size of the file used to store the final results, and the parallel performance we achieved; they are relatively optimal choices. The rationale for choosing a partition strategy is worth discussing but is beyond the scope of this paper.
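A minimal sketch of generating block windows for the tile-based and strip-based strategies, using the tile sizes quoted above. Reading "512 × 128" as (rows, cols), the `(row_offset, col_offset, rows, cols)` window layout, and the helper names are assumptions; band-based decomposition (Figure 3c) is not modeled here.

```python
from typing import Iterator, Tuple

Window = Tuple[int, int, int, int]  # (row_offset, col_offset, rows, cols)

# Tile sizes quoted in the text; treating them as (rows, cols) is an assumption.
TILE_SIZES = {
    "filtering": (64, 64),
    "decorrelation_stretch": (64, 64),   # tile-based steps only
    "dem_extraction": (128, 128),
    "geometric_correction": (512, 128),
    "image_fusion": (512, 128),
    "image_mosaicking": (512, 128),
}


def tile_windows(height: int, width: int, tile_rows: int, tile_cols: int) -> Iterator[Window]:
    """Tile-based decomposition: cover the output bounds with a regular grid.

    Tiles on the right and bottom edges are clipped to the image size.
    """
    for r in range(0, height, tile_rows):
        for c in range(0, width, tile_cols):
            yield (r, c, min(tile_rows, height - r), min(tile_cols, width - c))


def strip_windows(height: int, width: int, strip_rows: int) -> Iterator[Window]:
    """Strip-based decomposition: each strip is a tile spanning the full width."""
    return tile_windows(height, width, strip_rows, width)
```

For example, `tile_windows(1000, 700, *TILE_SIZES["filtering"])` yields 16 × 11 = 176 windows whose areas add up to exactly 1000 × 700 pixels.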

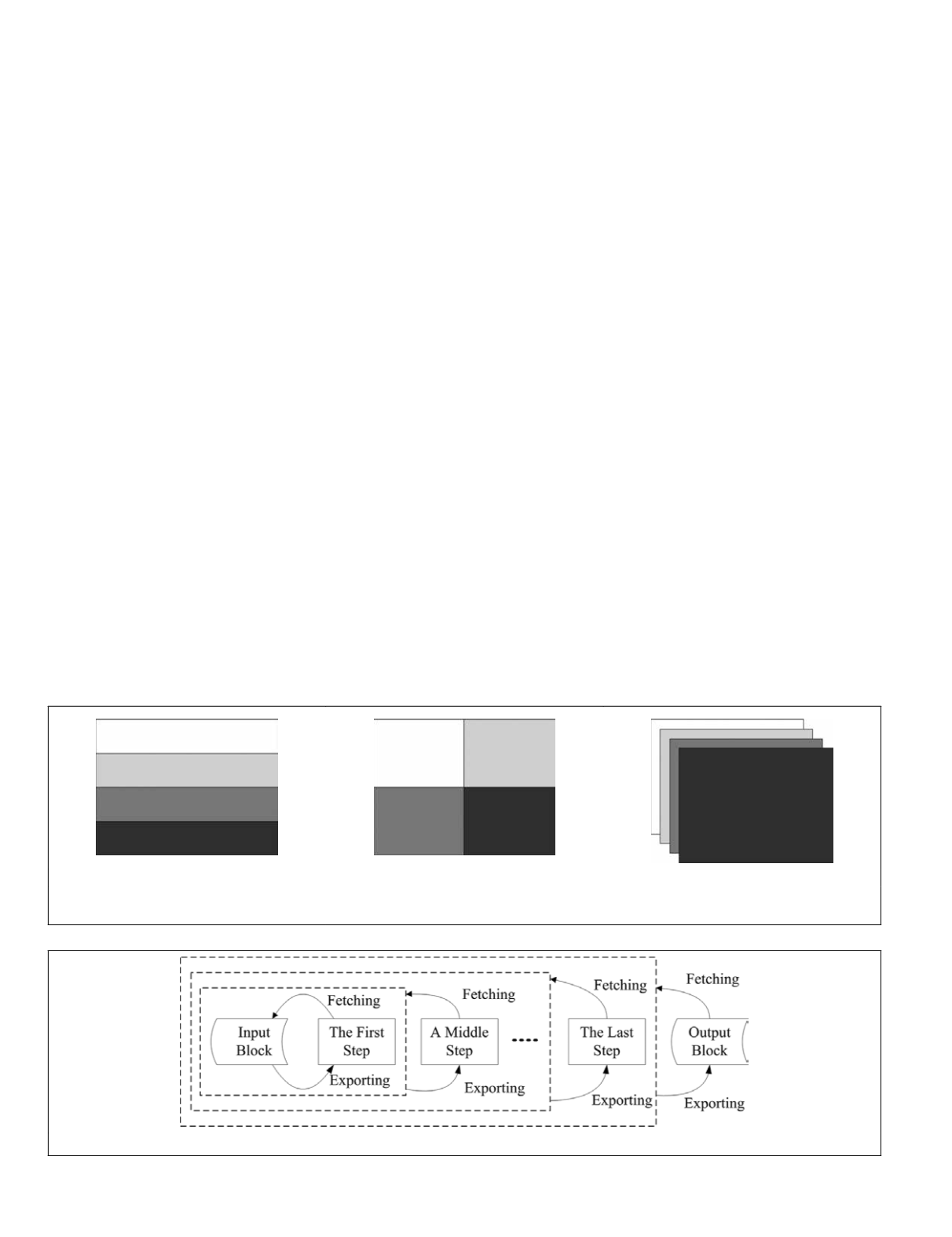

A Recursive Procedure to Generate Final Results

If several steps are required to generate the final results, the recursive procedure for fetching and processing data shown in Figure 4 is used, in which each step is handled as in one of the cases described below. Geometric correction, which comprises two steps, namely geometric transformation and interpolation, is an example. The strategy is similar to that described in the literature (Christophe et al., 2011).
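The recursive fetching of Figure 4 can be illustrated with a minimal pull-based sketch, assuming that every step can state which input window it needs for a requested output block. The classes `Source`, `MeanFilter3x3`, `Gain` and the function `export_by_tiles` are hypothetical: a 3 × 3 mean filter stands in for interpolation (a neighborhood step), a constant gain stands in for a per-pixel step such as geometric transformation, and pixels outside the image are assumed to be filled by edge replication. This is not the authors' implementation.

```python
import numpy as np
from typing import Tuple

Region = Tuple[int, int, int, int]  # (row0, col0, rows, cols)


class Source:
    """Leaf step: serves any requested window of an in-memory image.

    Requests that extend past the image edge are filled by edge replication,
    so neighborhood steps downstream never special-case the borders.
    """

    def __init__(self, image: np.ndarray):
        self.image = image

    def fetch(self, region: Region) -> np.ndarray:
        r0, c0, h, w = region
        rows = np.clip(np.arange(r0, r0 + h), 0, self.image.shape[0] - 1)
        cols = np.clip(np.arange(c0, c0 + w), 0, self.image.shape[1] - 1)
        return self.image[np.ix_(rows, cols)]


class MeanFilter3x3:
    """Neighborhood step (stand-in for interpolation): needs a 1-pixel halo."""

    def __init__(self, upstream):
        self.upstream = upstream

    def fetch(self, region: Region) -> np.ndarray:
        r0, c0, h, w = region
        # Recursively request the enlarged input window from the previous step.
        src = self.upstream.fetch((r0 - 1, c0 - 1, h + 2, w + 2)).astype(np.float64)
        out = np.zeros((h, w))
        for dr in range(3):
            for dc in range(3):
                out += src[dr:dr + h, dc:dc + w]
        return out / 9.0


class Gain:
    """Point step: output pixel (i, j) depends only on input pixel (i, j)."""

    def __init__(self, upstream, factor: float):
        self.upstream = upstream
        self.factor = factor

    def fetch(self, region: Region) -> np.ndarray:
        return self.upstream.fetch(region) * self.factor


def export_by_tiles(last_step, height: int, width: int, tile: int = 64) -> np.ndarray:
    """Drive the chain from its final step, one output tile at a time."""
    out = np.empty((height, width))
    for r in range(0, height, tile):
        for c in range(0, width, tile):
            h, w = min(tile, height - r), min(tile, width - c)
            out[r:r + h, c:c + w] = last_step.fetch((r, c, h, w))
    return out
```

Because each step asks its upstream neighbor only for the window it needs, the tiled export gives the same array as processing the whole image at once, while each step materializes only a slightly enlarged window per tile. In a real system `Source` would read that window from disk rather than from an in-memory array.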

Given the characteristics of typical algorithms in remote-sensing-based mapping, computing the output at pixel location (i, j) may require not only the intensity value of the input pixel at (i, j) but also, in certain cases, the neighboring pixels of (i, j) or global statistical information. In general, three cases can be distinguished according to the data required to process a block completely (Nicolescu and Jonker, 2002). The case in which only the corresponding pixels are used and the case in which both the corresponding and the neighboring pixels are used are shown in Figure 5a and Figure 5b, respectively. For instance, the former includes geometric transformation, and the latter includes interpolation, convolution, etc. In the third case, in which the corresponding pixels and global statistical information are used, the global information is generated at the beginning of the procedure. Principal component analysis (PCA) projection and de-correlation stretch are examples; here the global information includes the mean and standard deviation of each band, the covariance matrix, etc.
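The third case can be sketched as a two-pass procedure: a first pass accumulates per-band sums block by block to obtain the global mean, standard deviation, and covariance, and a second pass projects each block independently onto the principal components. Using population (divide-by-N) statistics, row strips as blocks, and the function names are assumptions of this sketch; only `np.linalg.eigh` and the other NumPy calls are real library functions.

```python
import numpy as np


def global_band_stats(image: np.ndarray, strip_rows: int):
    """First pass: per-band mean, standard deviation and covariance.

    `image` has shape (bands, rows, cols). Sums are accumulated strip by strip,
    so only one strip needs to be in memory at a time.
    """
    bands = image.shape[0]
    n = 0
    s = np.zeros(bands)
    sxx = np.zeros((bands, bands))
    for r0 in range(0, image.shape[1], strip_rows):
        x = image[:, r0:r0 + strip_rows, :].reshape(bands, -1).astype(np.float64)
        n += x.shape[1]
        s += x.sum(axis=1)
        sxx += x @ x.T
    mean = s / n
    cov = sxx / n - np.outer(mean, mean)      # population covariance
    return mean, np.sqrt(np.diag(cov)), cov


def principal_axes(cov: np.ndarray) -> np.ndarray:
    """Eigenvectors of the covariance matrix as columns, PC1 first."""
    vals, vecs = np.linalg.eigh(cov)          # eigenvalues in ascending order
    return vecs[:, np.argsort(vals)[::-1]]


def pca_project_block(block: np.ndarray, mean: np.ndarray, axes: np.ndarray) -> np.ndarray:
    """Second pass: project one (bands, rows, cols) block with global statistics."""
    bands = block.shape[0]
    x = block.reshape(bands, -1) - mean[:, None]
    return (axes.T @ x).reshape(block.shape)
```

Since the statistics are fixed before the second pass, the projected blocks can be computed in any order, or in parallel, and concatenated without seams. For very large images, a numerically more stable merging of per-block statistics (e.g., Welford-style updates) may be preferable to raw sums of squares.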

The Parallel Processing Mechanism

Parallel processing is the use of multiple computers or CPU cores to reduce the time needed to solve a computationally heavy problem; it rests on the principle that large problems can often be divided into smaller ones that are then solved concurrently (Han et al., 2009). When a procedure is parallelized, high parallelism is a primary goal, and it is usually evaluated by the speedup.
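The speedup is not defined explicitly here; the conventional definition, assumed below, compares the run time on a single core with the run time on $p$ cores, and the parallel efficiency normalizes it by the number of cores:

$$
S_p = \frac{T_1}{T_p}, \qquad E_p = \frac{S_p}{p},
$$

where $T_1$ is the execution time on one core and $T_p$ the execution time on $p$ cores. With hypothetical timings $T_1 = 120$ s and $T_8 = 20$ s, $S_8 = 6$ and $E_8 = 0.75$; ideal (linear) speedup would give $S_p = p$ and $E_p = 1$.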

Because the final results of these tested algorithms can be generated and saved in the form of blocks, the parallel computing technique makes it possible for multiple blocks to be processed concurrently on multiple CPU cores.
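A minimal sketch of processing blocks concurrently, using Python's `concurrent.futures` rather than MPI: the image is split into row strips with `np.array_split`, a per-pixel function is mapped over the strips, and the results are reassembled in order. The `stretch` function (a linear 12-bit to 8-bit stretch) and `process_in_parallel` are hypothetical examples, not algorithms from the paper.

```python
from concurrent.futures import Executor, ThreadPoolExecutor

import numpy as np


def stretch(block: np.ndarray) -> np.ndarray:
    """Hypothetical point operation: linear 12-bit to 8-bit stretch."""
    return np.clip(block.astype(np.float32) * (255.0 / 4095.0), 0, 255).astype(np.uint8)


def process_in_parallel(image: np.ndarray, n_blocks: int, func, executor: Executor) -> np.ndarray:
    """Split `image` into row strips, process them concurrently, reassemble."""
    blocks = np.array_split(image, n_blocks, axis=0)
    # Executor.map yields results in submission order, so strips stay in place.
    return np.concatenate(list(executor.map(func, blocks)), axis=0)


if __name__ == "__main__":
    img = np.random.default_rng(0).integers(0, 4096, size=(1024, 1024), dtype=np.uint16)
    with ThreadPoolExecutor(max_workers=4) as pool:
        out = process_in_parallel(img, 16, stretch, pool)
    print(out.shape, out.dtype)
```

The executor is passed in so that the same code runs with threads or processes; to run the blocks in separate processes on different CPU cores, pass a `concurrent.futures.ProcessPoolExecutor()` created under an `if __name__ == "__main__":` guard.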

In this paper, the message passing interface (MPI) is used as the parallel environment. MPI is a message-passing application programmer interface, packaged together with protocol and semantic specifications for how its features must behave in any implementation (Gropp et al., 1996). MPI offers high performance, scalability, and portability, and it is the most common library used in high performance computing.
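How blocks are distributed among MPI processes is not described at this point. One simple possibility, assumed here and not necessarily the authors' scheme, is a static round-robin assignment in which the process of rank r handles blocks r, r + size, r + 2·size, and so on. The sketch uses the real mpi4py calls `MPI.COMM_WORLD`, `Get_rank`, `Get_size`, and `gather`, falls back to a single serial process when mpi4py is not installed, and uses a hypothetical `process_block` in place of the actual fetch-process-export work.

```python
def blocks_for_rank(n_blocks: int, rank: int, size: int) -> list:
    """Static round-robin: rank r takes blocks r, r + size, r + 2*size, ..."""
    return list(range(rank, n_blocks, size))


def process_block(index: int) -> float:
    """Hypothetical stand-in for fetching, processing and exporting one block."""
    return float(index)


def run(n_blocks: int = 16) -> None:
    try:
        from mpi4py import MPI  # optional; without it the code runs serially
        comm = MPI.COMM_WORLD
        rank, size = comm.Get_rank(), comm.Get_size()
    except ImportError:
        comm, rank, size = None, 0, 1

    local = {b: process_block(b) for b in blocks_for_rank(n_blocks, rank, size)}
    # Collect the per-rank results on rank 0 (mpi4py's object-based gather).
    parts = comm.gather(local, root=0) if comm is not None else [local]
    if rank == 0:
        merged = {k: v for part in parts for k, v in part.items()}
        print(f"{len(merged)} blocks processed by {size} process(es)")


if __name__ == "__main__":
    run()
```

Launched with, for example, `mpiexec -n 4 python blocks_mpi.py` (the file name is hypothetical), each of the four processes handles every fourth block; gathering small per-block values on rank 0 is only for illustration.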

In a multi-core computer, one CPU core (or virtual core using hyper-threading technology widely used by an Intel® CPU)

Figure 3. Three types of strategies of block partition: (a) Strip-based decomposition, (b) Tile-based decomposition, and (c) Band-based decomposition.

Figure 4. The recursive procedure for fetching and exporting.

376

May 2015

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING