used to place both the rope and the proposed front landing gear object together in a single image from the same perspective. CAD models of both the front and rear landing gears were created using measurements taken from the front and rear landing gears of an extant Lockheed Electra 10E, construction number 1042, and information from historical documents. These CAD models were subsequently overlaid on historical photographs of the subject Lockheed Electra Model 10E to verify visually that a good fit was obtained. A CAD model overlay was then performed on mosaicked still-frame images taken from the ROV video of each proposed landing gear. Both mosaicked images contained a rope, and using the overlaid CAD models and the top-down view of the flat seabed as references, the diameter of the rope was measured within the CAD software SolidWorks. Separately, a piece of rope was identified in the ROV video when a mechanical claw on the ROV grasped the rope. The dimensions of the mechanical claw were measured and then used to estimate the diameter of the rope. Finally, the rope diameter was compared across all three sources of information (the proposed front landing gear, the proposed rear landing gear, and the mechanical claw) to assess whether the three measurements were consistent.
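The scaling step behind the claw-based estimate can be illustrated with a minimal sketch. It assumes that the rope and the reference object (the claw) lie at approximately the same distance from the camera, so that a single millimeters-per-pixel factor applies to both. The function names, the tolerance, and all numeric values in the usage note are hypothetical and are not measurements from this study.

```python
def estimate_diameter_mm(reference_mm, reference_px, target_px):
    """Scale a pixel measurement using a reference object of known size.

    Assumes the reference and the target are at about the same distance
    from the camera (hypothetical simplification).
    """
    if reference_px <= 0:
        raise ValueError("reference_px must be positive")
    mm_per_pixel = reference_mm / reference_px
    return target_px * mm_per_pixel


def is_consistent(estimates_mm, tolerance_mm):
    """Return True if all estimates fall within tolerance_mm of each other."""
    return max(estimates_mm) - min(estimates_mm) <= tolerance_mm
```

For example, with a hypothetical reference width of 100 mm spanning 200 pixels, a rope spanning 50 pixels would be estimated at 25 mm. The three independent estimates could then be passed to `is_consistent` with a tolerance chosen by the analyst.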

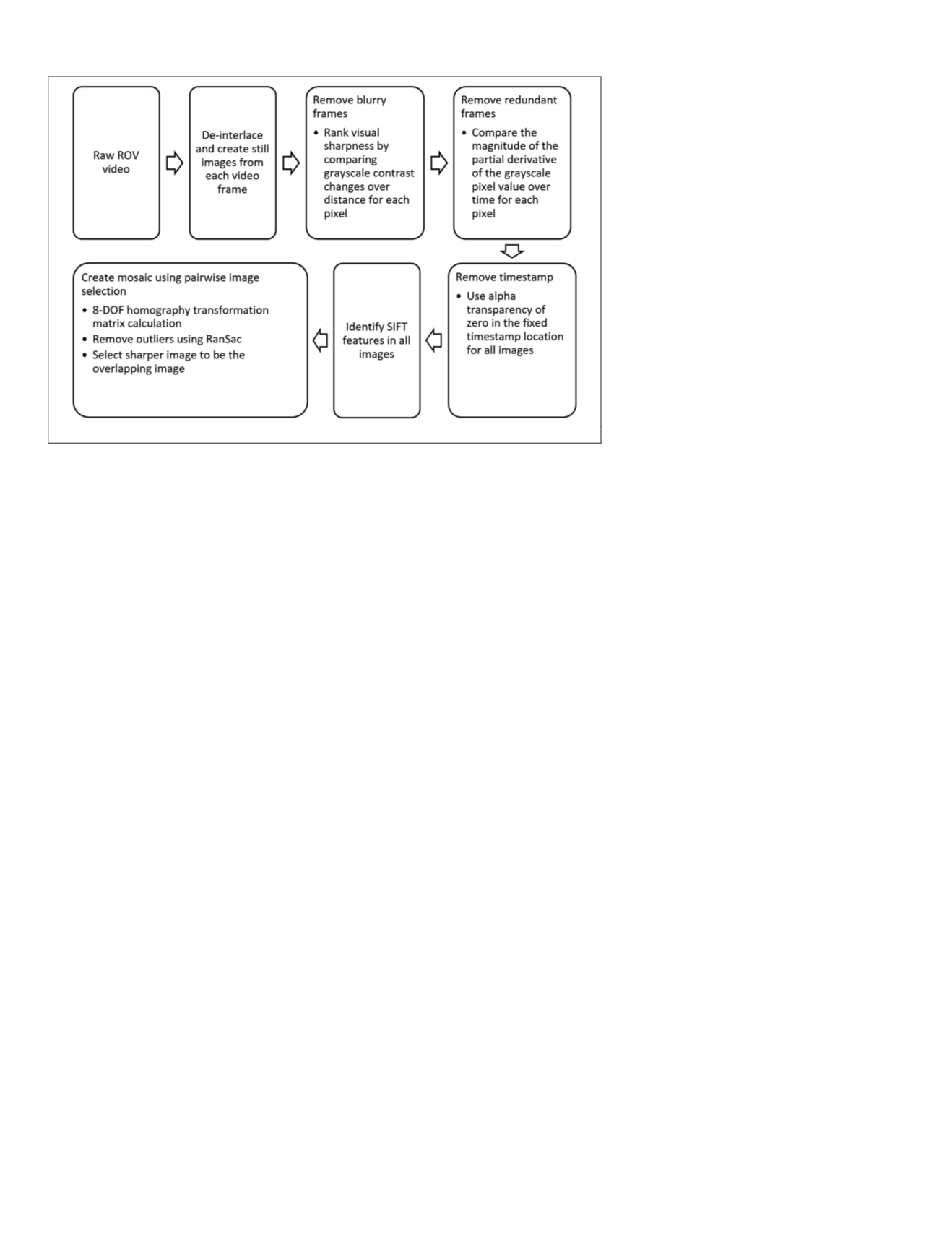

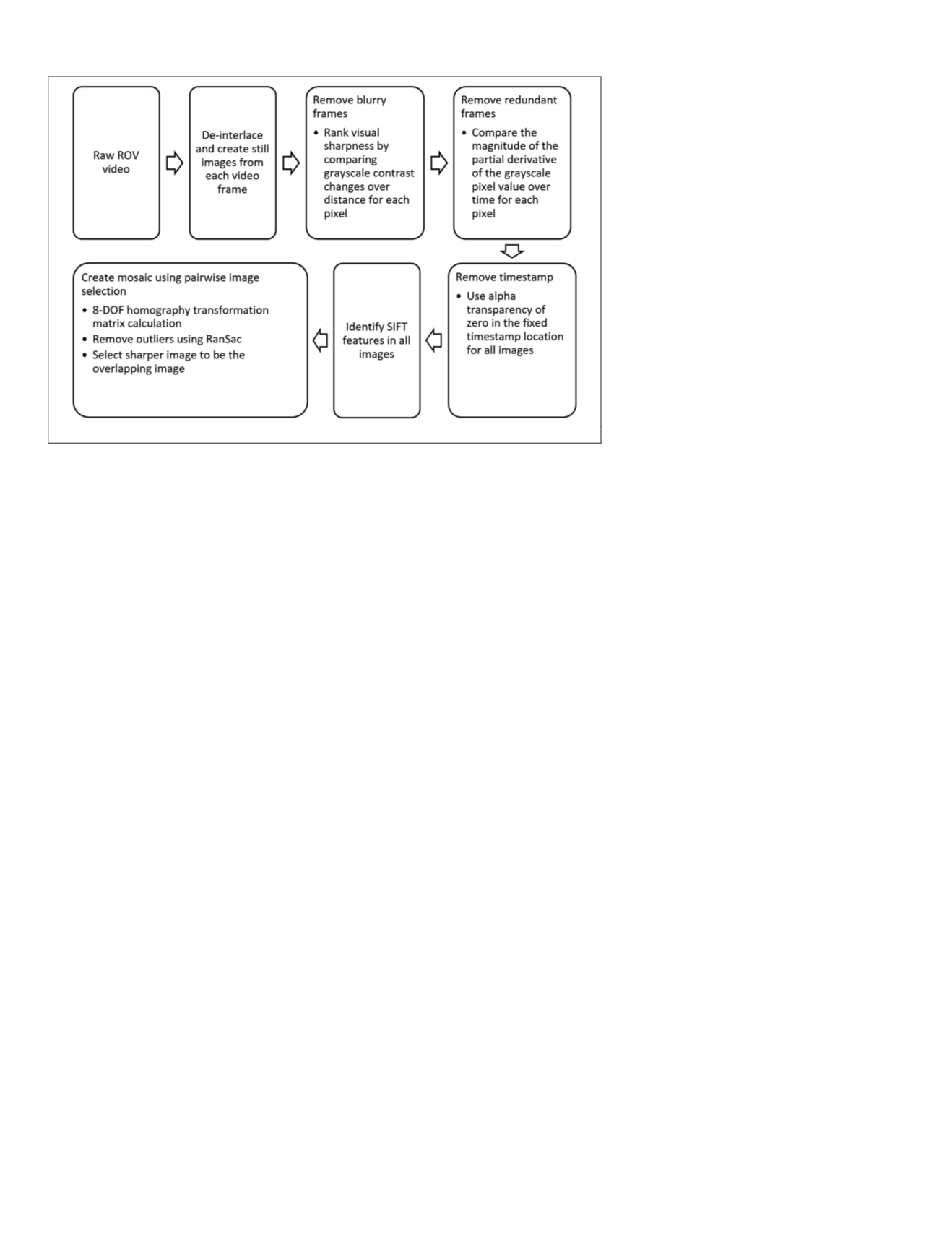

Figure 4 illustrates the overall video imaging and mosaicking techniques used to create mosaics containing non-blurry images from the video with the timestamp removed. These steps are described in more detail in the following sections.

Adaptive Sampling of the Video

The video that was the basis of the analysis was recorded using a Sony FCBH11 high-definition color block camera in the MPEG-4 codec using the BT.709 RGB color space, with a resolution of 1,440 × 1,080, 29.97 frames per second, and a video bit rate of 55.7 megabits per second. The camera is specified to have a focal length between 5.1 mm and 51.0 mm. Although the focal length at the time of capture is unknown, it was observed to be constant during the video. The camera path in the video was erratic, which is not uncommon in underwater videography due to the ebb and flow of the water and the motion of the ROV relative to a stationary scene. As a result, the majority of the video is blurry or points at regions of no interest and is unusable for further analysis. On occasion, however, the camera was temporarily stationary during changes in direction, such as the instant of transition from ebb to flow, or remained stationary for extended periods while it rested on the seabed. These conditions, typical of ROV video, necessitated adaptive sampling of the video to extract sharp still images for stitching and analysis.

The images were first sampled according to visual sharpness to remove blurry frames. The pixels were converted to grayscale, and each grayscale pixel value was compared with those of neighboring pixels. In sharp images, changes in contrast are abrupt and appear as large grayscale differences between neighboring pixels. Conversely, blurry images show a more gradual change in grayscale values in regions of contrast change. Thus, a threshold on the derivative of the grayscale pixel value with respect to pixel distance was used to remove blurry images.
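A minimal sketch of such a sharpness filter is given below. The exact statistic and threshold used in the study are not stated, so the sketch assumes the mean squared spatial gradient of the grayscale image as the per-frame score; the function names and the threshold value are hypothetical. The grayscale conversion uses the BT.709 luma weights, consistent with the color space of the video.

```python
import numpy as np


def to_grayscale(rgb):
    """Convert an H x W x 3 RGB frame to grayscale using BT.709 luma weights."""
    weights = np.array([0.2126, 0.7152, 0.0722])
    return rgb[..., :3].astype(np.float64) @ weights


def sharpness_score(gray):
    """Mean squared spatial gradient (assumed statistic, not the paper's).

    Abrupt edges give large neighboring-pixel differences, and squaring
    rewards them more than the gradual transitions of a blurry frame.
    """
    gy, gx = np.gradient(gray.astype(np.float64))
    return float(np.mean(gx ** 2 + gy ** 2))


def select_sharp_frames(frames, threshold):
    """Return indices of frames whose sharpness score meets the threshold."""
    return [
        i for i, frame in enumerate(frames)
        if sharpness_score(to_grayscale(frame)) >= threshold
    ]
```

In practice the threshold would be tuned on a few frames judged sharp or blurry by eye, since the score depends on scene content as well as on focus and motion.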

The sharp image dataset was then sampled to remove redundant frames. If the camera is stationary, the change in pixel value over time is small. Thus, redundant frames from stationary periods can be identified and removed by calculating the magnitude of the partial derivative of the grayscale pixel value with respect to time.
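The redundancy test can be sketched as a finite-difference approximation of the temporal derivative. The sketch below assumes that each candidate frame is compared with the most recently kept frame and that the mean absolute grayscale difference serves as the magnitude; both choices, along with the function name and threshold, are hypothetical.

```python
import numpy as np


def drop_redundant_frames(gray_frames, threshold):
    """Return indices of frames that differ enough from the last kept frame.

    The temporal derivative is approximated by the mean absolute grayscale
    difference between a frame and the most recently kept frame (assumption).
    """
    if not gray_frames:
        return []
    kept = [0]
    last = gray_frames[0].astype(np.float64)
    for i in range(1, len(gray_frames)):
        current = gray_frames[i].astype(np.float64)
        change = float(np.mean(np.abs(current - last)))
        if change >= threshold:
            kept.append(i)
            last = current
    return kept
```

Comparing against the last kept frame, rather than the immediately preceding one, prevents a slow drift from being discarded entirely when each individual step falls below the threshold.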

Combining Images (Video Mosaicking)

Using the sampled frames from the video, we began to construct a mosaic of the debris field on the ocean floor. The composite image was produced by combining several still images using our customized implementation of the auto-stitch algorithm (Brown and Lowe, 2007). For this method to work properly, either the camera must only rotate or the scene must be planar. Since the ROV changes position, we rely on the second assumption: that the scene, in this case the seabed, is roughly planar.

Local scale-invariant feature transform (SIFT) features were identified in all images (Lowe, 2004). Timestamps were present in every frame of the video, which created spurious features during the subsequent matching step. Because the timestamp does not occupy a fixed, real-world location, the pixel locations of the timestamp box were identified in every image, and any SIFT features located within this box were removed.
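The removal of features inside the timestamp box can be sketched as a simple mask over keypoint coordinates. The sketch assumes that keypoints are available as an N x 2 array of (x, y) pixel coordinates with one descriptor row per keypoint; the function name and the box coordinates in the usage note are hypothetical.

```python
import numpy as np


def remove_points_in_box(points_xy, descriptors, box):
    """Drop keypoints (and their descriptors) that fall inside a pixel box.

    box is (x_min, y_min, x_max, y_max) in pixel coordinates, inclusive.
    """
    x_min, y_min, x_max, y_max = box
    pts = np.asarray(points_xy, dtype=np.float64).reshape(-1, 2)
    desc = np.asarray(descriptors)
    inside = (
        (pts[:, 0] >= x_min) & (pts[:, 0] <= x_max)
        & (pts[:, 1] >= y_min) & (pts[:, 1] <= y_max)
    )
    return pts[~inside], desc[~inside]
```

For a 1,440 × 1,080 frame with a timestamp assumed to occupy the lower-right corner, a call such as `remove_points_in_box(pts, desc, (1200, 1000, 1440, 1080))` would keep only features that correspond to scene content.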

The remaining features were saved per image. As adjacent images were paired, the corresponding features were loaded and matched. Using the pixel coordinates of the matched features, an eight-degrees-of-freedom (8-DOF) homography matrix was calculated using least-squares error minimization. Outliers were eliminated using the Random Sample Consensus (RANSAC) algorithm (Fischler and Bolles, 1981). Using the transformation matrix, the paired frames of the video were stitched, and corresponding features were united. The stitched image and the united features were saved for use during the next iteration. In the overlapping regions of the stitched images, the visually sharper image was used. This was achieved by comparing the magnitudes of the gradient images and laying the higher-magnitude image on top of the lower-magnitude image. The advantage of this method of handling the overlapping regions is that it minimizes the error accumulated during each iteration due to resampling. To improve the visual quality of the stitched pairs, the timestamp region was modified to have an alpha value of zero (fully transparent). When the images are stitched, this allows non-timestamped pixels of one image to show through the transparent region of the other image, thus filling in regions containing a timestamp with scene data (Figure 5).
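The homography estimation step can be sketched as follows. This is not the customized implementation used in the study; it is a minimal NumPy illustration that assumes matched feature coordinates are already available, fixes the last matrix entry to 1 so that eight unknowns remain, and uses a basic RANSAC loop with a hypothetical iteration count and pixel tolerance.

```python
import numpy as np


def fit_homography(src, dst):
    """Least-squares homography mapping src -> dst, with h33 fixed to 1."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    n = len(src)
    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for i, ((x, y), (u, v)) in enumerate(zip(src, dst)):
        A[2 * i] = [x, y, 1, 0, 0, 0, -u * x, -u * y]
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -v * x, -v * y]
        b[2 * i], b[2 * i + 1] = u, v
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map N x 2 points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def ransac_homography(src, dst, n_iters=500, tol=3.0, seed=0):
    """Estimate a homography while rejecting outlier matches.

    n_iters, tol (pixels), and seed are hypothetical defaults.
    Returns the homography refit on all inliers and the inlier mask.
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # Four correspondences are the minimum needed for eight unknowns.
        idx = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[idx], dst[idx])
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
            inliers = err < tol
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("too few inliers to fit a homography")
    return fit_homography(src[best], dst[best]), best
```

Refitting on the full inlier set after the RANSAC loop corresponds to the least-squares minimization described above, applied only to matches that survive outlier rejection.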

The pairwise SIFT matching and transformation process is inherently parallel: multiple pairs can be stitched concurrently on different processor cores. Subsequent

Figure 4. Video processing and mosaicking techniques utilized.

226

March 2016

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING