applications where UAVs are used for spraying chemicals on crops, controlled by a WSN deployed on the crop field. Samseemoung et al. (2012) designed a low altitude remote sensing (LARS) helicopter, weighing 6 kg with a payload capacity of 5 kg and equipped with a commercial true color (RGB) camera and a color-infrared (G-R-NIR) digital camera, for monitoring crop growth and weed infestation in a soybean plantation while flying at altitudes of up to 15 m. A LARS system based on a helicopter weighing 6 kg with a 5 kg payload, equipped with a multispectral imaging system, was also proposed by Swain et al. (2010) and Swain and Zaman (2012) to determine rice crop coverage, with the aim of predicting rice yield for planning and forecasting. Bendig et al. (2013a and 2013b) obtained crop surface models of rice fields from stereo images in order to analyze crop growth and health status. Their platform is an octo-copter with a payload of 1 kg, equipped with a 400 g true color RGB sensor. In the context of corn yield prediction, Geipel et al. (2014) used a hexa-copter equipped with standard navigation sensors (IMU, GNSS) to acquire RGB imagery, which was later ortho-rectified to produce DEMs and maps, leading to the computation of vegetation indices. Uto et al. (2013) characterized rice paddies using a miniature hyperspectral system onboard a UAV.
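
Several of the studies above derive vegetation indices from RGB imagery. As an illustration only, the sketch below computes one widely used RGB index, the Excess Green index (ExG = 2g - r - b, where r, g, b are the channels divided by their sum), and thresholds it into a vegetation mask. The choice of index and the fixed 0.1 threshold are assumptions made here, not the procedure of any cited study.

```python
import numpy as np

def excess_green(rgb: np.ndarray) -> np.ndarray:
    """Excess Green index ExG = 2g - r - b on chromatic (sum-normalised) coordinates.

    rgb: array of shape (H, W, 3) holding red, green and blue digital numbers.
    """
    rgb = rgb.astype(np.float64)        # avoid uint8 overflow when summing
    total = rgb.sum(axis=-1)
    total[total == 0] = 1.0             # black pixels: avoid division by zero (ExG becomes 0)
    r = rgb[..., 0] / total
    g = rgb[..., 1] / total
    b = rgb[..., 2] / total
    return 2.0 * g - r - b

def vegetation_mask(rgb: np.ndarray, threshold: float = 0.1) -> np.ndarray:
    """Boolean mask of pixels whose ExG exceeds a hypothetical fixed threshold."""
    return excess_green(rgb) > threshold

# Tiny synthetic 1 x 3 image: a green leaf, grey gravel and brownish soil.
image = np.array([[[40, 160, 30], [100, 100, 100], [120, 90, 60]]], dtype=np.uint8)
print(excess_green(image).round(3))
print(vegetation_mask(image))
```

In practice a data-driven threshold (for example Otsu's method) is often preferred over a fixed value, because illumination changes between flights shift the index distribution.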

Torres-Sánchez et al. (2013a, 2013b, and 2014), Peña-Barragán et al. (2012a and 2012b), and Peña et al. (2013) used a quad-copter with a payload of 1.25 kg, carrying a lightweight (700 g) CMOS multispectral sensor with six individual digital channels and, in some cases, a commercial high-resolution RGB true color camera. Each camera can be installed onboard on its own to derive vegetation indices for crop and weed detection, from which weed coverage maps are generated for site-specific treatment of maize crops. The images, stored on SD and CF cards, are preprocessed to align the channels correctly, as required for accurate ortho-rectification and mosaicking before map generation. A set of georeferenced ground control points (GCPs) is used for this purpose. Small changes in flight altitude can produce significant differences in the resolution of the ortho-images. An accuracy study in wheat fields infested by broad-leaved and grass weeds was carried out by Gómez-Candón et al. (2014), in which precision maps for farm applications were built using a quad-copter equipped with a device providing CIR images. Peña et al. (2015) conducted several studies quantifying the efficacy and limitations of UAV-acquired remote images for detecting and discriminating weeds, as affected by their spectral, spatial, and temporal resolutions. Yue et al. (2012) studied crop pest monitoring, both for prevention and for control once infestation has occurred.
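
The sensitivity of ortho-image resolution to flight altitude noted above follows from the pinhole-camera relation GSD = H * p / f, where GSD is the ground sample distance (ground footprint of one pixel), H the flying height above ground, p the sensor pixel pitch and f the focal length. The minimal sketch below assumes a nadir-looking camera over flat terrain and a hypothetical sensor (5.2 µm pixels behind an 8.5 mm lens) that is not taken from any of the cited studies.

```python
def ground_sample_distance(altitude_m: float, pixel_pitch_m: float, focal_length_m: float) -> float:
    """Ground footprint of one pixel (m) for a nadir view over flat terrain (pinhole model)."""
    if altitude_m <= 0 or pixel_pitch_m <= 0 or focal_length_m <= 0:
        raise ValueError("altitude, pixel pitch and focal length must be positive")
    return altitude_m * pixel_pitch_m / focal_length_m

# Hypothetical sensor parameters, chosen only for illustration.
PIXEL_PITCH = 5.2e-6   # 5.2 micrometres
FOCAL_LENGTH = 8.5e-3  # 8.5 millimetres

for altitude in (30.0, 33.0, 60.0):
    gsd_cm = 100.0 * ground_sample_distance(altitude, PIXEL_PITCH, FOCAL_LENGTH)
    print(f"{altitude:4.0f} m above ground -> {gsd_cm:.2f} cm per pixel")
```

Because GSD grows linearly with H, a 10% rise in altitude enlarges the ground area covered by each pixel by about 21% (1.1² ≈ 1.21), so images taken at slightly different heights end up with noticeably different resolutions.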

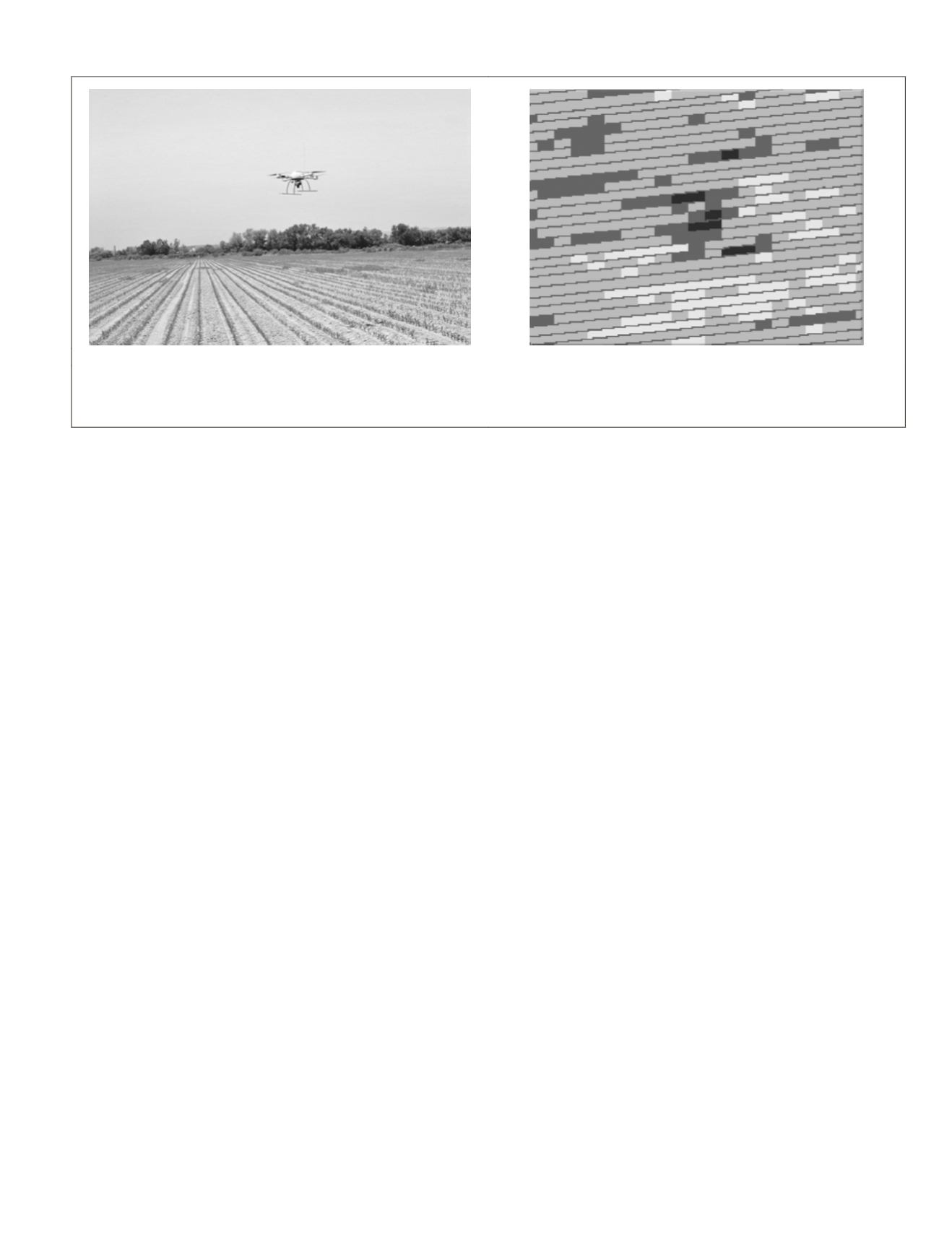

Figure 7a shows a quad-rotor UAV from the CartoUAV (2015) company flying over a maize crop field to detect weed patches in order to design site-specific herbicide treatments.

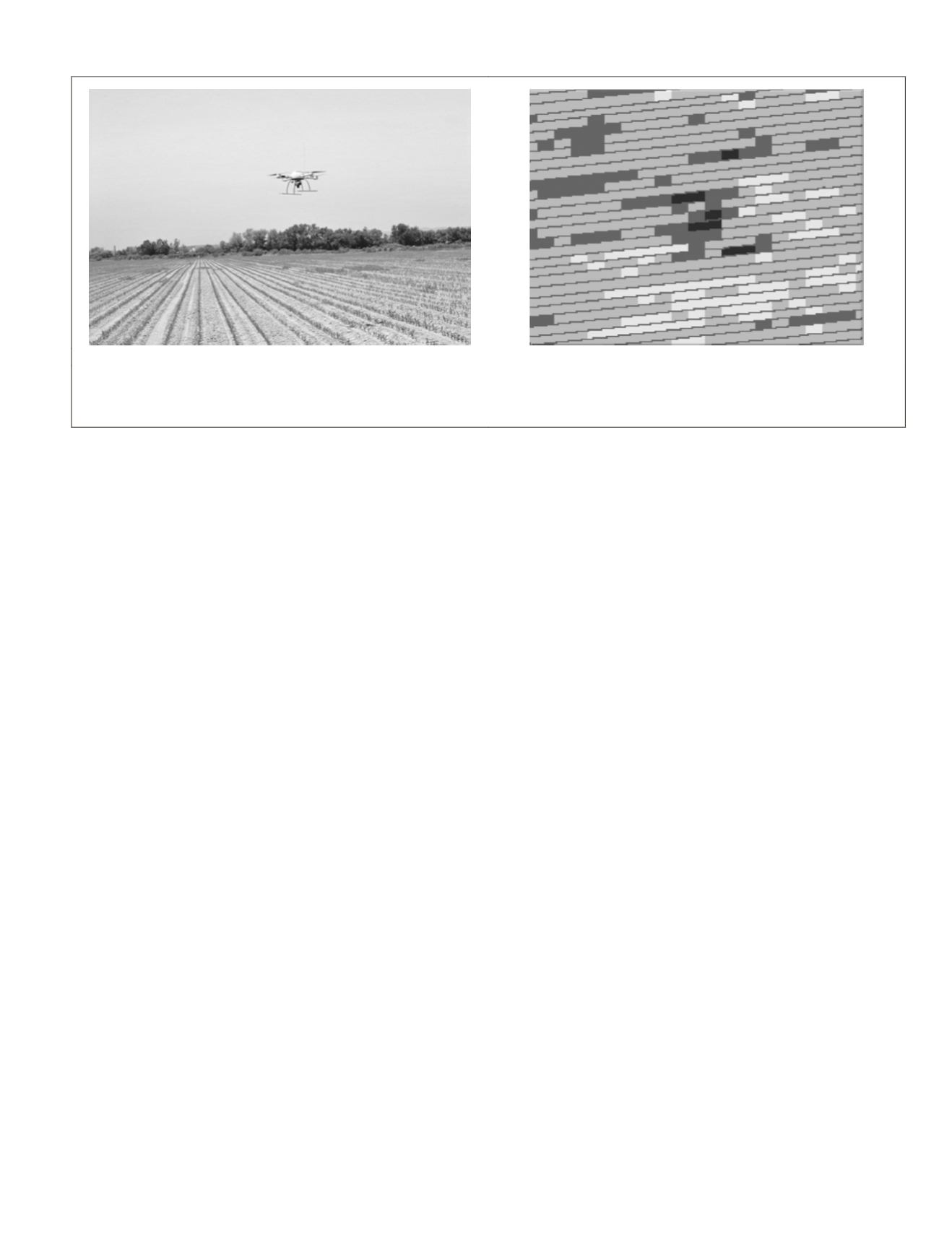

The images are mosaicked and segmented to identify crop rows and discriminate crops from weeds, with the aim of building density maps of crop and weed coverage. Figure 7b shows a map obtained from UAV images with three levels of weed infestation (low, moderate, and high, in ascending greyscale), crop rows (in grey), and weed-free zones (in white). The image displayed in Figure 7b is adapted from Peña et al. (2013).
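
The step from segmented images to a graded weed map such as Figure 7b can be sketched as a per-cell weed-cover computation. This simplified illustration, not the procedure of Peña et al. (2013), assumes that a boolean vegetation mask and a boolean crop-row mask are already available, treats any vegetation outside the crop rows as weed, and uses a hypothetical grid-cell size and hypothetical cover thresholds for the low, moderate, and high classes.

```python
import numpy as np

def weed_density_map(vegetation: np.ndarray, crop_rows: np.ndarray, cell: int,
                     thresholds: tuple[float, float] = (0.05, 0.20)) -> np.ndarray:
    """Grade square grid cells as 0 = weed-free, 1 = low, 2 = moderate, 3 = high infestation.

    vegetation, crop_rows: boolean masks of equal shape (H, W).
    cell: cell size in pixels; incomplete cells at the right/bottom edges are dropped.
    thresholds: hypothetical weed-cover fractions separating low/moderate and moderate/high.
    """
    if vegetation.shape != crop_rows.shape:
        raise ValueError("masks must have the same shape")
    weeds = vegetation & ~crop_rows            # assumption: vegetation off the crop rows is weed
    rows, cols = weeds.shape[0] // cell, weeds.shape[1] // cell
    blocks = weeds[:rows * cell, :cols * cell].reshape(rows, cell, cols, cell)
    cover = blocks.mean(axis=(1, 3))           # fraction of weed pixels in each cell
    levels = np.zeros(cover.shape, dtype=np.int8)
    levels[cover > 0] = 1
    levels[cover >= thresholds[0]] = 2
    levels[cover >= thresholds[1]] = 3
    return levels

# Synthetic 10 x 40 field split into four 10 x 10 cells, with a crop row in column 5.
veg = np.zeros((10, 40), dtype=bool)
rows_mask = np.zeros_like(veg)
rows_mask[:, 5] = True
veg[:, 5] = True          # crop plants along the row: not counted as weed
veg[0, 12] = True         # 1 weed pixel in cell 1   -> 1 % cover
veg[0, 20:28] = True      # 8 weed pixels in cell 2  -> 8 % cover
veg[0:3, 30:40] = True    # 30 weed pixels in cell 3 -> 30 % cover
print(weed_density_map(veg, rows_mask, cell=10))
```

The cell size sets the spatial unit of the treatment map, so in practice it would be chosen to match the working width of the sprayer rather than fixed at 10 pixels as here.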

Honkavaara et al. (2013) used a multispectral system (see the Multispectral and Hyperspectral Subsection) for biomass estimation. Different vegetation densities in wheat and barley crops resulted from the different amounts of seed and fertilizer applied, which allowed the effect of these quantities on crop health and growth stage to be determined. Jannoura et al. (2015) also conducted experiments to monitor crop biomass from RGB images captured by a true color camera onboard a hexa-copter.

Rabatel et al. (2014a) proposed various methods to obtain visible and NIR bands simultaneously for agricultural applications, including weed monitoring. In one system, red (R) and NIR data were obtained from a single modified still camera: the internal NIR-blocking filter was removed, a red long-pass filter was inserted in front of the lens, and R and NIR were then computed as linear combinations of the raw channel data. In a second system, presented in the context of the RHEA project and its associated second conference (RHEA, 2015), the R and NIR bands are obtained with a pair of compact still cameras, one of them modified as described above. A dedicated image registration procedure was developed for this purpose (Rabatel and Labbé, 2014b). As part of the RHEA project activities, aerial images of wheat were acquired by a camera onboard the UAV from the AirRobot (2015) company (Figure 2b), with the aim of producing georeferenced maps for follow-up site-specific treatments in wheat fields. Hunt et al. (2010 and 2011) replaced the internal hot-mirror filter with a red-light-blocking filter to obtain data in the near-infrared, green, and blue bands (i.e., CIR bands). Leaf area index and the green normalized difference vegetation index were correlated in fertilized wheat crops.

Aerial reflectance measurements were conducted by Link-Dolezal et al. (2010 and 2012) in winter wheat for crop monitoring purposes, based on georeferenced images. The UAV, with

Figure 7. (a) UAV flying over a maize crop field to detect weed patches for site-specific herbicide treatments, and (b) weed density map obtained from UAV images with three levels of weed infestation (Images courtesy of F. López-Granados and J.M. Peña, Institute for Sustainable Agriculture, CSIC-Córdoba, Spain; adapted from Peña et al., 2013).

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

April 2015

295