Range-Image: Incorporating Sensor Topology for Lidar Point Cloud Processing

P. Biasutti, J-F. Aujol, M. Brédif, and A. Bugeau

Abstract

This paper proposes a novel methodology for lidar point cloud processing that takes advantage of the implicit topology of various lidar sensors to derive 2D images from the point cloud while bringing spatial structure to each point. The value of this methodology is then demonstrated by addressing the problems of segmentation and disocclusion of mobile objects in 3D lidar scenes acquired using street-based Mobile Mapping Systems (MMS). Most existing lines of research tackle these problems directly in 3D space. This work promotes an alternative approach that uses this image representation of the 3D point cloud, taking advantage of the fact that the problem of disocclusion has been intensively studied in the 2D image processing community over the past decade. Using the image derived from the sensor data by exploiting the sensor topology, a semi-automatic segmentation procedure based on depth histograms is presented. Then, a variational image inpainting technique is introduced to reconstruct the areas that are occluded by objects. Experiments and validation on real data demonstrate the effectiveness of this methodology in terms of both accuracy and speed.

Introduction

Over the past decade, street-based Mobile Mapping Systems (MMS) have met with great success, as their onboard 3D sensors are able to map full urban environments with very high accuracy. These systems are now widely used for various applications, from urban surveying to city modeling (Serna and Marcotegui, 2013; Hervieu et al., 2015; El-Halawany et al., 2011; Hervieu and Soheilian, 2013; Goulette et al., 2006).

Several systems have been proposed in order to perform these acquisitions. They mostly consist of optical cameras, 3D LiDAR sensors, and GPS combined with an Inertial Measurement Unit (IMU), mounted on a vehicle for mobility purposes (Paparoditis et al., 2012; Geiger et al., 2013). They provide multi-modal data that can be merged in several ways, such as LiDAR point clouds colored by optical images or LiDAR depth maps aligned with optical images. Although these systems lead to very complete 3D mapping of urban scenes by capturing optical and 3D details (pavements, walls, trees, etc.), providing billions of 3D points and RGB pixels per hour of acquisition, they often require further processing to suit their ultimate usage.

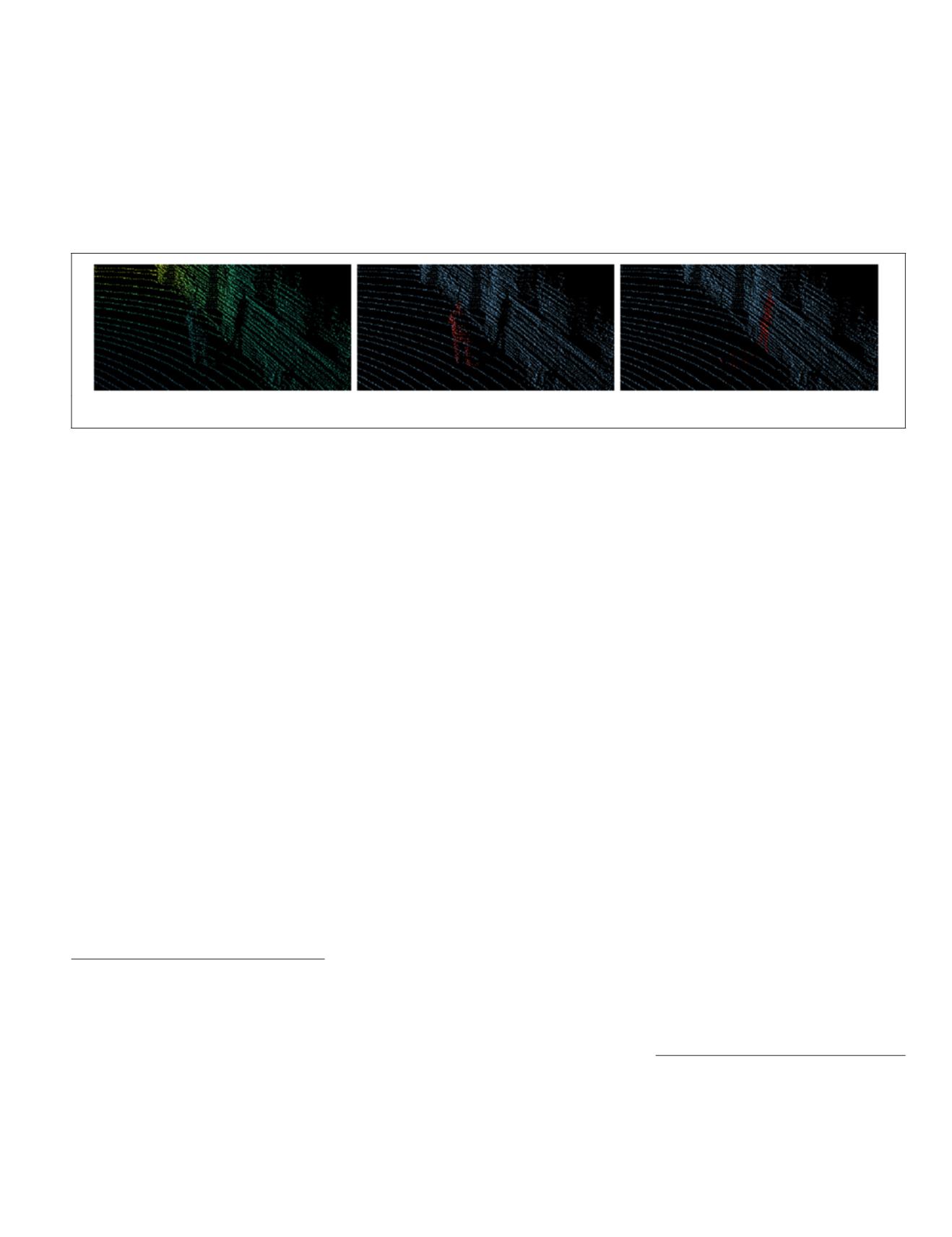

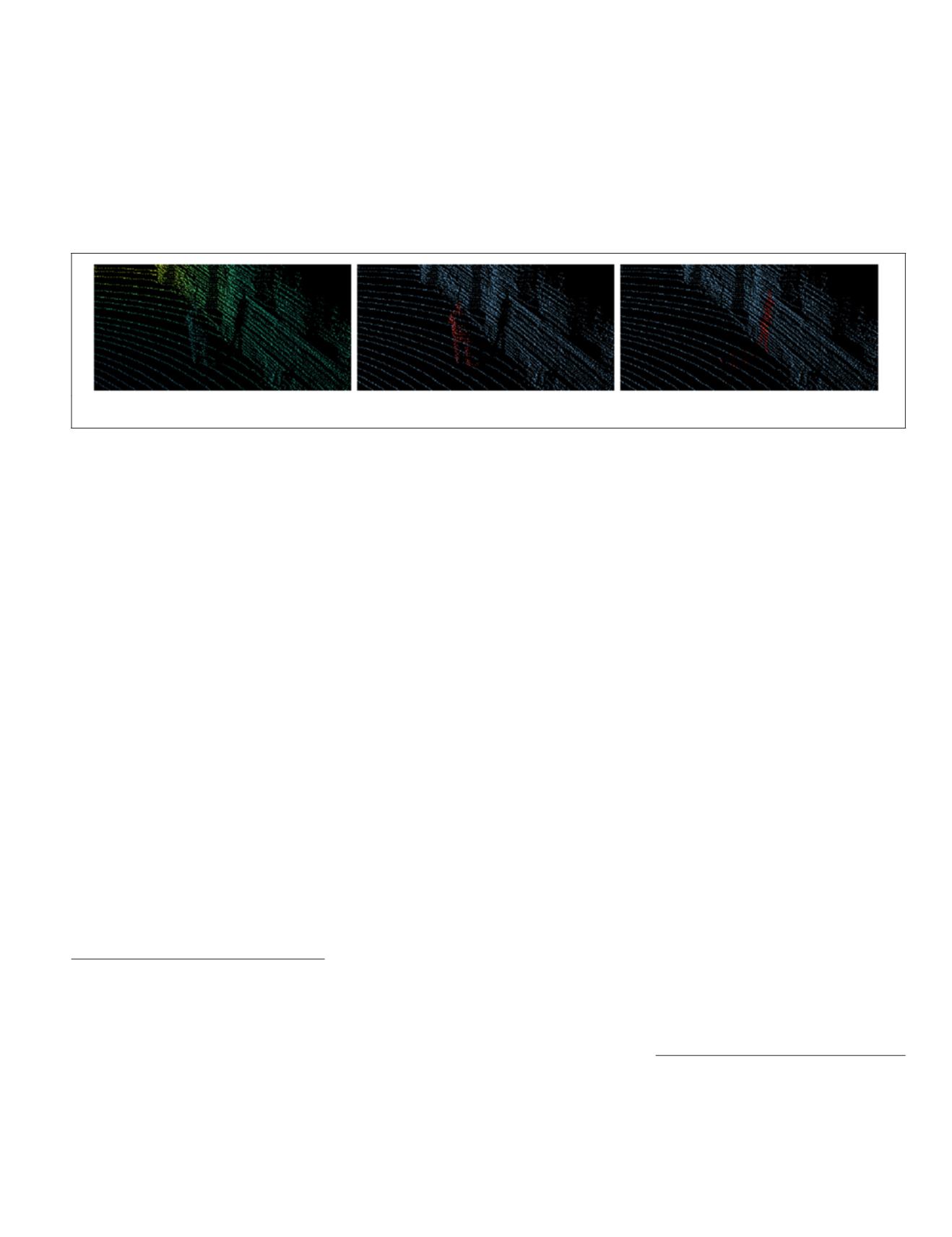

For example, MMS tend to acquire mobile objects that are not persistent in the scene. This often happens in urban environments with objects such as cars, pedestrians, traffic cones, etc. As LiDAR sensors cannot penetrate opaque objects, those mobile objects cast shadows behind them where no point has been acquired (Figure 1, left). Therefore, merging optical data with the point cloud can be ambiguous, as the point cloud might represent objects that are not present in the optical image. Moreover, these shadows are also largely visible when the point cloud is not viewed from the original acquisition point of view, which can be distracting and confusing for visualization. Thus, the segmentation of mobile objects and the reconstruction of their background remain strategic issues in order to improve the understanding of urban 3D scans.

We argue that working on simplified representations of the point cloud enables specific problems such as disocclusion to be solved not only using traditional 3D techniques but also using techniques brought by other communities (image processing in our case). Exploiting the sensor topology also brings spatial structure into the point cloud that can be used for other applications such as segmentation, remeshing, colorization, or registration.
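
To make the idea of exploiting the sensor topology concrete, the following is a minimal sketch, not the authors' implementation. It assumes a rotating multi-beam sensor whose returns arrive in acquisition order, `n_beams` entries per firing step, with `None` marking a missing return. The function name `build_range_image`, its parameters, and this input layout are all hypothetical and chosen only for illustration. Each point is placed at (row = beam index, column = firing step), and the pixel stores its distance to the sensor origin.

```python
import math


def build_range_image(points, n_beams, origin=(0.0, 0.0, 0.0)):
    """Arrange an acquisition-ordered point stream into a 2D range image.

    points  : sequence of (x, y, z) tuples, or None where no return was
              recorded, ordered step by step with n_beams entries per
              firing step (assumed layout, not taken from the paper).
    n_beams : number of lasers fired at each step (image height).
    origin  : sensor position used to compute the range.

    Returns (image, index): image[row][col] is the range of the point
    (NaN if missing) and index[row][col] is its position in `points`
    (-1 if missing), so every pixel can be mapped back to its 3D point.
    """
    if n_beams <= 0 or len(points) % n_beams != 0:
        raise ValueError("point count must be a multiple of n_beams")

    n_steps = len(points) // n_beams  # image width
    image = [[math.nan] * n_steps for _ in range(n_beams)]
    index = [[-1] * n_steps for _ in range(n_beams)]

    for i, p in enumerate(points):
        if p is None:
            continue  # leave the pixel empty (NaN / -1)
        col, row = divmod(i, n_beams)  # firing step, beam number
        image[row][col] = math.dist(p, origin)
        index[row][col] = i

    return image, index


# Two beams, two firing steps; the first beam misses at the second step.
stream = [(3.0, 4.0, 0.0), (0.0, 0.0, 2.0), None, (1.0, 0.0, 0.0)]
image, index = build_range_image(stream, n_beams=2)
```

Keeping the `index` grid alongside the range values is one way to retain the link between each pixel and its 3D point, so that a result computed in the image (for instance a segmentation mask) can be carried back to the point cloud.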

P. Biasutti is with the Université de Bordeaux, IMB, CNRS UMR 5251, INP, 33400 Talence, France; the Université de Bordeaux, LaBRI, CNRS UMR 5800, 33400 Talence, France; and Université Paris-Est, LASTIG MATIS, IGN, ENSG, F-94160 Saint-Mandé, France ( ).

J-F. Aujol is with the Université de Bordeaux, IMB, CNRS UMR 5251, INP, 33400 Talence, France.

A. Bugeau is with the Université de Bordeaux, LaBRI, CNRS UMR 5800, 33400 Talence, France.

M. Brédif is with the Université Paris-Est, LASTIG MATIS, IGN, ENSG, F-94160 Saint-Mandé, France.

Photogrammetric Engineering & Remote Sensing

Vol. 84, No. 6, June 2018, pp. 367–375.

0099-1112/18/367–375

© 2018 American Society for Photogrammetry and Remote Sensing

doi: 10.14358/PERS.84.6.367

Figure 1. Result of the segmentation and the disocclusion of a pedestrian in a point cloud using range images: (left) original point cloud, (center) segmentation using range image, (right) disocclusion using range image. The pedestrian is correctly segmented and its background is then reconstructed in a plausible way.
