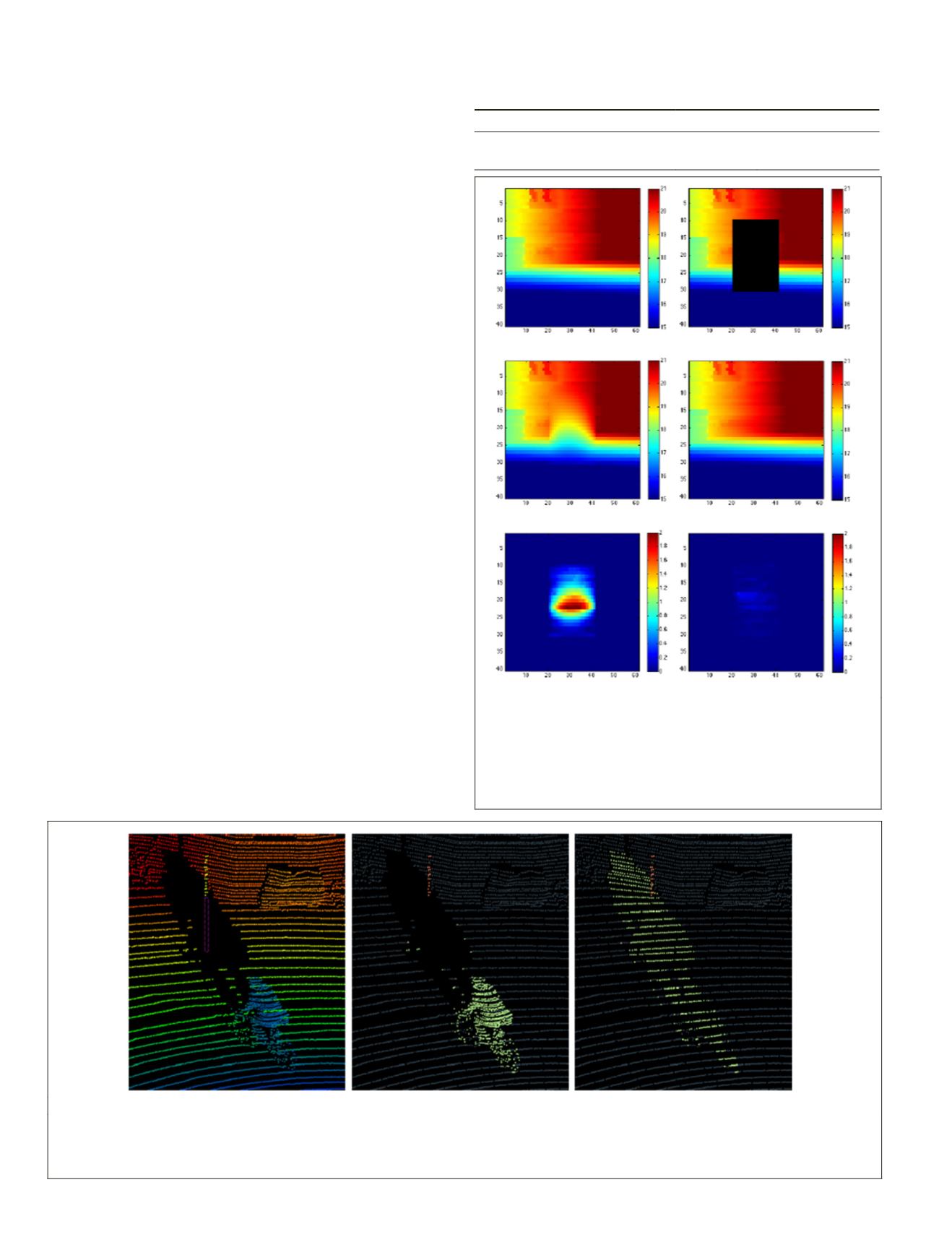

Figure 15 shows an example of disocclusion following this protocol. The result of our proposed model is visually very plausible, whereas the Gaussian diffusion oversmooths the reconstructed range image, which increases the MAE.
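The Python sketch below makes this comparison concrete. It implements a Gaussian-diffusion baseline on a range image and scores it with the MAE restricted to the occluded pixels. It is an illustration under stated assumptions and not necessarily the implementation used in our experiments. We assume the baseline is isotropic heat-equation inpainting solved with an explicit 5-point scheme (time step `dt = 0.25`, replicated image borders). The names `gaussian_diffusion_inpaint` and `masked_mae` are hypothetical.

```python
import numpy as np

# Hypothetical helpers (assumption): the Gaussian-diffusion baseline is modeled
# as isotropic heat-equation inpainting with an explicit 5-point Laplacian.

def gaussian_diffusion_inpaint(range_img, mask, n_iter=2000, dt=0.25):
    """Fill the pixels where `mask` is True by iterating the heat equation.

    Known pixels are never modified, so they act as fixed boundary values
    for the occluded region. dt <= 0.25 keeps the explicit 2D scheme stable.
    """
    u = np.asarray(range_img, dtype=float).copy()
    u[mask] = u[~mask].mean()  # initialize the hole with the mean known range
    for _ in range(n_iter):
        p = np.pad(u, 1, mode="edge")  # replicate image borders
        lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * u
        u[mask] += dt * lap[mask]  # update occluded pixels only
    return u

def masked_mae(estimate, ground_truth, mask):
    """Mean absolute error computed on the occluded pixels only."""
    return float(np.mean(np.abs(estimate[mask] - ground_truth[mask])))
```

On a planar (linear-ramp) range image, the diffused values converge to the exact plane inside the hole, because a linear function has zero Laplacian. Sharp depth discontinuities inside the hole, such as object boundaries, are instead smoothed out. This is consistent with the oversmoothing visible in Figure 15c.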

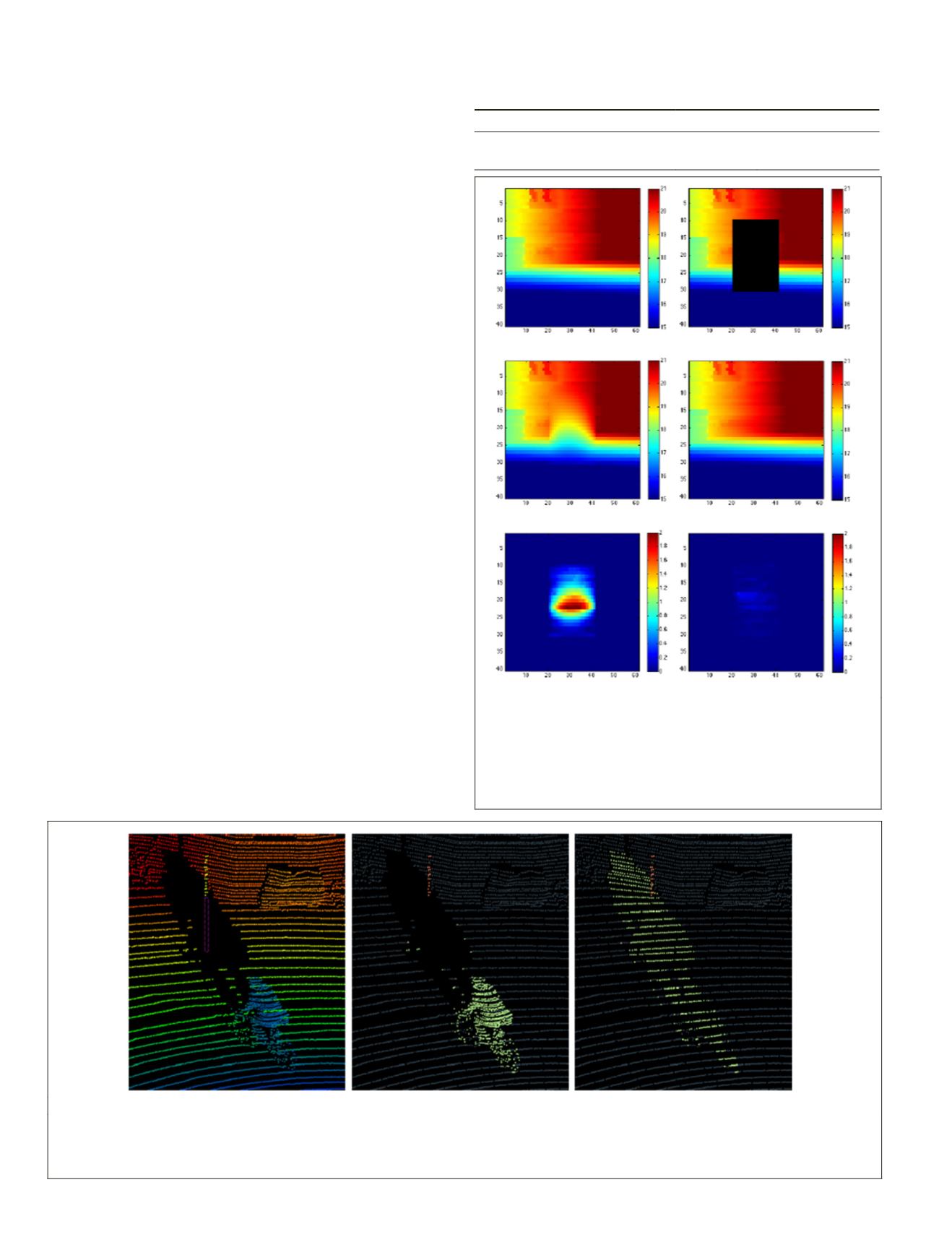

Overlapping Objects

Although the proposed disocclusion method performs well in realistic scenarios, as demonstrated above, the reconstruction quality can be questionable in some specific contexts. Indeed, when two small objects (pedestrians, poles, cars, etc.) overlap in front of the 3D sensor (i.e., one object is in front of the other), the disocclusion of the closer object may not fully recover the farther one. Figure 16a shows an example of such a scenario, where the goal is to remove the cyclist (highlighted in green in Figure 16b). In this case, a pole (highlighted in orange in Figure 16b) is situated between the cyclist and the background. Figure 16c presents the disocclusion of the cyclist. The background is reconstructed in a plausible way; however, details of the occluded part of the pole are not recovered.

Conclusions

In this paper, we have proposed a novel methodology for LiDAR point cloud processing that relies on the implicit topology provided by most recent LiDAR sensors. Working with the range image derived from the sensor topology simplifies the problem. Instead of determining an unknown number of 3D points, we only need to estimate the 1D range along the ray directions of a fixed set of range image pixels. Beyond drastically reducing the search space, this formulation directly provides a reasonable sampling pattern for the reconstructed point set. It also directly yields a robust estimate of the neighborhood of each point according to the acquisition, while reducing computation time and memory usage.
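The Python sketch below illustrates this formulation. It scatters 3D points into a range image, rebuilds points from one scalar range per pixel along fixed ray directions, and reads neighborhoods directly from the pixel grid. It relies on a simplified sensor model that is an assumption, not taken from the text. In this model, rows are indexed by laser, each laser has a known elevation angle, and columns are uniform azimuth bins whose ray passes through the bin center. All function names are hypothetical.

```python
import numpy as np

# Simplified sensor model (assumption): row = laser index with a known
# elevation angle, column = uniform azimuth bin over [-pi, pi).

def ray_directions(elevations, n_cols):
    """Unit ray direction through the center of every pixel, shape (rows, cols, 3)."""
    az = (np.arange(n_cols) + 0.5) / n_cols * 2.0 * np.pi - np.pi
    el = np.asarray(elevations, dtype=float)[:, None]
    return np.stack([
        np.cos(el) * np.cos(az),
        np.cos(el) * np.sin(az),
        np.broadcast_to(np.sin(el), (el.shape[0], n_cols)),
    ], axis=-1)

def points_to_range_image(points, laser_ids, n_lasers, n_cols):
    """Scatter 3D points into a range image; 0 marks pixels with no return."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    r = np.sqrt(x**2 + y**2 + z**2)
    azimuth = np.arctan2(y, x)  # in [-pi, pi]
    cols = np.floor((azimuth + np.pi) / (2.0 * np.pi) * n_cols).astype(int) % n_cols
    img = np.zeros((n_lasers, n_cols))
    img[laser_ids, cols] = r  # if two points share a pixel, one overwrites the other
    return img

def range_image_to_points(img, elevations):
    """Rebuild 3D points: one scalar range per valid pixel along its fixed ray."""
    dirs = ray_directions(elevations, img.shape[1])
    valid = img > 0
    return img[valid][:, None] * dirs[valid]

def pixel_neighbors(row, col, n_rows, n_cols):
    """4-neighborhood read from the grid, wrapping around in azimuth."""
    nbrs = [(row, (col - 1) % n_cols), (row, (col + 1) % n_cols)]
    if row > 0:
        nbrs.append((row - 1, col))
    if row < n_rows - 1:
        nbrs.append((row + 1, col))
    return nbrs
```

With this representation, reconstructing a point only requires one scalar per pixel. Neighbors are found by index arithmetic (with wrap-around in azimuth) rather than by a spatial search such as a k-d tree query.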

To highlight the relevance of this methodology, we have proposed novel approaches for the segmentation and the disocclusion of objects in 3D point clouds acquired using MMS. These models take advantage of range images. We have also proposed an improvement of a classical imaging technique that takes the nature of the point cloud into account (a horizontality prior on the 3D embedding), leading to better results. The segmentation step can be performed online each time a new window is acquired. This yields a large speed improvement, constant memory requirements, and the possibility of online processing during the acquisition. Moreover, our model is designed to work semi-automatically using very few parameters in

Figure 16. Example of a scene where two objects overlap in the acquisition. (a) The original point cloud colored by depth with respect to the sensor, with the missing part of a pole highlighted by a dashed pink contour; (b) the two overlapping objects: a pole (highlighted in orange) and a cyclist (highlighted in green); (c) the disocclusion of the cyclist. Although the background is reconstructed in a plausible way, details of the occluded part of the pole are missing.

Table 1. Comparison of the average MAE (Mean Absolute Error) on the reconstruction of occluded areas.

| | Gaussian diffusion | Proposed model |
|---|---|---|
| Average MAE (meters) | 0.591 | 0.0279 |
| Standard deviation of MAEs (meters) | 0.143 | 0.0232 |


Figure 15. Example of results obtained for the quantitative experiment. (a) The original point cloud (ground truth); (b) the artificial occlusion, in dark; (c) the disocclusion result with the Gaussian diffusion; (d) the disocclusion result with our method; (e) the absolute difference between the ground truth and the Gaussian diffusion; (f) the absolute difference between the ground truth and our method. Scales are given in meters.

374

June 2018

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING