close to the ground (recall that the ground truth on IQmulus was manually labeled and is thus subject to errors). In the second enlarged part, one can see that points belonging to the car windows were not correctly retrieved by our model. This is because measurements in areas where the beam was strongly deviated (e.g., beams that were not reflected back along the direction in which they were emitted) are unreliable, as the estimated range is not realistic. Therefore, our model fails on windows, where the estimated 3D point is not close to the actual 3D surface. Note that a similar case appears for the rear-view mirror (Figure 8, left), which is made of a specular material that leads to erroneous measurements.

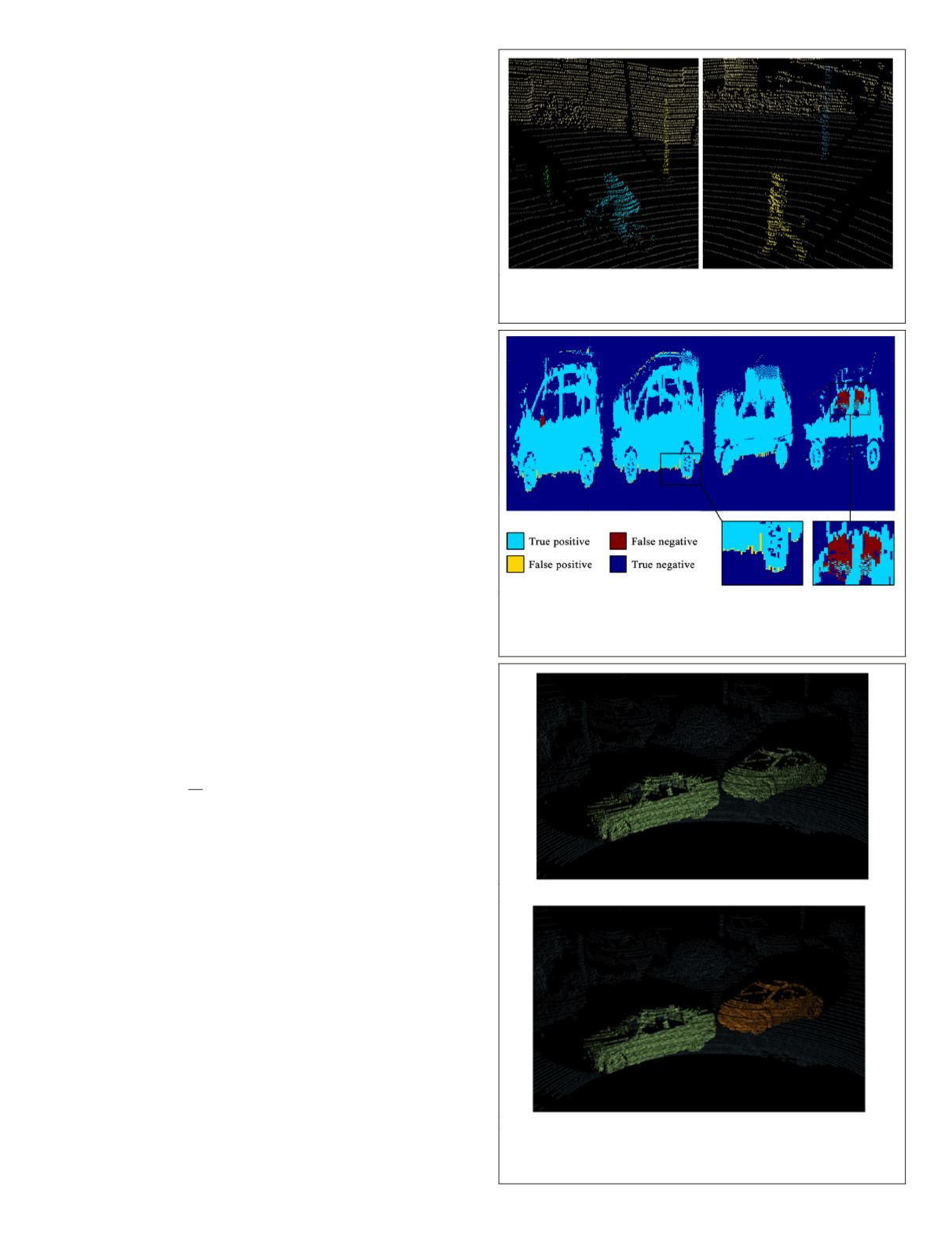

In some extreme cases, the segmentation is not able to separate objects that are too close to each other from the sensor's point of view. Figure 9a shows the result of the segmentation in a scene where two cars are segmented with the same label (symbolized by the same color). To better distinguish the different objects, one can simply compute the connected components of the points with respect to their 3D neighborhood (which can be computed using k-nearest neighbors (k-NN), for example). Figure 9b shows the result of such postprocessing on the same two cars: both cars are now distinguished from each other.
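The connected-component postprocessing can be sketched as follows. This is a minimal illustration, not the authors' implementation: it builds a k-NN graph over the points that share one label (brute force, pure Python) and extracts its connected components with a union-find structure. The function name `knn_connected_components` and its parameters `k` and `max_dist` are hypothetical; in particular, the distance threshold `max_dist` is an added assumption.

```python
import math


def knn_connected_components(points, k=4, max_dist=math.inf):
    """Split 3D points into connected components of a k-NN graph.

    points   : list of (x, y, z) tuples, e.g. all points sharing one label
    k        : number of nearest neighbors linked to each point (hypothetical default)
    max_dist : neighbors farther than this are not linked (hypothetical parameter)
    Returns one component id per point, numbered 0, 1, 2, ...
    """
    n = len(points)
    parent = list(range(n))

    def find(a):
        # Union-find root lookup with path halving.
        while parent[a] != a:
            parent[a] = parent[parent[a]]
            a = parent[a]
        return a

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    for i in range(n):
        # Brute-force k-NN, O(n^2): fine for a sketch; a k-d tree scales better.
        dists = sorted(
            (math.dist(points[i], points[j]), j) for j in range(n) if j != i
        )
        for d, j in dists[:k]:
            if d <= max_dist:
                union(i, j)

    # Relabel union-find roots as consecutive component ids.
    ids = {}
    return [ids.setdefault(find(i), len(ids)) for i in range(n)]
```

For two groups of three points 10 m apart, `knn_connected_components(car_a + car_b, k=2, max_dist=2.0)` separates them into components `0` and `1`. The threshold matters because a pure k-NN graph always links each point to k neighbors, however far away they are, so a small group with fewer than k + 1 points would otherwise be merged with the neighboring object.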

Application to Disocclusion

In this section, we show that the problem of disocclusion in a 3D point cloud can be addressed using basic image inpainting techniques.

Range Map Disocclusion Technique

The segmentation technique introduced above provides labels that can be manually selected to build masks. As mentioned at the beginning, we propose a variational approach to the disocclusion of the point cloud that leverages its range image representation. By considering the range image representation of the point cloud rather than the point cloud itself, the problem of disocclusion reduces to the estimation of a set of 1D ranges instead of a set of 3D points, where each range is associated with the ray direction of the pulse. Gaussian diffusion is a very simple algorithm for the disocclusion of objects in 2D images, which works by solving a partial differential equation. It is defined as follows:

$$
\begin{cases}
\dfrac{\partial u}{\partial t} - \Delta u = 0 & \text{in } \Omega \times (0, T), \\
u(0, x, y) = u_R(x, y) & \text{in } \Omega,
\end{cases}
\tag{3}
$$

where $u$ is an image defined on $\Omega$, $t$ is the time variable ranging over $(0, T)$, $u_R$ is the initial range image, and $\Delta$ is the Laplacian operator. As the diffusion is performed in every direction, the result of this algorithm is often very smooth. Therefore, the result in 3D lacks coherence, as shown in Figure 10b.
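Equation (3) can be discretized with an explicit Euler scheme and the standard 5-point Laplacian. The sketch below is an illustration under stated assumptions, not the authors' code: following common inpainting practice, only the pixels inside the mask are updated while known ranges stay fixed, and image borders are handled by replicating the nearest pixel. The name `gaussian_diffusion_inpaint` and the defaults `n_iter` and `dt` are hypothetical.

```python
def gaussian_diffusion_inpaint(u0, mask, n_iter=500, dt=0.2):
    """Fill masked pixels of a 2D range image by explicit heat diffusion.

    u0     : 2D list of ranges (initial image u_R); masked values are overwritten
    mask   : 2D list of booleans, True where the range must be reconstructed
    n_iter : number of explicit time steps (hypothetical default)
    dt     : time step; the explicit 5-point scheme is stable for dt <= 0.25
    """
    h, w = len(u0), len(u0[0])
    u = [row[:] for row in u0]  # work on a copy, leave the input untouched
    for _ in range(n_iter):
        new = [row[:] for row in u]
        for i in range(h):
            for j in range(w):
                if not mask[i][j]:
                    continue  # known ranges are kept fixed
                # Clamp indices at the border (replicated boundary).
                up, down = u[max(i - 1, 0)][j], u[min(i + 1, h - 1)][j]
                left, right = u[i][max(j - 1, 0)], u[i][min(j + 1, w - 1)]
                laplacian = up + down + left + right - 4.0 * u[i][j]
                # Explicit Euler step of du/dt = Laplacian(u).
                new[i][j] = u[i][j] + dt * laplacian
        u = new
    return u
```

Assuming the segmentation labels have been projected onto the range image grid, a mask can be built from the manually selected labels with `mask = [[lab in selected for lab in row] for row in labels]`. Because the Laplacian weighs all four neighbors equally, the filled values are isotropic averages of their surroundings, which is exactly the over-smoothing behavior described above.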

In this work, we show that the structures that require disocclusion are likely to evolve smoothly along the $x_W$ and $y_W$ axes of the real world, as defined in Figure 11a. Therefore, for each pixel, we set $\eta$ to be a unit vector orthogonal to the projection of $z_W$ in the range image $u_R$ (Figure 11b). This vector defines the direction in which the diffusion should be performed to respect this prior. Note that most MLS systems provide georeferenced coordinates for each point, which can be used to define $\eta$. For example, with a 2D LiDAR sensor oriented orthogonally to the path of the vehicle, one can define $\eta$ as the projection of the pitch angle of the acquisition vehicle.
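As a minimal sketch of the per-pixel construction of $\eta$, assume that the 2D direction $(a, b)$ of the projected vertical axis $z_W$ is already known at a pixel, in range-image coordinates with $x$ along columns and $y$ along rows (how it is obtained, e.g., from georeferenced coordinates, is left out). The helper names `eta_from_projected_vertical` and `directional_derivative` are hypothetical. The second function estimates the derivative of $u$ along $\eta$ with central differences, i.e., the quantity that vanishes when $u$ does not vary in the diffusion direction.

```python
import math


def eta_from_projected_vertical(a, b):
    """Unit vector orthogonal to the image-plane projection (a, b) of z_W.

    (a, b) is assumed to be the 2D direction of z_W at this pixel,
    with x along columns and y along rows of the range image.
    """
    norm = math.hypot(a, b)
    if norm == 0.0:
        raise ValueError("projection of z_W is degenerate at this pixel")
    # Rotate (a, b) by 90 degrees and normalize to unit length.
    return (-b / norm, a / norm)


def directional_derivative(u, i, j, eta):
    """Central-difference estimate of the derivative of u along eta at an interior pixel."""
    ux = (u[i][j + 1] - u[i][j - 1]) / 2.0  # derivative along x (columns)
    uy = (u[i + 1][j] - u[i - 1][j]) / 2.0  # derivative along y (rows)
    return ux * eta[0] + uy * eta[1]
```

For instance, if $z_W$ projects onto the image rows, `eta_from_projected_vertical(0.0, 2.0)` returns `(-1.0, 0.0)`, and a range image whose values change only from row to row has a zero derivative along this $\eta$. Either of the two unit normals can be used, since the sign of $\eta$ does not change the direction along which the diffusion acts.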

We aim to extend the level lines of $u$ along $\eta$. This can be expressed as $\langle \nabla u, \eta \rangle = 0$. Therefore, we define the energy $F(u) = \frac{1}{2}\left(\langle \nabla u, \eta \rangle\right)^2$. The disocclusion is then computed as a

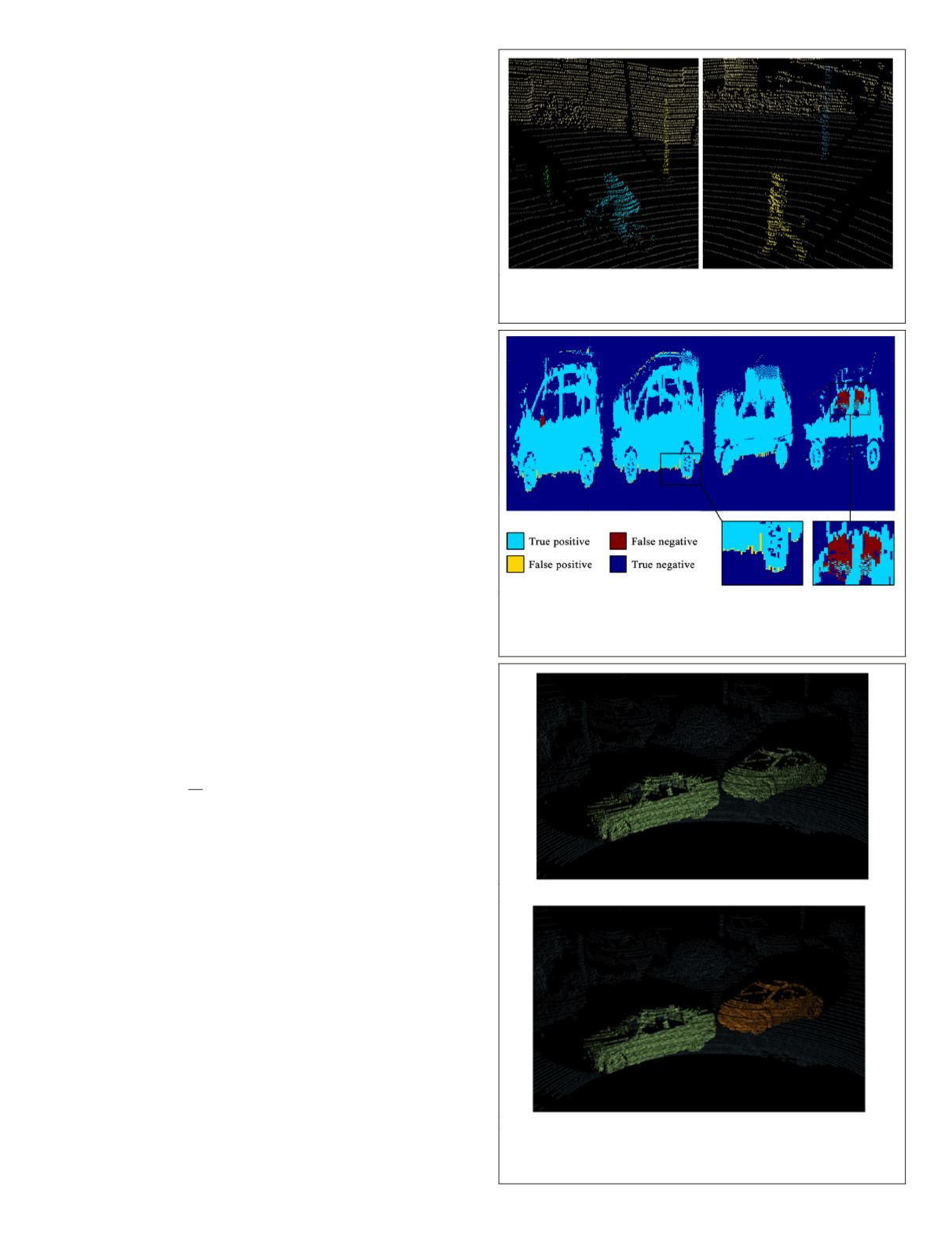

Figure 7. Example of point cloud segmentation using our model on various scenes. We can note how each label strictly corresponds to a single object (pedestrian, poles, walls).

Figure 8. Quantitative analysis of the segmentation of cars. Our segmentation result only slightly differs from the ground truth in areas close to the ground or for points that were strongly deviated, such as points through windows.


Figure 9. Result of the segmentation of a point cloud where two objects end up with the same label (a), and the labeling after considering the connected components (b).

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

June 2018

371