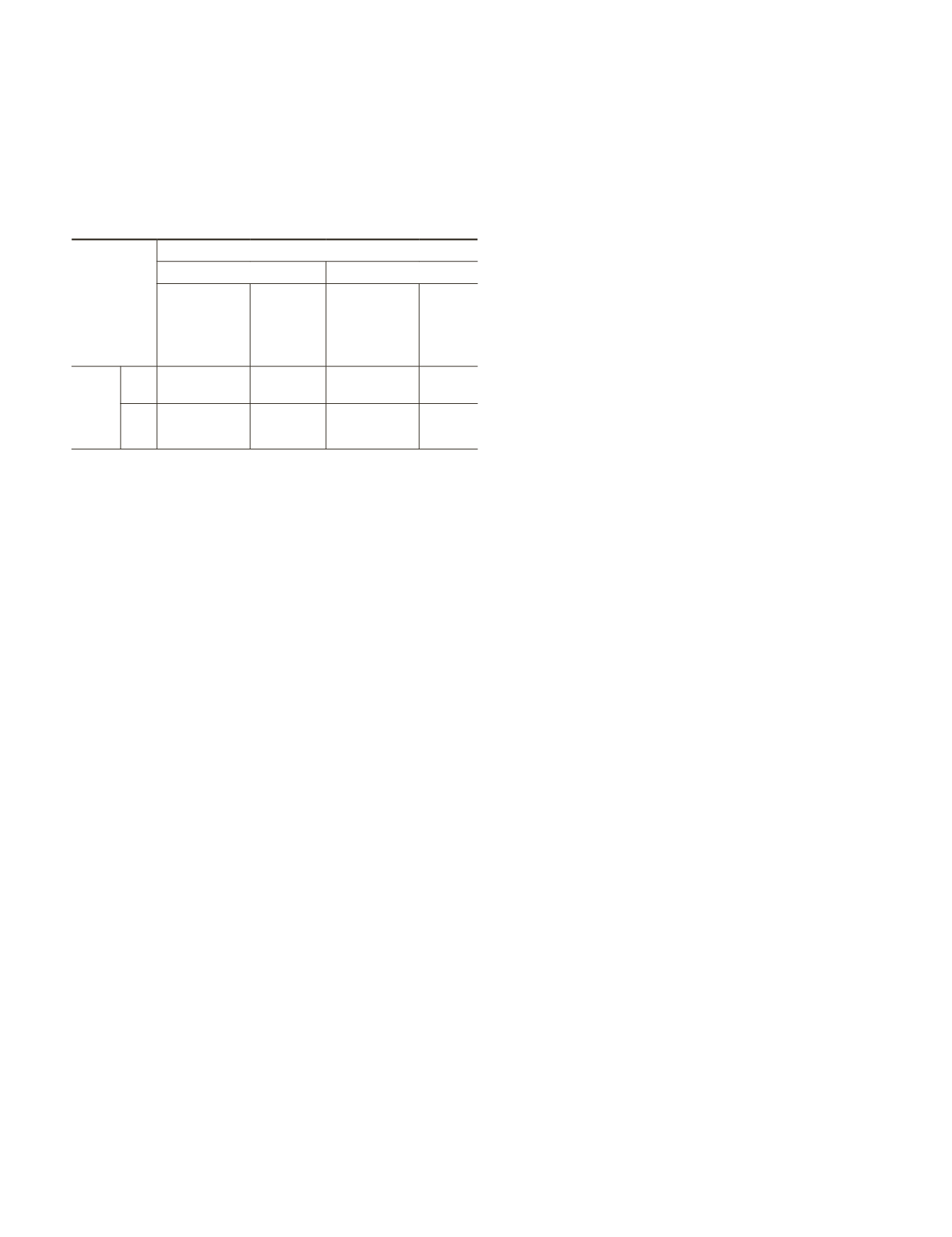

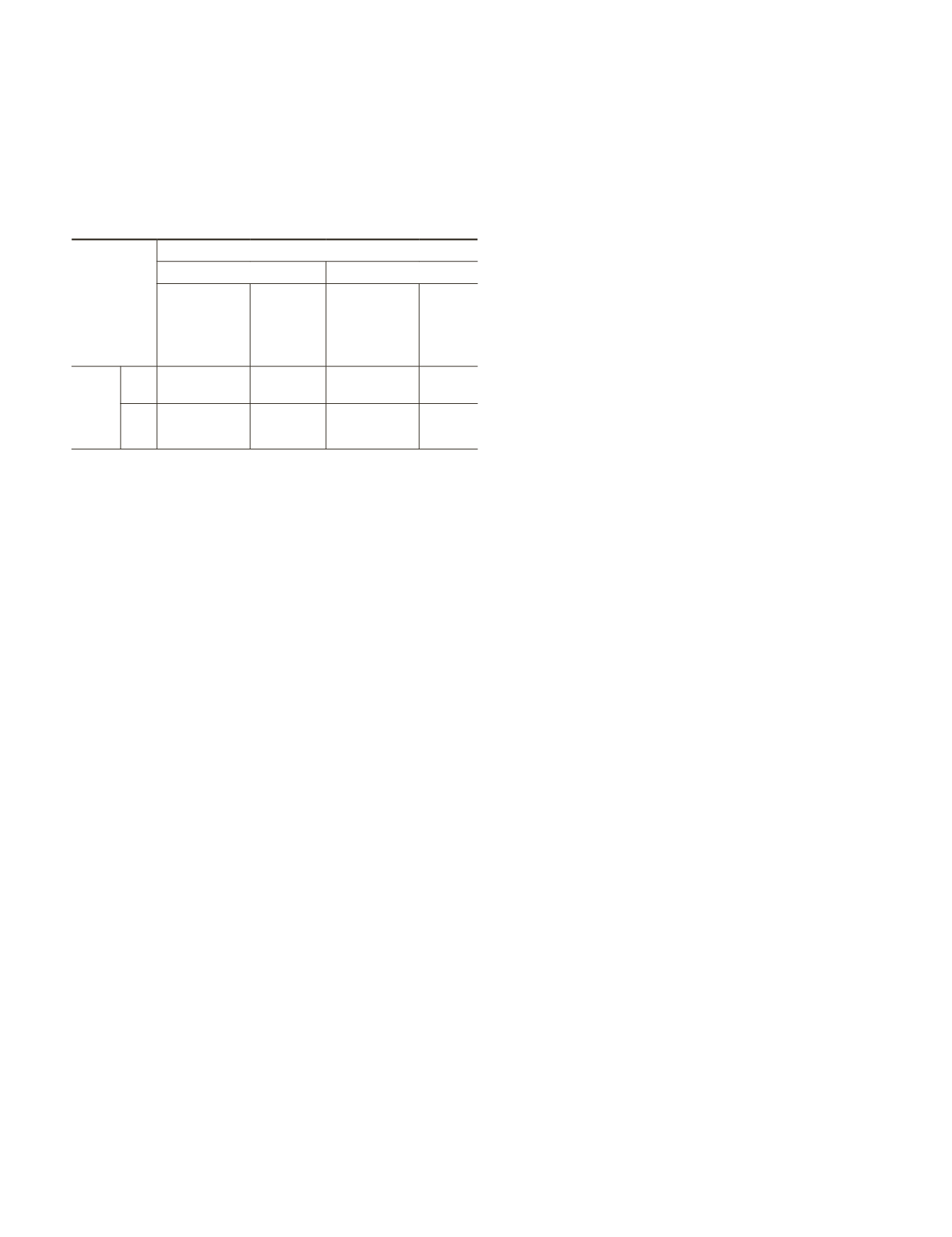

The residual errors in image space were also examined for the extreme cases in the experiments at 40 m range. The control points were back-projected to the image space, and the back-projected positions were compared with the true positions manually identified in the images acquired by the surveillance camera and the PTZ camera to obtain the image residuals. The results are summarized in Table 2.
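The back-projection check described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes an ideal pinhole camera without lens distortion, the convention `x_cam = R @ X_world + t`, and hypothetical values for the calibration matrix `K`, the pose, the control points, and the manually identified image positions. The source does not state whether the tabulated residuals are means or RMS values, so both the per-point residuals and an RMS are returned.

```python
import numpy as np

def back_project(points_world, K, R, t):
    """Project Nx3 world points to pixel coordinates with a pinhole model.

    Assumes x_cam = R @ X_world + t and no lens distortion.
    """
    pts_cam = points_world @ R.T + t        # world frame -> camera frame
    uv_h = pts_cam @ K.T                    # camera frame -> homogeneous pixels
    return uv_h[:, :2] / uv_h[:, 2:3]       # perspective division

def image_residuals(points_world, observed_px, K, R, t):
    """Return per-point residual lengths (pixels) and their RMS."""
    diff = back_project(points_world, K, R, t) - observed_px
    per_point = np.linalg.norm(diff, axis=1)
    return per_point, float(np.sqrt(np.mean(per_point ** 2)))

# Hypothetical example values (not taken from the paper).
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                               # camera axes aligned with world axes
t = np.zeros(3)                             # camera at the world origin
control_points = np.array([[0.0, 0.0, 40.0],
                           [2.0, 1.0, 40.0]])   # two control points at 40 m
observed = np.array([[641.0, 360.0],
                     [690.0, 387.0]])       # manually identified positions

per_point, rms = image_residuals(control_points, observed, K, R, t)
print(per_point, rms)
```

In a real evaluation, the same function would be called once per camera, using each camera's own calibrated `K`, `R`, and `t`.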

Table 2. Image Residuals for the Extreme Cases in the Experiments at 40 m Range

| Focal length of the PTZ camera | Baseline 0.5 m: residual on the surveillance camera image | Baseline 0.5 m: residual on the PTZ camera image | Baseline 1 m: residual on the surveillance camera image | Baseline 1 m: residual on the PTZ camera image |
|---|---|---|---|---|
| 10 mm | 2.76 pixels | 1.29 pixels | 2.63 pixels | 1.17 pixels |
| 100 mm | 2.72 pixels | 11.52 pixels | 2.42 pixels | 11.09 pixels |

From Table 2, it can be seen that when the baseline length increases from 0.5 m to 1 m, the image residuals decrease for both the 10 mm and 100 mm focal lengths of the PTZ camera. When the focal length of the PTZ camera increases from 10 mm to 100 mm, the image residuals on the surveillance camera image decrease for both the 0.5 m and 1 m baselines. It is not surprising that the image residuals on the PTZ camera image increase to over 10 pixels in this case, since its focal length increases by a factor of 10. The variation in image residuals across different PTZ camera pan angles is negligible.
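The link between focal length and PTZ image residual can be checked with simple arithmetic on the Table 2 values. For a fixed angular error and an unchanged pixel size, the displacement in the image is roughly proportional to the focal length, so a tenfold zoom should enlarge the residual by a factor of up to about 10. The short sketch below only computes the observed growth factors. The variable names are illustrative.

```python
# Image residuals on the PTZ camera image from Table 2, in pixels,
# keyed by baseline length (m) and then by PTZ focal length (mm).
ptz_residual_px = {
    0.5: {10: 1.29, 100: 11.52},
    1.0: {10: 1.17, 100: 11.09},
}

# Growth factor of the residual when the focal length goes from 10 mm to 100 mm.
growth = {b: r[100] / r[10] for b, r in ptz_residual_px.items()}

for baseline, factor in growth.items():
    print(f"baseline {baseline} m: residual grows by a factor of {factor:.2f}")
```

The factors come out at about 8.93 for the 0.5 m baseline and 9.48 for the 1 m baseline, both slightly below the tenfold increase in focal length.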

Conclusions and Discussion

We have presented a systematic analysis of the measurement accuracy of a dual camera system with an asymmetric photogrammetric configuration. We have drawn the following conclusions from theoretical derivation, Monte-Carlo simulation, and actual experimental analysis.

1. The baseline length was the main factor influencing the measurement accuracy of the dual camera system. This influence was more significant over long ranges than over short ranges.

2. The focal length of the PTZ camera influenced the measurement accuracy. The influence was related to the surveillance camera's focal length. When the PTZ camera's focal length was far longer than the surveillance camera's focal length, further increasing the former did not notably improve the accuracy.

3. The pan angle of the PTZ camera had an influence on the range measurement accuracy. This influence was more significant at long range than at short range. The best accuracy was achieved when the PTZ camera pointed to targets within a specific range of the middle of the baseline.

4. The optimum configuration for better than 1 percent measurement accuracy within a normal observation range (e.g., 60 m) was found using theoretical derivation, Monte-Carlo simulation, and actual experimental analysis. The optimum focal length for the surveillance camera was found to be 8 mm, combined with a PTZ camera focal length of greater than 60 mm, a PTZ camera pan angle within ±20°, and a baseline length between the two cameras of over 0.75 m.
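The first conclusion, on baseline length and range, can be illustrated with the textbook range-error approximation for a symmetric, normal-case stereo pair, sigma_Z ≈ Z² / (B · f) · sigma_d. This is a simplification swapped in for illustration, not the asymmetric derivation developed in this paper. The focal length in pixels (`FOCAL_PX`) and the disparity measurement error (`SIGMA_PX`) are hypothetical values.

```python
def range_error_normal_case(range_m, baseline_m, focal_px, disparity_sigma_px):
    """Approximate range error (m) for a symmetric normal-case stereo pair.

    Textbook first-order formula: sigma_Z = Z^2 / (B * f) * sigma_d,
    with f and sigma_d both expressed in pixels.
    """
    return range_m ** 2 / (baseline_m * focal_px) * disparity_sigma_px

FOCAL_PX = 2000.0   # hypothetical focal length in pixels
SIGMA_PX = 0.5      # hypothetical disparity measurement error in pixels

for baseline in (0.5, 1.0):
    for rng in (20.0, 40.0, 60.0):
        err = range_error_normal_case(rng, baseline, FOCAL_PX, SIGMA_PX)
        print(f"B = {baseline} m, Z = {rng:.0f} m: "
              f"sigma_Z ~ {err:.2f} m ({100 * err / rng:.2f}% of range)")
```

Under this simplified model the error grows with the square of the range and falls in inverse proportion to the baseline, so the absolute gain from a longer baseline is larger at long range, in line with the trend stated in the first conclusion.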

To date, a dual camera system with an asymmetric photogrammetric configuration has not been systematically investigated, owing to unusual difficulties such as the geometric modeling and calibration of cameras with different characteristics, correspondence between images with large differences (e.g., in scale, FOV, and coverage), camera orientation, camera coordination, and accuracy evaluation of the system. This paper has focused on the accuracy analysis of the asymmetric photogrammetric configuration, and these findings will facilitate research and development in other areas of asymmetric photogrammetry.

The proposed dual camera system is more flexible in the context of real surveillance than traditional camera networks, which could lead to a dramatic increase in the deployment of stereo video surveillance systems. By providing rapid 3D information and high-resolution imaging data, the camera system has enhanced capabilities for tracking moving objects. In combination with automated image analysis and camera coordination, this system is suitable for intelligent applications such as robotic exploration, traffic monitoring, and hazard detection.

Acknowledgments

The work described in this paper was supported by research grants from the Hong Kong Polytechnic University (Grant A/C: G-YN12 and A-PL58). The work was also supported by a grant from the Research Grants Council of Hong Kong (Project No: PolyU 5330/12E) and a grant from the National Natural Science Foundation of China (Project No: 91338110).


PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING, March 2015, 227