$$\mathrm{MP} = \frac{\mathrm{NCM}}{\mathrm{NTM}} \times 100\% \qquad (8)$$

where NTM is the number of total matches. A key issue in this formula is determining which matches are correct. We therefore use both an automatic method that counts correct matches approximately and a manual method that determines them precisely:

1. Automatic Evaluation: for frame camera images, a projective transformation is fitted to each image pair using manually selected, uniformly distributed control points; matches whose localization errors with respect to the fitted projective transformation are smaller than 2 pixels are regarded as correct matches. For linear CCD pushbroom images, the projective transformation is replaced by quadratic polynomials.

2.

Manual Evaluation Through Sampling

: for each pair of im-

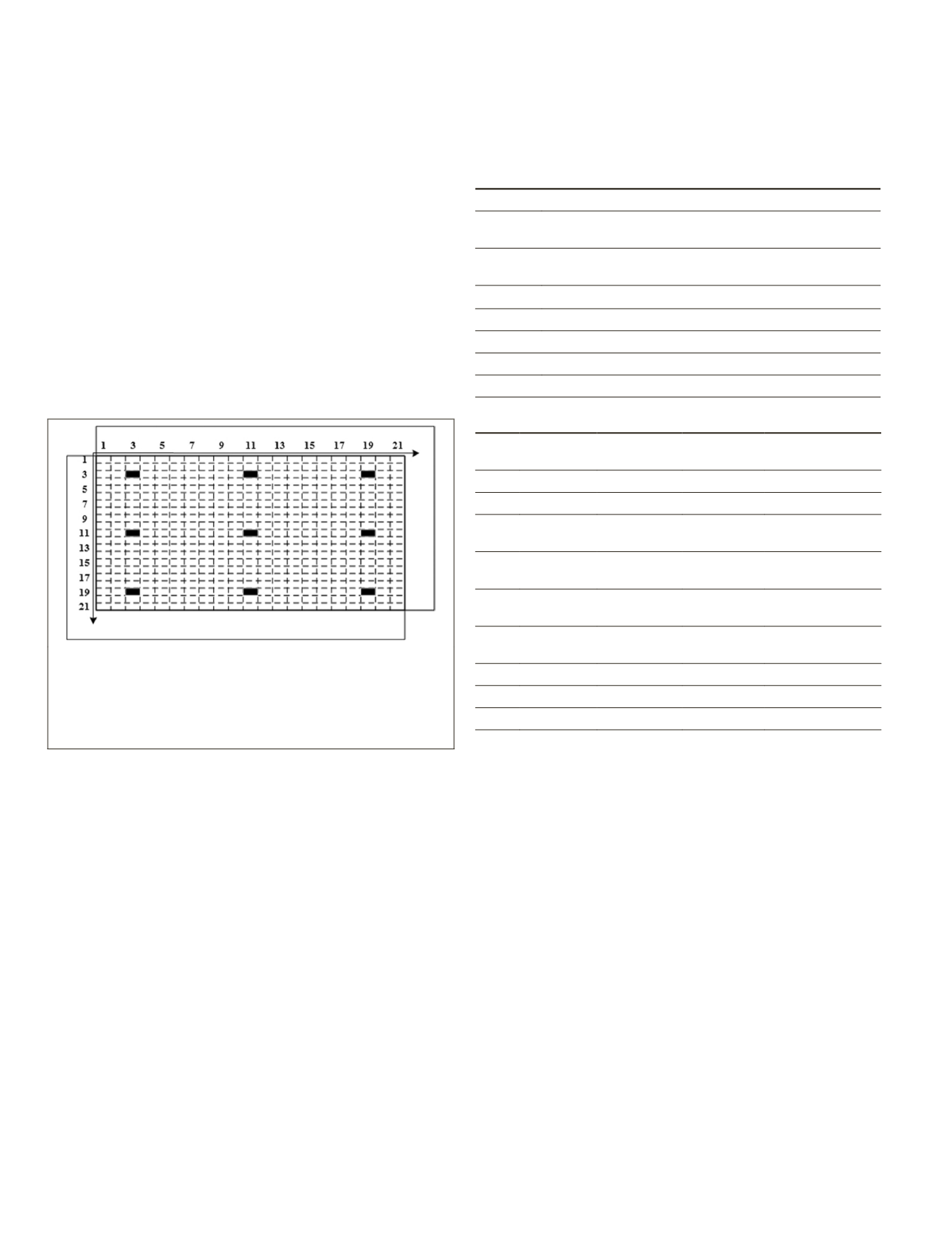

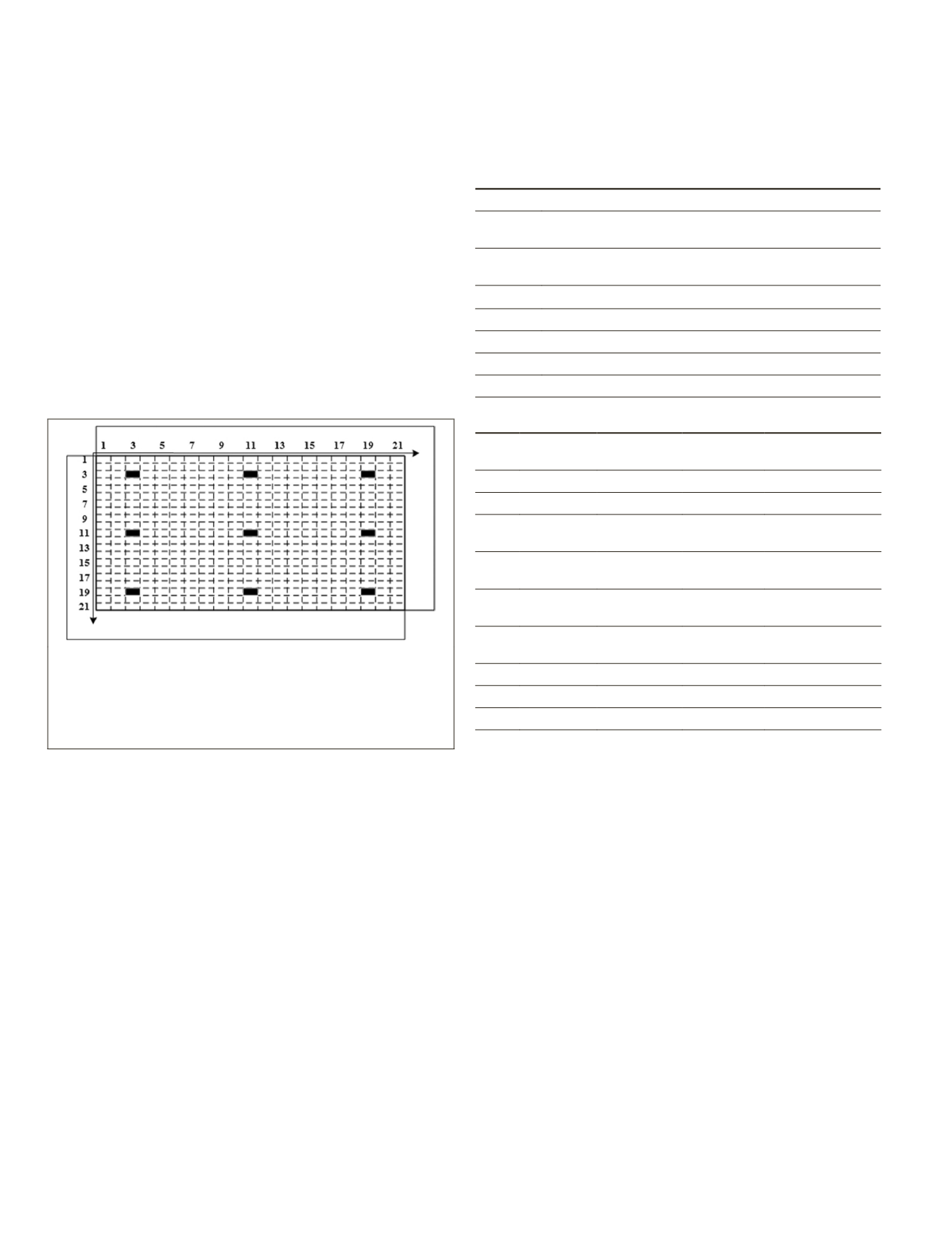

ages, the overlap region is divided into 21×21 sub-regions

as shown in Figure 4. Among the sub-regions, nine even-

distributed sub-regions are selected as samples and all

matches in these samples are checked manually and thus

yield the

MP

quantity.

Figure 4. Point sampling pattern of manual evaluation

method. The overlap region of these two images is divided

into 21×21 sub-regions (dotted box). Among of them, 9 even-

distributed sub-regions (filled sub-regions) are selected as

samples. We compute the

MP

values of each method in these

samples to represent the performance in the whole images.
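The automatic evaluation can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes the control points and the matches are given as N×2 arrays of pixel coordinates (image 1 to image 2), fits the projective transformation with a plain direct linear transform (DLT) and the quadratic polynomials by least squares, and counts a match as correct when its residual under the fitted model is below 2 pixels. All function names are hypothetical.

```python
import numpy as np


def fit_projective(src, dst):
    """Fit a 3x3 projective transformation src -> dst by the DLT (>= 4 points)."""
    rows = []
    for (x, y), (u, v) in zip(np.asarray(src, dtype=float), np.asarray(dst, dtype=float)):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.array(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_projective(H, pts):
    """Map N x 2 points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def quad_terms(pts):
    """Design matrix [1, x, y, x^2, xy, y^2] for quadratic polynomials."""
    x, y = pts[:, 0], pts[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])


def fit_quadratic(src, dst):
    """Least-squares quadratic polynomials (6 coefficients per output coordinate)."""
    A = quad_terms(np.asarray(src, dtype=float))
    coeffs, *_ = np.linalg.lstsq(A, np.asarray(dst, dtype=float), rcond=None)
    return coeffs  # shape (6, 2): one column per output coordinate


def apply_quadratic(coeffs, pts):
    return quad_terms(np.asarray(pts, dtype=float)) @ coeffs


def matching_precision(m1, m2, ctrl1, ctrl2, model="projective", tol=2.0):
    """Return (NCM, NTM, MP in percent) for matches m1[i] <-> m2[i]."""
    if model == "projective":  # frame camera images
        predicted = apply_projective(fit_projective(ctrl1, ctrl2), m1)
    elif model == "quadratic":  # linear CCD pushbroom images
        predicted = apply_quadratic(fit_quadratic(ctrl1, ctrl2), m1)
    else:
        raise ValueError(f"unknown model: {model}")
    errors = np.linalg.norm(predicted - np.asarray(m2, dtype=float), axis=1)
    ncm = int(np.count_nonzero(errors < tol))  # localization error < 2 px
    ntm = len(errors)
    mp = 100.0 * ncm / ntm if ntm else 0.0
    return ncm, ntm, mp
```

For real imagery, normalizing the coordinates before the DLT (Hartley normalization) improves numerical conditioning; it is omitted here for brevity.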

Thresholds Setting

Table 2 lists all the relevant parameters used in the experiments. The thresholds $k$ and $T_p$, which are used to compute pixel distinctiveness factor values and to find pixels with high values, are set as $k = 0.06$ and $T_p = 0.01 \times PF_{max}$, following the threshold settings of traditional pixel-distinctiveness-based detectors (Schmid et al., 2000), where $PF_{max}$ is the maximum observed pixel distinctiveness factor value. The number of sub-regions $M \times N$ used in overlap-region division does not affect the final result significantly; it is empirically set as 5×5.
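The pixel distinctiveness factor is not spelled out in this passage; the value $k = 0.06$ and the citation of Schmid et al. (2000) suggest a Harris-style response, so the sketch below assumes $PF = \det(\mathbf{M}) - k\,\mathrm{tr}(\mathbf{M})^2$ computed from a 3×3 box-summed structure tensor and keeps pixels above $T_p = 0.01 \times PF_{max}$. The equal-size $M \times N$ grid split is likewise an assumption about how the overlap region is divided, and non-maximum suppression is omitted. Names such as `distinctive_pixels` and `split_grid` are hypothetical.

```python
import numpy as np


def box_sum(a, radius=1):
    """Sum `a` over a (2*radius+1) x (2*radius+1) window (edge-padded)."""
    h, w = a.shape
    padded = np.pad(a, radius, mode="edge")
    out = np.zeros((h, w), dtype=float)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out


def distinctiveness_response(img, k=0.06, radius=1):
    """Assumed Harris-style factor: det(M) - k * trace(M)^2 per pixel."""
    img = np.asarray(img, dtype=float)
    gy, gx = np.gradient(img)  # derivatives along rows (y) and columns (x)
    sxx = box_sum(gx * gx, radius)
    syy = box_sum(gy * gy, radius)
    sxy = box_sum(gx * gy, radius)
    return sxx * syy - sxy * sxy - k * (sxx + syy) ** 2


def distinctive_pixels(img, k=0.06, ratio=0.01):
    """Return (row, col) coordinates with PF > T_p = ratio * PF_max, plus PF."""
    pf = distinctiveness_response(img, k)
    pf_max = pf.max()
    if pf_max <= 0:  # no corner-like pixel anywhere
        return np.empty((0, 2), dtype=int), pf
    rows, cols = np.nonzero(pf > ratio * pf_max)
    return np.column_stack([rows, cols]), pf


def split_grid(height, width, m=5, n=5):
    """Split a height x width region into m x n cells given as (r0, r1, c0, c1)."""
    r_edges = np.linspace(0, height, m + 1).astype(int)
    c_edges = np.linspace(0, width, n + 1).astype(int)
    return [(int(r_edges[i]), int(r_edges[i + 1]), int(c_edges[j]), int(c_edges[j + 1]))
            for i in range(m) for j in range(n)]
```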

$s\%$ and $t\%$ control the saliency of the detected LDFs and of the seed points, respectively. The setting of these two thresholds depends on the application. If the application requires correspondences with high matching precision and only a small number of correspondences is sufficient, a smaller $s\%$ is better. Otherwise, if the application requires a large number of correspondences, $s\%$ should be set larger. In our experiments, $s\%$ is empirically set as 50 percent. Seed matches are used to compute a coarse transformation between images, and a minimum of six matches is needed to compute this transformation. Because the reliability of these matches is more important than their number, a small value of $t\%$ is acceptable; it is empirically set as 5 percent in our experiments. The other two parameters, $r$ and $T_w$, play an important role in the proposed method. They are tuned in our study using a trial-and-error approach (Ye et al., 2017). The tuning is based on nine pairs of remote sensing images with repetitive patterns, including satellite, aerial, and UAV images, as shown in Table 3; these images are not part of our experimental regions.
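To make the roles of $s\%$ and $t\%$ concrete, the sketch below ranks candidate points by a distinctiveness score, keeps the top $s\%$ as LDFs, keeps the top $t\%$ of those LDFs as seed points, and checks the six-match minimum for the coarse transformation. It is a hypothetical illustration: it ranks candidates globally (the method may instead rank them per sub-region), and the helper names are not from the paper. With the experimental settings, 1,000 candidates give 500 LDFs and 25 seed points.

```python
import math

# Minimum number of seed matches stated in the text for the coarse transformation.
# (Assumption: one model consistent with this minimum is a quadratic polynomial,
# which has six coefficients per output coordinate; the text does not name it here.)
MIN_SEED_MATCHES = 6


def select_ldfs_and_seeds(scores, s_percent=50.0, t_percent=5.0):
    """Return (ldf_indices, seed_indices), each ordered by descending score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    n_ldf = math.ceil(len(order) * s_percent / 100.0)
    ldfs = order[:n_ldf]
    # `ldfs` is already sorted, so its head is the most distinctive t% of LDFs.
    n_seed = math.ceil(n_ldf * t_percent / 100.0)
    return ldfs, ldfs[:n_seed]


def can_fit_coarse_transform(num_seed_matches):
    """True when enough seed matches survive matching to fit the transformation."""
    return num_seed_matches >= MIN_SEED_MATCHES
```

Raising $s\%$ trades precision for quantity, as the text describes; keeping $t\%$ small only matters as long as the surviving seed matches stay at or above six.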

Table 2. Thresholds of the proposed method.

| Threshold | Description |
|---|---|
| $k$ | An empirically determined constant for the feature response computation in the LDF detection step |
| $T_p$ | The pixel distinctiveness factor value threshold in the LDF detection step |
| $M \times N$ | The number of sub-regions in overlap-region division |
| $s\%$ | The percentage of the most distinctive points to be selected as LDFs |
| $t\%$ | The percentage of the most distinctive LDFs to be selected as seed points |
| $r$ | Radius of the feature support region used to compute the SIFT descriptor |
| $T_w$ | The FIPS detection threshold in the matching step |

Table 3. Description of the datasets used for threshold tuning.

| Image Pair | Image Source | GSD (m) | Image Size (pixels) | Test Site |
|---|---|---|---|---|
| 1 | ZY-3 | 3.50 | 1000×1000 | Wuhan, China |
| 2 | IKONOS | 1.00 | 1000×1000 | Beijing, China |
| 3 | QuickBird | 0.61 | 1000×1000 | San Francisco, USA |
| 4 | WorldView-2 | 0.50 | 1000×1000 | San Francisco, USA |
| 5 | WorldView-3 | 0.30 | 1000×1000 | Buenos Aires, Argentina |
| 6 | Aerial | 0.38 | 1000×1000 | Zurich, Switzerland |
| 7 | Aerial | 0.10 | 1000×1000 | Taizhou, China |
| 8 | UAV | 0.01 | 1000×1000 | Guizhou, China |
| 9 | UAV | 0.01 | 1000×1000 | Nanchang, China |

1. Feature Support Region Radius $r$

To find a good value for $r$, all other thresholds are held constant: $k = 0.06$, $T_p = 0.01 \times PF_{max}$, $M \times N = 5 \times 5$, $s\% = 50\%$, $t\% = 5\%$, and $T_w = 0.004$. All nine image pairs described in Table 3 are matched under different feature support region radii (with image scale compensated through the GSD). The tested feature support region radius values are shown in Equation 9:

$$r = [8, 16, 24, 32, 40, 48, 56, 64, 72, 80, 88, 96] \qquad (9)$$

The resulting NCM and MP values are shown in Figure 5.

The experimental results in Figure 5 show that feature support regions that are either too small or too large produce low NCM and MP values. On one hand, a small feature support region may yield unreliable descriptors. On the other hand, a large feature support region may make the descriptors of corresponding points not distinctive enough to be recognized. The results also show that the overall peak performance in both NCM and MP is obtained when the region radius is set to 48 pixels.
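The radius sweep can be organized as below. This is a hypothetical sketch: it generates the candidate radii of Equation 9 and, given NCM and MP values aggregated over the nine tuning pairs for each radius (how they are aggregated is not specified here), picks the radius with the highest mean of min-max-normalized NCM and MP. That combined score is our assumption for "overall peak performance"; the matching run that produces the values is not shown.

```python
def candidate_radii(start=8, stop=96, step=8):
    """Radii of Equation 9: 8, 16, ..., 96 pixels."""
    return list(range(start, stop + 1, step))


def min_max_normalize(values):
    """Rescale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def pick_radius(radii, ncm, mp):
    """Radius maximizing the mean of normalized NCM and normalized MP."""
    scores = [(a + b) / 2.0 for a, b in zip(min_max_normalize(ncm), min_max_normalize(mp))]
    best = max(range(len(radii)), key=lambda i: scores[i])
    return radii[best]
```

Normalizing first keeps NCM, a count, from dominating MP, a percentage, when the two are combined.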

2. FIPS Detection Threshold $T_w$

To tune the threshold $T_w$, we compute the feature repeatability rate (FRR) of the nine image pairs in Table 3 under different $T_w$ values.
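The FRR is not defined in this passage; a common definition, following Schmid et al. (2000), divides the number of repeated features by the smaller of the two feature counts, where a feature counts as repeated if, after mapping it into the other image with the known transformation, it lies within ε pixels of a detected feature. The sketch below assumes that definition, a known 3×3 projective transformation `H`, and ε = 1.5 pixels, and it skips restricting the points to the common region. The FIPS detector itself, which would be run once per candidate $T_w$, is not shown.

```python
import numpy as np


def project(H, pts):
    """Map N x 2 points through a 3 x 3 projective transformation H."""
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def repeatability_rate(pts_1, pts_2, H, eps=1.5):
    """Repeated features / min(n1, n2), with H mapping image 1 to image 2."""
    pts_1 = np.asarray(pts_1, dtype=float).reshape(-1, 2)
    pts_2 = np.asarray(pts_2, dtype=float).reshape(-1, 2)
    if len(pts_1) == 0 or len(pts_2) == 0:
        return 0.0
    # Pairwise distances between projected image-1 points and image-2 points.
    d = np.linalg.norm(project(H, pts_1)[:, None, :] - pts_2[None, :, :], axis=2)
    # Count repeats in both directions and keep the smaller count, so the
    # rate cannot exceed 1 when several points crowd around one feature.
    repeated = min(np.count_nonzero(d.min(axis=1) <= eps),
                   np.count_nonzero(d.min(axis=0) <= eps))
    return repeated / min(len(pts_1), len(pts_2))
```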

518

August 2018

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING