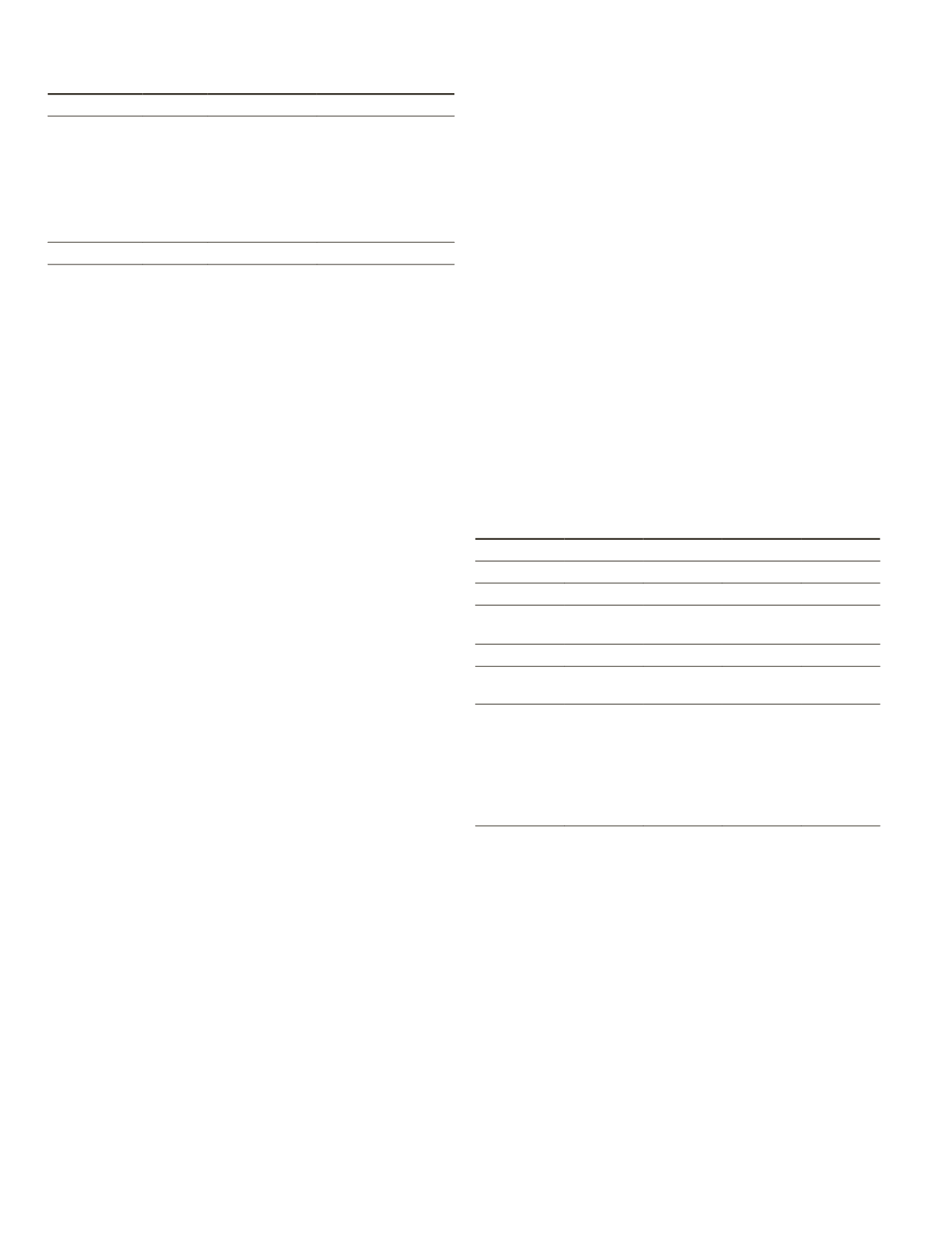

Table 1. WorldView-2 multispectral bands (DigitalGlobe, 2017).

| Band name | Band # | Spectral range, FWHM* (nm) | Band center (nm) |
|---|---|---|---|
| Coastal blue | 1 | 400-450 | 425 |
| Blue | 2 | 450-510 | 480 |
| Green | 3 | 510-580 | 545 |
| Yellow | 4 | 585-625 | 605 |
| Red | 5 | 630-690 | 660 |
| Red Edge | 6 | 705-745 | 725 |
| Near Infrared-1 | 7 | 770-895 | 835 |
| Near Infrared-2 | 8 | 860-1,040 | 950 |

\*FWHM = full width at half maximum.

The WorldView-2 images were orthorectified to the Universal Transverse Mercator coordinate system, calibrated to at-sensor radiance, and atmospherically corrected to surface reflectance using the FLAASH module in the commercial software Environment for Visualizing Images (ENVI) (Harris Corporation, Melbourne, Florida). All remote sensing analyses were performed in ENVI.

Methodology

Forest Cover Indices and the Normalized Difference Vegetation Index

The Forest Cover Index 1 (FCI1) and Forest Cover Index 2 (FCI2) were developed to separate forest from other land covers using the following equations:

$$\mathrm{FCI1} = R_{660} \times R_{725} \tag{1}$$

$$\mathrm{FCI2} = R_{660} \times R_{835} \tag{2}$$

where $R_{660}$, $R_{725}$, and $R_{835}$ are reflectance in the Red, Red Edge, and Near Infrared-1 bands, respectively (Table 1). These equations were developed after visually comparing spectra of tree cover with spectra of other vegetation. The spectral profiles of trees were similar to those of other vegetative cover, but the red, red edge, and near infrared reflectance values of trees were consistently lower. Traditional vegetation indices, simple ratios, and normalized difference indices do not exploit this difference between trees and other land covers. We found that multiplying reflectance in pairs of bands emphasized the difference between trees and other vegetative land covers.
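Equations 1 and 2 are per-pixel products, so a few lines of Python are enough to sketch them. The function names `fci1` and `fci2` and the sample reflectance values are hypothetical illustrations, not values from the study. In practice the same expressions would be evaluated on every pixel of the surface reflectance image.

```python
def fci1(r660: float, r725: float) -> float:
    """Forest Cover Index 1 (Equation 1): Red x Red Edge surface reflectance."""
    return r660 * r725


def fci2(r660: float, r835: float) -> float:
    """Forest Cover Index 2 (Equation 2): Red x Near Infrared-1 surface reflectance."""
    return r660 * r835


if __name__ == "__main__":
    # Hypothetical reflectance values (not from the study): the "tree" pixel is
    # darker than the "grass" pixel in all three bands, as the text describes.
    pixels = {
        "grass": {"r660": 0.06, "r725": 0.25, "r835": 0.45},
        "tree": {"r660": 0.03, "r725": 0.15, "r835": 0.30},
    }
    for name, px in pixels.items():
        print(f"{name}: FCI1={fci1(px['r660'], px['r725']):.4f}, "
              f"FCI2={fci2(px['r660'], px['r835']):.4f}")
```

The ratio of two products equals the product of the per-band ratios. When a tree pixel is darker in both bands, its FCI value therefore drops by a larger factor than either band does on its own. This is consistent with tree areas appearing darker in the FCI images.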

The Normalized Difference Vegetation Index (NDVI) (Rouse et al., 1974) provides a measure of vegetative vigor. It is frequently used to delineate green vegetation from non-vegetation and occasionally used to delineate trees from other land covers (Peters et al., 2002; White et al., 2016; Bandyopadhyay et al., 2013). NDVI was calculated using the following equation:

$$\mathrm{NDVI} = \frac{R_{835} - R_{660}}{R_{835} + R_{660}} \tag{3}$$

where $R_{660}$ and $R_{835}$ are reflectance in the Red and Near Infrared-1 bands, respectively (Table 1).
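A scaling check shows one reason ratio-type indices do not exploit a uniform darkening. Suppose a pixel's Red and Near Infrared-1 reflectance are both multiplied by the same factor k. NDVI (Equation 3) is unchanged, because k cancels between numerator and denominator. FCI2 (Equation 2), by contrast, is multiplied by k². The sketch assumes a hypothetical k = 0.5 and hypothetical reflectance values. Real tree spectra are not exactly proportional to those of other vegetation, so this is an idealized illustration rather than a result from the study.

```python
def ndvi(r660: float, r835: float) -> float:
    """NDVI (Equation 3): (R835 - R660) / (R835 + R660)."""
    total = r835 + r660
    if total == 0:
        raise ValueError("R835 + R660 must be non-zero")
    return (r835 - r660) / total


def fci2(r660: float, r835: float) -> float:
    """FCI2 (Equation 2): R660 * R835."""
    return r660 * r835


if __name__ == "__main__":
    r660, r835 = 0.06, 0.45  # hypothetical bright vegetation pixel
    k = 0.5                  # hypothetical: same spectral shape, 50% darker in both bands
    print(f"NDVI: {ndvi(r660, r835):.4f} -> {ndvi(k * r660, k * r835):.4f}")
    print(f"FCI2: {fci2(r660, r835):.4f} -> {fci2(k * r660, k * r835):.4f}")
```

NDVI returns the same value for both pixels, while FCI2 falls to a quarter of its original value.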

In the FCI1 and FCI2 images, darker areas corresponded to trees and dense vegetation, while brighter areas corresponded to other vegetative features, including agriculture and grasses. On this basis, a binary mask was created that masked out forest cover while retaining other land covers in the FCI1 and FCI2 images, using the steps below. The same steps were also applied to the NDVI images.

1. Different land covers in the image were examined to determine a user-defined threshold value that separated trees from other vegetation covers.

2. A mask was built to separate out trees below the threshold value.

3. A 200-pixel (800 m²) group minimum and an 8-neighbor sieve were applied to the resulting image to remove small clusters of other land cover pixels that should have been identified as trees. The group minimum is the smallest group size to keep; after reviewing the distribution of land covers in the test site, a group minimum of 200 pixels was chosen. An 8-neighbor sieve connects each pixel to any of the eight pixels immediately adjacent to it (including diagonals) that belong to the same class, and it removes any connected group smaller than the group minimum.

4. A 3 × 3 pixel clump was applied to fill in small areas of other land cover pixels that were incorrectly identified as trees. This clump size was large enough to fill in erroneously identified pixels while retaining many individual trees and most small tree groups.

5. The output of Step 4 was applied as a mask to the original image, producing an image in which all trees were masked.
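The five masking steps can be sketched in pure Python on a small raster stored as nested lists. This is an approximation under stated assumptions, not the ENVI implementation the authors used:

- The sieve is assumed to act on the non-tree class. Any 8-connected group of non-tree pixels smaller than the group minimum is reassigned to tree.
- The clump is approximated as a 3 × 3 morphological closing (dilation followed by erosion) of the non-tree class.
- The function names and the `trees_below` parameter are hypothetical.

The 200-pixel group minimum corresponds to 800 m², which implies 4 m² per pixel, that is, a 2 m pixel.

```python
from __future__ import annotations

from collections import deque

# 8-neighborhood: the pixels immediately adjacent, including diagonals.
NEIGHBORS_8 = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def threshold_trees(index: list[list[float]], threshold: float,
                    trees_below: bool = True) -> list[list[bool]]:
    """Steps 1-2: True where a pixel is classed as tree.

    trees_below=True follows the text (trees fall below the threshold);
    False is a hypothetical option for indices where trees are brighter.
    """
    return [[(v < threshold) if trees_below else (v > threshold) for v in row]
            for row in index]


def sieve(tree: list[list[bool]], min_size: int = 200) -> list[list[bool]]:
    """Step 3 (assumed form): reassign 8-connected non-tree groups smaller
    than min_size pixels to tree."""
    rows, cols = len(tree), len(tree[0])
    out = [row[:] for row in tree]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if tree[r][c] or seen[r][c]:
                continue
            # Breadth-first search collects one 8-connected non-tree group.
            group, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                group.append((y, x))
                for dy, dx in NEIGHBORS_8:
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not tree[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(group) < min_size:
                for y, x in group:
                    out[y][x] = True
    return out


def _window(mask: list[list[bool]], r: int, c: int):
    """Yield the in-bounds values of the 3 x 3 window centred on (r, c)."""
    rows, cols = len(mask), len(mask[0])
    for y in range(max(r - 1, 0), min(r + 2, rows)):
        for x in range(max(c - 1, 0), min(c + 2, cols)):
            yield mask[y][x]


def clump(tree: list[list[bool]]) -> list[list[bool]]:
    """Step 4 (approximation): 3 x 3 closing (dilate, then erode) of the
    non-tree class, which absorbs tree features narrower than the window."""
    rows, cols = len(tree), len(tree[0])
    other = [[not v for v in row] for row in tree]
    dilated = [[any(_window(other, r, c)) for c in range(cols)] for r in range(rows)]
    closed = [[all(_window(dilated, r, c)) for c in range(cols)] for r in range(rows)]
    return [[not v for v in row] for row in closed]


def apply_mask(image: list[list[float]], tree: list[list[bool]], fill=None):
    """Step 5: replace tree pixels with `fill`, keeping all other land covers."""
    return [[fill if t else v for v, t in zip(img_row, tree_row)]
            for img_row, tree_row in zip(image, tree)]


if __name__ == "__main__":
    # Hypothetical 5 x 5 FCI values: bright non-tree field on top, darker trees
    # below, with one bright speck inside the trees.
    fci = [[0.9] * 5, [0.9] * 5, [0.1] * 5, [0.1, 0.1, 0.5, 0.1, 0.1], [0.1] * 5]
    trees = clump(sieve(threshold_trees(fci, 0.3), min_size=5))
    for row in apply_mask(fci, trees):
        print(row)
```

In the demo, the single bright pixel inside the tree area forms a one-pixel non-tree group. The sieve reassigns it to tree; it runs with a hypothetical `min_size=5` because the example raster is tiny. The masked output then replaces every tree pixel with `None` and keeps the two non-tree rows.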

Accuracy Assessment

We selected 66 regions of interest (ROIs) of various sizes within the study area to assess the accuracy of these indices for separating trees from a broad range of other vegetative land covers in each season of the year (Table 2). Approximately 100,000 pixels were included in these 66 ROIs; however, the number varied from date to date because of view angles and clouds. The ‘Tree’ category included 10 conifer and 10 deciduous ROIs. The ‘Not Tree’ category included 30 ROIs of annual crops (alfalfa, barley, corn, orchardgrass, rye, ryegrass, soybean, turf grass, triticale, and wheat), 10 of perennial grass pastures, and 6 of golf courses.

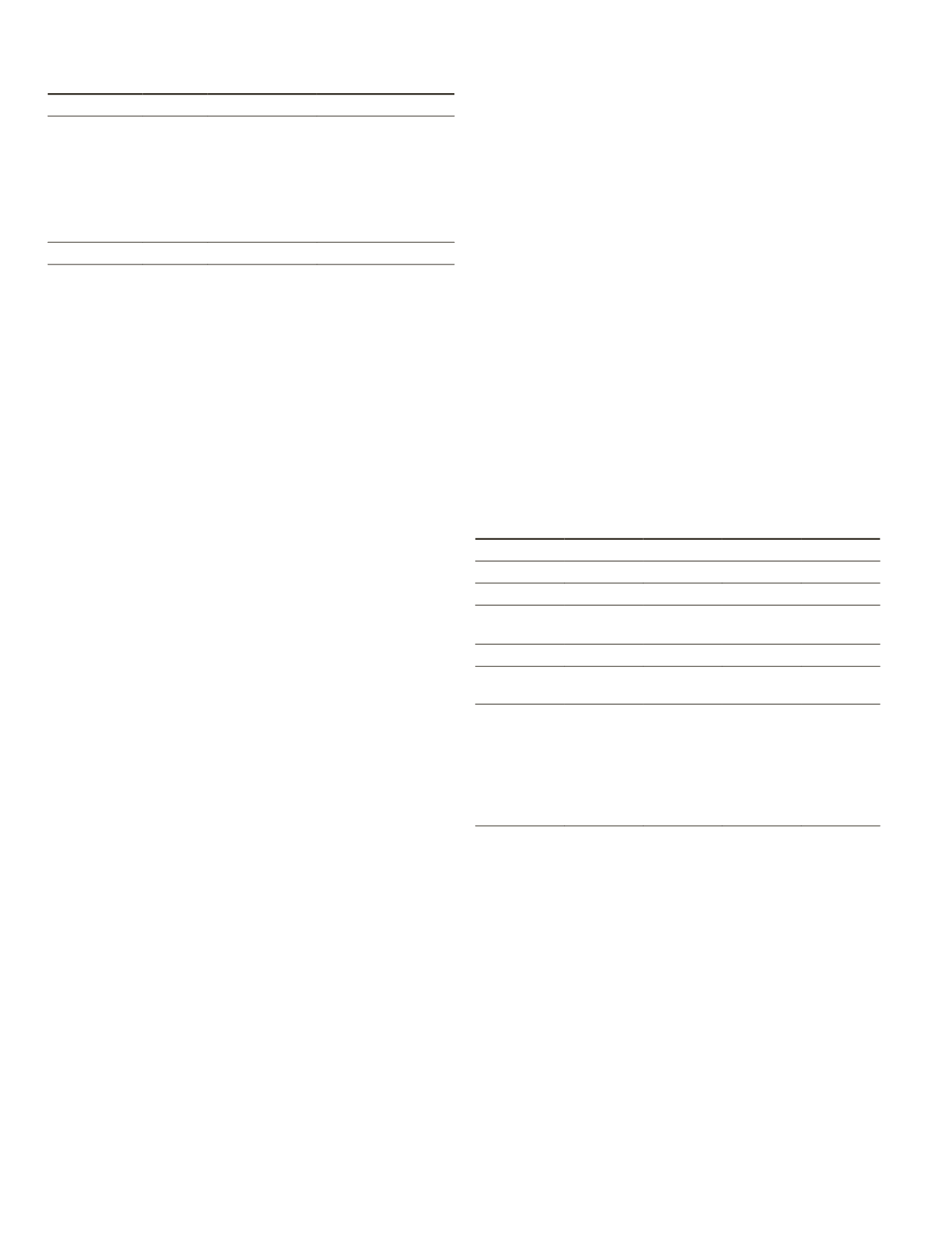

Table 2. Categories and number of ROIs selected for each tree type and other vegetative land cover.

| Category | ROI type | 27-May-12 | 5-Aug-12 | 26-Oct-14 | 18-Jan-13 |
|---|---|---:|---:|---:|---:|
| Tree | Evergreen¹ | 4,513 | 3,992 | 4,301 | 2,790 |
| Tree | Deciduous² | 34,492 | 31,106 | 32,900 | 21,350 |
| Not Tree | Perennial³ | 16,628 | 14,368 | 15,510 | 9,946 |
| Not Tree | Annual⁴ | 46,002 | 41,267 | 43,644 | 28,494 |

Date columns give the number of pixels in the ROIs for each image date.

¹ Evergreen trees are primarily coniferous trees, including shortleaf pines and Virginia pines.

² Deciduous trees are primarily broadleaf trees, including oaks, maples, hickories, poplars, and sweetgums.

³ The perennial vegetation class included cool-season grass pastures, alfalfa, and turf grasses.

⁴ The annual crop class included corn, soybeans, and small grains (barley, oats, rye, triticale, wheat).
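The per-date pixel totals can be checked directly against Table 2. The sketch transcribes the Table 2 counts and sums them by date and by category. The dictionary layout and function names are arbitrary, hypothetical choices.

```python
# Pixel counts transcribed from Table 2, keyed by (category, ROI type).
DATES = ["27-May-12", "5-Aug-12", "26-Oct-14", "18-Jan-13"]
PIXELS = {
    ("Tree", "Evergreen"): [4513, 3992, 4301, 2790],
    ("Tree", "Deciduous"): [34492, 31106, 32900, 21350],
    ("Not Tree", "Perennial"): [16628, 14368, 15510, 9946],
    ("Not Tree", "Annual"): [46002, 41267, 43644, 28494],
}


def totals_by_date(pixels):
    """Sum all ROI types for each image date."""
    return {date: sum(counts[i] for counts in pixels.values())
            for i, date in enumerate(DATES)}


def totals_by_category(pixels):
    """Sum ROI types within each category ("Tree", "Not Tree") for each date."""
    totals = {}
    for (category, _roi_type), counts in pixels.items():
        running = totals.setdefault(category, [0] * len(DATES))
        for i, count in enumerate(counts):
            running[i] += count
    return totals


if __name__ == "__main__":
    by_category = totals_by_category(PIXELS)
    for i, (date, total) in enumerate(totals_by_date(PIXELS).items()):
        print(f"{date}: {total:,} pixels "
              f"(Tree {by_category['Tree'][i]:,}, Not Tree {by_category['Not Tree'][i]:,})")
```

Summing the four rows gives the following per-date totals:

- 27-May-12: 101,635 pixels
- 5-Aug-12: 90,733 pixels
- 26-Oct-14: 96,355 pixels
- 18-Jan-13: 62,580 pixels

The January image therefore contributes noticeably fewer ROI pixels than the other three dates.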

The accuracy assessment compared tree pixels with other vegetation pixels, which were recoded to “Not Tree.” The USDA maintains records of land uses in the BARC, which provided ground truth for the other vegetation pixels. Error was calculated for FCI1, FCI2, and NDVI using the 66 ROIs for each of the four dates. User’s accuracy is the probability that a pixel classified into a category in the image actually represents that category on the ground. Producer’s accuracy is the probability that a reference pixel is correctly classified. Overall accuracy is the total number of correctly classified pixels divided by the total number of reference pixels.
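User’s, producer’s, and overall accuracy all come from the same error matrix. The sketch assumes the common convention that rows are the classified (map) categories and columns are the reference categories. Under that convention, user’s accuracy divides each diagonal count by its row total, and producer’s accuracy divides it by its column total. The function name and the 2 × 2 example matrix are hypothetical, not results from this study.

```python
from __future__ import annotations


def accuracies(matrix: list[list[int]]) -> tuple[list[float], list[float], float]:
    """Return (user's, producer's, overall) accuracy for a square error matrix.

    Rows are classified categories; columns are reference categories.
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    row_totals = [sum(matrix[i]) for i in range(k)]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    diagonal = [matrix[i][i] for i in range(k)]
    # NaN marks a category with no classified (or no reference) pixels.
    users = [d / t if t else float("nan") for d, t in zip(diagonal, row_totals)]
    producers = [d / t if t else float("nan") for d, t in zip(diagonal, col_totals)]
    overall = sum(diagonal) / n
    return users, producers, overall


if __name__ == "__main__":
    # Hypothetical counts; category order is ["Tree", "Not Tree"].
    matrix = [[80, 10],
              [20, 90]]
    users, producers, overall = accuracies(matrix)
    print("user's:", [round(u, 3) for u in users])
    print("producer's:", [round(p, 3) for p in producers])
    print("overall:", round(overall, 3))
```

For this matrix:

- User’s accuracy for Tree is about 0.889: 80 of the 90 pixels classified as Tree are Tree in the reference data.
- Producer’s accuracy for Tree is 0.8: 80 of the 100 reference Tree pixels are classified correctly.
- Overall accuracy is 0.85: 170 of 200 pixels are correct.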

KHAT compares the actual agreement between the computer classification of land cover and the reference data with the chance agreement between the computer classification and the reference data (Congalton et al., 1983). The KHAT statistic, its variance, and the Z-statistic for a single error matrix were computed to determine whether agreement between the remote sensing classification and the surface reference data was significantly better than random (Congalton and Green, 2008). Non-vegetative ROIs were not selected.
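KHAT and its significance test can also be computed from a single error matrix. The sketch has three parts, each an assumption rather than a confirmed detail of the authors' method:

- It computes $\hat{K} = (\theta_1 - \theta_2)/(1 - \theta_2)$, where $\theta_1$ is observed agreement and $\theta_2$ is chance agreement derived from the row and column totals.
- It uses the large-sample (delta-method) variance approximation commonly given in accuracy-assessment texts such as Congalton and Green (2008). We assume, but this section does not confirm, that this is the formulation the authors used.
- It computes $Z = \hat{K}/\sqrt{\widehat{\mathrm{var}}(\hat{K})}$.

Rows are classified categories and columns are reference categories. The function name `khat_z` and the example matrix are hypothetical.

```python
import math


def khat_z(matrix):
    """Return (KHAT, variance of KHAT, Z) for a square error matrix.

    Rows are classified categories; columns are reference categories.
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    row = [sum(matrix[i]) for i in range(k)]                       # n_i+
    col = [sum(matrix[i][j] for i in range(k)) for j in range(k)]  # n_+j

    theta1 = sum(matrix[i][i] for i in range(k)) / n              # observed agreement
    theta2 = sum(row[i] * col[i] for i in range(k)) / n ** 2      # chance agreement
    theta3 = sum(matrix[i][i] * (row[i] + col[i]) for i in range(k)) / n ** 2
    theta4 = sum(matrix[i][j] * (row[j] + col[i]) ** 2
                 for i in range(k) for j in range(k)) / n ** 3

    khat = (theta1 - theta2) / (1 - theta2)
    # Large-sample (delta-method) approximation of var(KHAT).
    variance = (
        theta1 * (1 - theta1) / (1 - theta2) ** 2
        + 2 * (1 - theta1) * (2 * theta1 * theta2 - theta3) / (1 - theta2) ** 3
        + (1 - theta1) ** 2 * (theta4 - 4 * theta2 ** 2) / (1 - theta2) ** 4
    ) / n
    z = khat / math.sqrt(variance)
    return khat, variance, z


if __name__ == "__main__":
    # Hypothetical 2 x 2 matrix; category order is ["Tree", "Not Tree"].
    khat, variance, z = khat_z([[80, 10], [20, 90]])
    # 1.96 is the two-sided critical value at the 95% confidence level.
    print(f"KHAT={khat:.3f}, var={variance:.6f}, Z={z:.2f}, significant={z > 1.96}")
```

For this hypothetical matrix, KHAT = (0.85 − 0.5)/(1 − 0.5) = 0.70. The variance is about 0.0025, and Z is far above 1.96.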

508

August 2018

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING