estimation, non-maximum suppression, and hysteresis thresholding) and can detect weak edges without introducing spurious edges (Li et al., 2010). For edges detected using the EDSION, a local re-weight strategy (Arbelaez et al., 2009) is used to detect the local maximum near the endpoints of the edges until the edge intersects with a neighborhood edge. Finally, the closed edges are obtained to form segments.

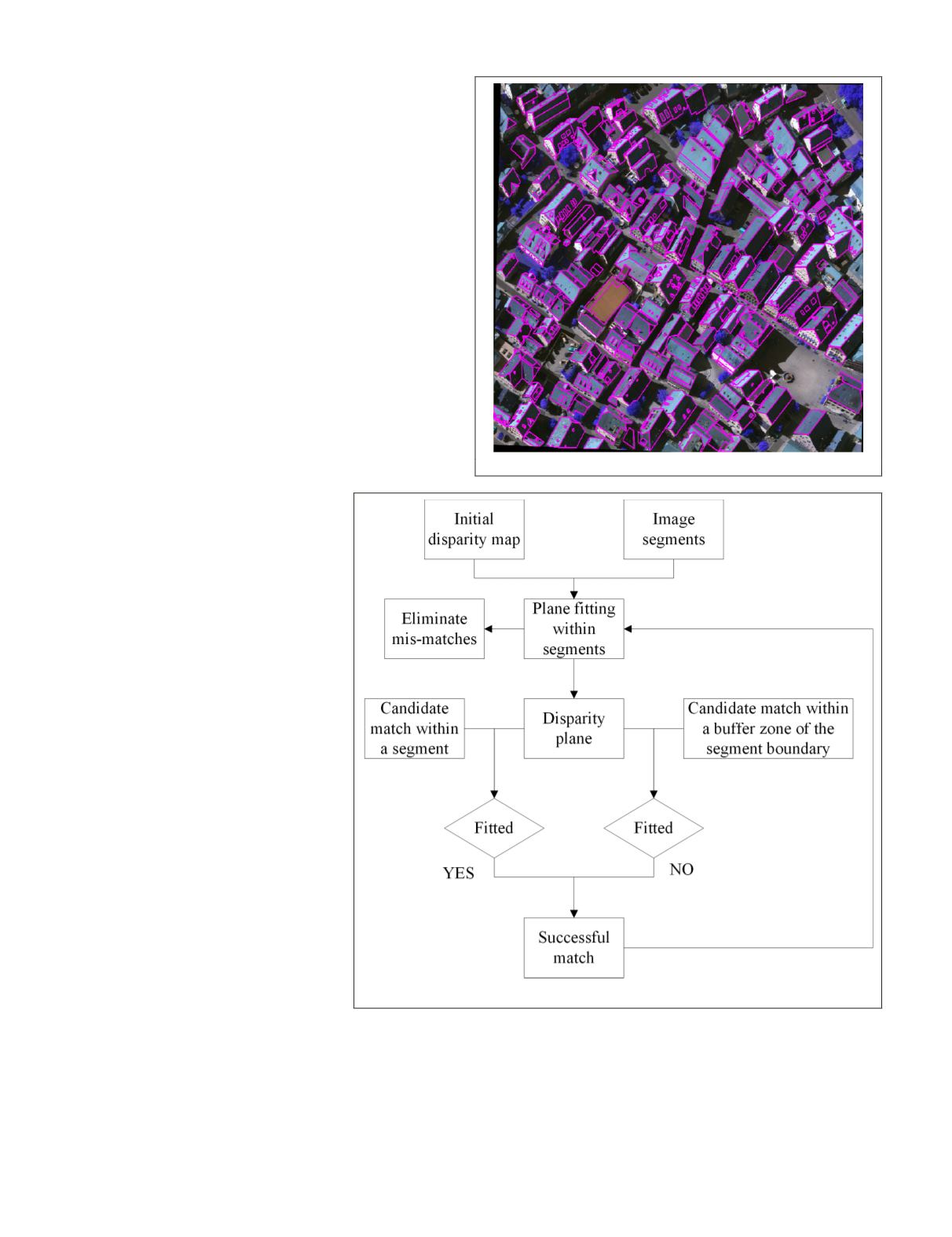

However, our extensive experiments with urban images suggest that the exclusive use of the above method leads to significant over-segmentation problems due to the complex textures in urban images. Therefore, a region growing algorithm (Felzenszwalb and Huttenlocher, 2004) is used to reduce over-segmentation by merging and growing regions with similar properties.
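The merging step can be illustrated with a minimal sketch. It is not the Felzenszwalb and Huttenlocher (2004) algorithm itself; it is a simplified stand-in that merges adjacent regions whose mean intensities differ by no more than a threshold, using a union-find structure. The function name `merge_similar_regions`, the use of mean intensity as the region property, and the threshold `max_diff` are assumptions made for illustration only.

```python
def merge_similar_regions(means, adjacency, max_diff):
    """Merge adjacent regions with similar mean intensity (illustrative only).

    means     -- mean intensity of each initial region, indexed by region id
    adjacency -- list of (a, b) pairs of region ids that share a boundary
    max_diff  -- hypothetical similarity threshold on the mean intensities
    Returns one merged label per initial region.
    """
    parent = list(range(len(means)))

    def find(i):
        # Follow parent links to the representative, shortening the path.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # Visit the most similar neighbouring pairs first.
    for a, b in sorted(adjacency, key=lambda e: abs(means[e[0]] - means[e[1]])):
        ra, rb = find(a), find(b)
        # Simplification: compares the original region means, not updated ones.
        if ra != rb and abs(means[a] - means[b]) <= max_diff:
            parent[rb] = ra

    return [find(i) for i in range(len(means))]


# Five over-segmented regions: three roof-like (about 10), two facade-like (about 50).
labels = merge_similar_regions(
    means=[10, 12, 50, 52, 11],
    adjacency=[(0, 1), (1, 2), (2, 3), (1, 4)],
    max_diff=5,
)
```

In this toy input, regions 0, 1, and 4 collapse into one segment and regions 2 and 3 into another, while the large intensity step between regions 1 and 2 keeps the two groups apart.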

Notably, image segmentation is not the emphasis of this research; therefore, well-known segmentation algorithms were used. It might be necessary to interactively edit the automatically obtained segmentation results to ensure reliability and effectiveness. Figure 2 presents an example of the segmentation results.

Occlusion Filtering

In stereo vision, the occlusion problem refers to the visibility of some parts of the scene by one camera but not the other camera as a consequence of scene and camera geometries. Urban areas contain many skyscrapers and tall buildings that present opportunities for occlusion (e.g., object self-occlusion and occlusion between multiple objects) (Xing et al., 2009). To reduce the ambiguities caused by occlusion problems, we propose an occlusion filter in the image matching framework based on previous image segmentation results.

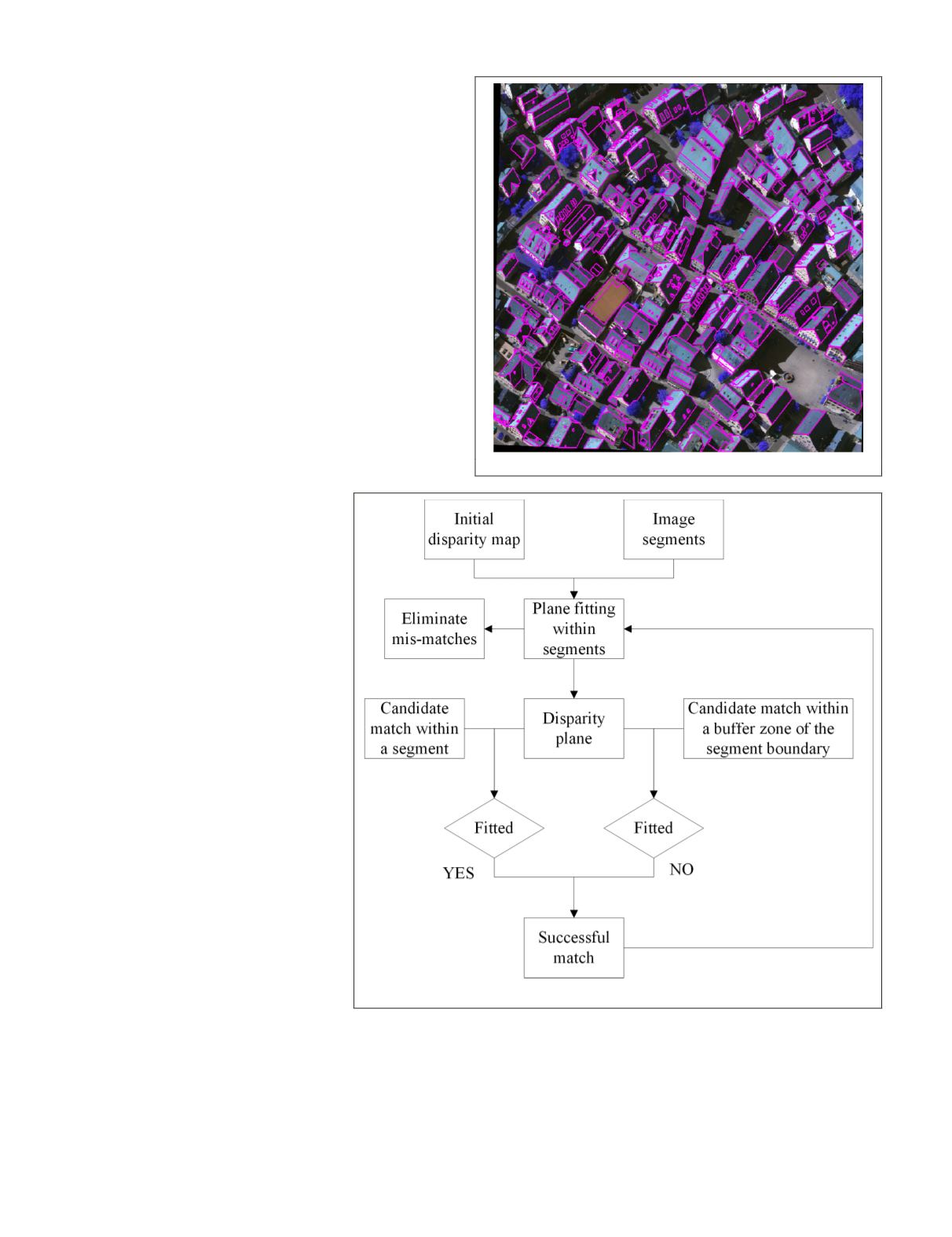

Occlusion filtering assumes that disparities will change smoothly within the same plane (either a building roof or facade), but will change suddenly when the image point changes from a facade location to a roof and vice versa. Therefore, two scenarios are considered specifically: (1) candidate match within a segment, and (2) candidate match outside a segment but within a buffer zone of the segment boundary. Figure 3 shows a flowchart of the occlusion filtering method.
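As a hedged illustration of how the two scenarios might be told apart, the sketch below classifies an image location against a binary segment mask. The function name `classify_location`, the row-major mask layout (`mask[v][u]`), the square (Chebyshev) buffer, and the buffer width `buffer_px` are assumptions for illustration; the source does not specify how the buffer zone is constructed.

```python
def classify_location(mask, u, v, buffer_px):
    """Classify pixel (u, v) relative to one segment (illustrative only).

    mask      -- 2D list of booleans, mask[v][u] is True inside the segment
    buffer_px -- hypothetical buffer width, in pixels, around the boundary
    Returns "inside", "buffer", or "outside".
    """
    rows, cols = len(mask), len(mask[0])
    if mask[v][u]:
        return "inside"            # scenario 1: candidate within the segment
    # Scenario 2: outside the segment, but a segment pixel lies within the buffer.
    for dv in range(-buffer_px, buffer_px + 1):
        for du in range(-buffer_px, buffer_px + 1):
            r, c = v + dv, u + du
            if 0 <= r < rows and 0 <= c < cols and mask[r][c]:
                return "buffer"
    return "outside"               # neither scenario applies


# A 5 x 7 image whose three left-most columns belong to the segment.
mask = [[c < 3 for c in range(7)] for _ in range(5)]
inside = classify_location(mask, u=1, v=2, buffer_px=1)
near = classify_location(mask, u=3, v=2, buffer_px=1)
far = classify_location(mask, u=5, v=2, buffer_px=1)
```

Here the first location falls within the segment, the second lies one pixel beyond its boundary and so falls in the buffer zone, and the third is outside both.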

An initial disparity map is generated based on feature matching results obtained during the previous step. The image segmentation results and the RANSAC algorithm (Fischler and Bolles, 1981) are used to fit the disparities $D$ and the image coordinates $(u, v)$ within each segment, using a robust plane model (e.g., for the building roof) or a quadratic surface model $D = S(u, v)$ (e.g., for building facades). From the fitted model, each pixel $(u_p, v_p)$ within the segment can be assigned an estimated disparity $D^S_P = S(u_p, v_p)$ based on interpolation.

For the first scenario of candidate match within the segment, if the disparity from the candidate match $D^C_P = S(u'_p, v'_p)$ is within a threshold range of the interpolated disparity $D^S_P$, the match is considered successful. A disparity beyond the threshold range suggests a mismatch, possibly due to occlusion, and the match should be excluded. For the second scenario of candidate match outside a segment but within a buffer zone of the segment boundary, the disparity of the candidate match $D^C_P$ should be outside the threshold range, and a failure to meet this criterion may also indicate a mismatch.

The newly matched point $(u_p, v_p, D^C_P)$ will be collocated, used to re-fit the plane dynamically by RANSAC, and inserted into the triangulations dynamically to ensure that the plane will gradually approach the real situation. Notably, occlusion filtering sets a loose constraint on earlier stages of matching propagation when the smaller number of matched points reduces the accuracies of the disparity planes. These constraints tighten as the number of matched points increases during the later stages of the matching propagation.
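The filtering logic described above can be sketched as follows, under several stated assumptions. Only the plane case $D = au + bv + c$ is shown; the quadratic surface model is omitted. The RANSAC loop is a bare minimal-sample version without a final least-squares refit. The location labels `"inside"` and `"buffer"`, the linear loose-to-tight schedule in `tolerance`, and all numeric defaults are hypothetical, since the source gives no threshold values.

```python
import random


def plane_through(p1, p2, p3):
    """Return (a, b, c) of D = a*u + b*v + c through three (u, v, D) points."""
    (u1, v1, d1), (u2, v2, d2), (u3, v3, d3) = p1, p2, p3
    det = (u2 - u1) * (v3 - v1) - (u3 - u1) * (v2 - v1)
    if abs(det) < 1e-12:               # collinear sample: no unique plane
        return None
    a = ((d2 - d1) * (v3 - v1) - (d3 - d1) * (v2 - v1)) / det
    b = ((u2 - u1) * (d3 - d1) - (u3 - u1) * (d2 - d1)) / det
    return a, b, d1 - a * u1 - b * v1


def ransac_plane(points, inlier_tol=1.0, iterations=200, seed=0):
    """Keep the sampled plane that explains the most (u, v, D) points."""
    rng = random.Random(seed)
    best, best_count = None, -1
    for _ in range(iterations):
        model = plane_through(*rng.sample(points, 3))
        if model is None:
            continue
        a, b, c = model
        count = sum(abs(a * u + b * v + c - d) <= inlier_tol for u, v, d in points)
        if count > best_count:
            best, best_count = model, count
    return best


def tolerance(n_matched, loose=3.0, tight=1.0, n_full=500):
    """Hypothetical schedule: threshold shrinks linearly as matches accumulate."""
    frac = min(n_matched / n_full, 1.0)
    return loose + (tight - loose) * frac


def accept_candidate(model, u, v, d_candidate, location, tol):
    """Apply the two-scenario rule to one candidate disparity."""
    a, b, c = model
    within = abs(d_candidate - (a * u + b * v + c)) <= tol
    if location == "inside":           # scenario 1: must agree with the plane
        return within
    if location == "buffer":           # scenario 2: must disagree with the plane
        return not within
    return True                        # assumption: filter not applied elsewhere


# Synthetic roof plane D = 0.5u + 0.2v + 10 with three gross outliers.
points = [(u, v, 0.5 * u + 0.2 * v + 10.0) for u in range(10) for v in range(10)]
points += [(2, 3, 40.0), (7, 1, -5.0), (5, 5, 60.0)]
model = ransac_plane(points)
tol = tolerance(n_matched=len(points))
ok = accept_candidate(model, 4, 4, 12.9, "inside", tol)
```

In a propagation loop, each accepted point would be appended to `points` and `ransac_plane` called again, so that the plane and the threshold from `tolerance` are both updated as matching proceeds.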

Figure 2. Example of an image segmentation result.

Figure 3. Flowchart of occlusion filtering.

March 2018

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING