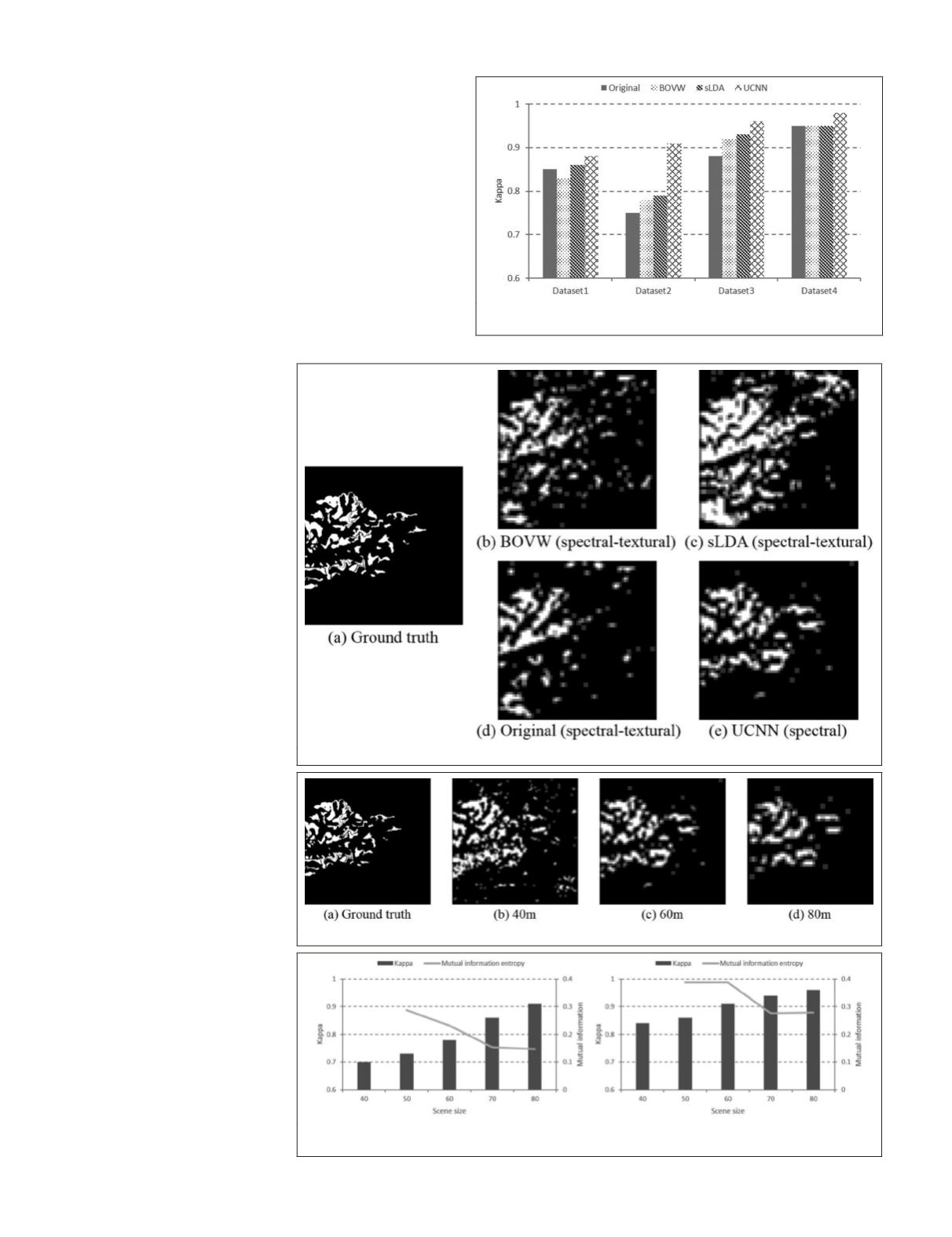

Figure 11. Comparison between the classification maps of the different models in dataset 2.

Figure 12. Comparison between the classification maps of different scene sizes in dataset 2.

Figure 13. Comparison of the Kappa values and mutual information entropy between different scene sizes in dataset 2.

entropy sharply increases from 60 m to 70 m. Therefore, in this study, we selected 60 m as an appropriate scene size to balance detection accuracy against mapping detail.
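The Kappa values compared across scene sizes in Figure 13 (and across models in Figure 10) summarize agreement between the classification map and the reference data. A minimal sketch of the standard Cohen's kappa computed from a confusion matrix is given below; the function name and the row/column convention are illustrative assumptions, not the authors' code.

```python
from typing import Sequence


def cohen_kappa(confusion: Sequence[Sequence[int]]) -> float:
    """Cohen's kappa for a square confusion matrix.

    Assumed convention: rows are reference classes, columns are predicted
    classes. Kappa is unchanged if the matrix is transposed.
    """
    n = len(confusion)
    if n == 0 or any(len(row) != n for row in confusion):
        raise ValueError("confusion matrix must be square and non-empty")
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")

    # Observed agreement: fraction of samples on the diagonal.
    p_o = sum(confusion[i][i] for i in range(n)) / total

    # Chance agreement from the row (reference) and column (predicted) marginals.
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    p_e = sum(r * c for r, c in zip(row_sums, col_sums)) / (total * total)

    # p_e == 1 only when every sample falls in one cell, so agreement is perfect.
    if p_e == 1.0:
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


if __name__ == "__main__":
    # Hypothetical two-class matrix (tea garden vs. other), not data from this study.
    print(cohen_kappa([[45, 5], [10, 40]]))
```

Evaluating such a function on the confusion matrix obtained at each candidate scene size yields one Kappa value per size; the choice of 60 m above also weighed the entropy curve, which this sketch does not compute.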

Conclusions

In this paper, we have proposed a scene-based framework to effectively detect tea gardens in high-resolution remotely sensed imagery. The proposed framework incorporates three scene classification models: the bag-of-visual-words model, supervised latent Dirichlet allocation, and the unsupervised convolutional neural network (UCNN). These models achieved high accuracy and produced fine classification maps in our study area. The discussion and comparison showed that unsupervised feature learning based on the UCNN outperformed the other models in both the accuracy assessment and the visual results. Furthermore, adding textural features significantly improved the performance of the topic models but had no effect on the performance of the UCNN, since this model can automatically and adequately extract discriminative features from the raw images.
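The bag-of-visual-words model mentioned above represents each scene as a histogram of visual-word occurrences over a learned codebook. The following is a minimal sketch of that generic encoding, assuming local descriptors have already been extracted for each scene; the k-means codebook, hard assignment, L1 normalization, and all function names are illustrative assumptions rather than the configuration used in this study.

```python
import random
from typing import List, Sequence

Vector = Sequence[float]


def _sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def _nearest(d: Vector, centers: Sequence[Vector]) -> int:
    """Index of the center closest to descriptor d (first index wins ties)."""
    return min(range(len(centers)), key=lambda j: _sq_dist(d, centers[j]))


def build_codebook(descriptors: List[Vector], k: int,
                   iters: int = 20, seed: int = 0) -> List[List[float]]:
    """Plain k-means (Lloyd's algorithm) over pooled descriptors; each center is one visual word."""
    rng = random.Random(seed)
    centers = [list(d) for d in rng.sample(descriptors, k)]
    for _ in range(iters):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for d in descriptors:
            clusters[_nearest(d, centers)].append(d)
        for j, members in enumerate(clusters):
            if members:  # keep the previous center if a cluster becomes empty
                dim = len(members[0])
                centers[j] = [sum(m[i] for m in members) / len(members) for i in range(dim)]
    return centers


def bovw_histogram(scene_descriptors: List[Vector],
                   codebook: Sequence[Vector]) -> List[float]:
    """Hard-assign each descriptor of one scene to its nearest visual word; return L1-normalized counts."""
    counts = [0.0] * len(codebook)
    for d in scene_descriptors:
        counts[_nearest(d, codebook)] += 1.0
    total = sum(counts)
    return [c / total for c in counts] if total else counts


if __name__ == "__main__":
    # Toy 2-D "descriptors" forming two tight groups (hypothetical data).
    pooled = [(0, 0), (0.1, 0), (0, 0.1), (5, 5), (5.1, 5), (5, 5.1)]
    words = build_codebook(pooled, k=2)
    print(bovw_histogram([(0.05, 0.05), (5, 5), (5, 5)], words))
```

In a BoVW pipeline, these per-scene histograms would then be passed to a classifier or, for supervised latent Dirichlet allocation, treated as word counts for the topic model.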

To the best of our knowledge, this is the first study of tea garden detection using remotely sensed imagery. Since tea is the main cash crop in rural areas of China, particularly in the mountain villages of the southern provinces, detecting and monitoring tea gardens is important for assessing and guiding economic development in these areas. In the future, we plan to apply the proposed tea garden detection framework at a larger scale for tea cultivation and economic assessment.

Acknowledgments

The research was supported by the National Natural Science Foundation of China under Grants 41522110 and 41771360, the Hubei Provincial Natural Science Foundation of China under Grant 2017CFA029, and the National Key Research and Development Program of China under Grant 2016YFB0501403.

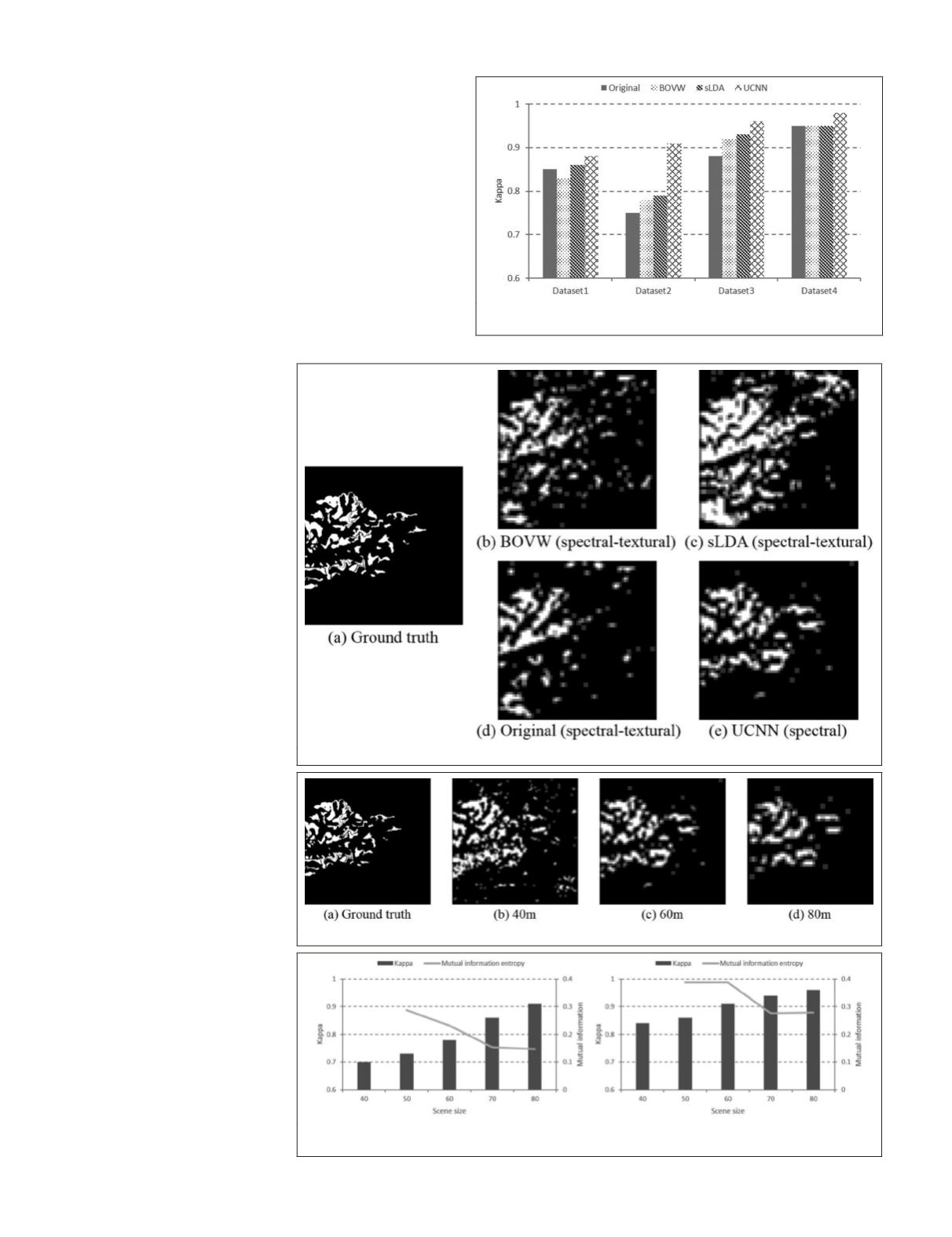

Figure 10. Comparison between the Kappa values of the different models.

730

November 2018

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING