scene classification (Chong

et al.

, 2009; Putthividhy

et al.

,

2010; Huang

et al.

, 2015).

However, the

BOVW

and topic models rely on the estab-

lishment of empirically designed features to depict the local

patches of the images as visual words. In order to overcome

this limitation, an increasing amount of research has focused

on unsupervised machine learning methods to autonomously

extract adaptive and suitable features from unlabeled input

data. For example, Coates

et al.

(2010) built a single-layer

UCNN

for unsupervised feature learning. They used different

unsupervised learning algorithms to generate local convolu-

tional features (i.e., function bases), and found that

k

-means

clustering, which is an extremely simple learning algorithm,

achieved the best performance. Blum

et al.

(2012) applied the

network proposed in Coates

et al.

(2010) to object recognition

from natural images with depth information. Dosovitskiy

et

al.

(2014) developed a multi-layer

UCNN

to learn feature rep-

resentations from unlabeled images, and the learned features

performed well in natural image classification. Recently,

in order to classify remotely sensed scenes, Li

et al.

(2016)

trained a multi-layer

UCNN

using

k

-means clustering to au-

tonomously mine complex structure features from high-reso-

lution images, and used support vector machine (

SVM

) for the

final scene classification. In this study, the features extracted

by the

UCNN

achieved a better scene classification accuracy

than

BOVW

and sparse coding.

To the best of our knowledge, little research has been

so performed concerning tea garden detection from remote

sensing data. However, this is necessary since tea cultivation

plays an important part in Chinese agriculture, but the current

tea garden monitoring relies on field investigation, which is

time-consuming and labor-intensive. In this context, in the

proposed scene-based framework, high-resolution satellite

images are employed to detect tea gardens, since these im-

ages can provide abundant spatial and textural information.

Considering that a tea garden is a semantic scene composed

of a variety of interrelated objects in a high-resolution image,

we propose to apply scene-based semantic learning methods

for tea garden detection, including the following experimental

configurations: (1)

BOVW

is used to represent the scenes with

spectral and Gabor textural features. An

SVM

classifier is then

employed to classify the representation into tea gardens and

non-tea gardens; (2)

sLDA

is used to extract the topic features

from the

BOVW

representation of the scenes and predict the cat-

egory label of each scene; and 3) A multi-layer

UCNN

is trained

to generate discriminative features from the original spectral

images, and the derived features are also classified by

SVM

.

The rest of this paper is organized as follows. The next sec-

tion introduces the tea garden detection framework, followed

by a description of the datasets and the experimental setup.

The next section presents the detection results and discus-

sion with the different methods and features. The last section

concludes the paper.

Methodology

In this section, we introduce the scene classification meth-

ods employed in this study (i.e.,

BOVW

,

sLDA

, and the

UCNN

).

Subsequently, the proposed scene-based tea garden detection

framework is described in detail.

Topic Scene Classification Models

BOVW

is the basis of the topic models, and thus it is pre-

sented before introducing the

sLDA

model. The

BOVW

model

was derived from a text analysis method which represents

a document by the word frequencies, ignoring their order.

The idea was then applied to images by utilizing the visual

words formed by vector quantizing the visual features. The

BOVW

representation is constructed in two stages, as shown in

Figure 2, i.e., visual word learning and feature encoding. Dur-

ing the visual word learning, the remotely sensed images are

divided into patches, and the spectral or textural information

of these patches is extracted to generate feature vectors which

can describe the patches. We then quantify the spectral and

textural descriptors using the k-means clustering algorithm.

The cluster centers, which are known as “visual words”,

form a dictionary. In the feature encoding, an unrepresented

scene is split into several patches. Each patch is assigned to

the label of the closest cluster center after extracting features

of the patch. In this way, an image can be represented by a fre-

quency histogram of the labeled patches. The histogram can

be regarded as a feature vector for the subsequent classifica-

tion, whose size is equal to the size of the dictionary.

The

BOVW

model represents a scene as a text document by

the frequencies of the visual words. The

LDA

model (Blei

et

al.

, 2003), which is a generative probabilistic model from the

statistical text literature, characterizes the scene as random

mixtures over latent topics, where each topic in turn is de-

scribed by a distribution over the visual words in the dic-

tionary. The process of

LDA

to generate a scene

d

can then be

described as follows (as shown in Figure 3):

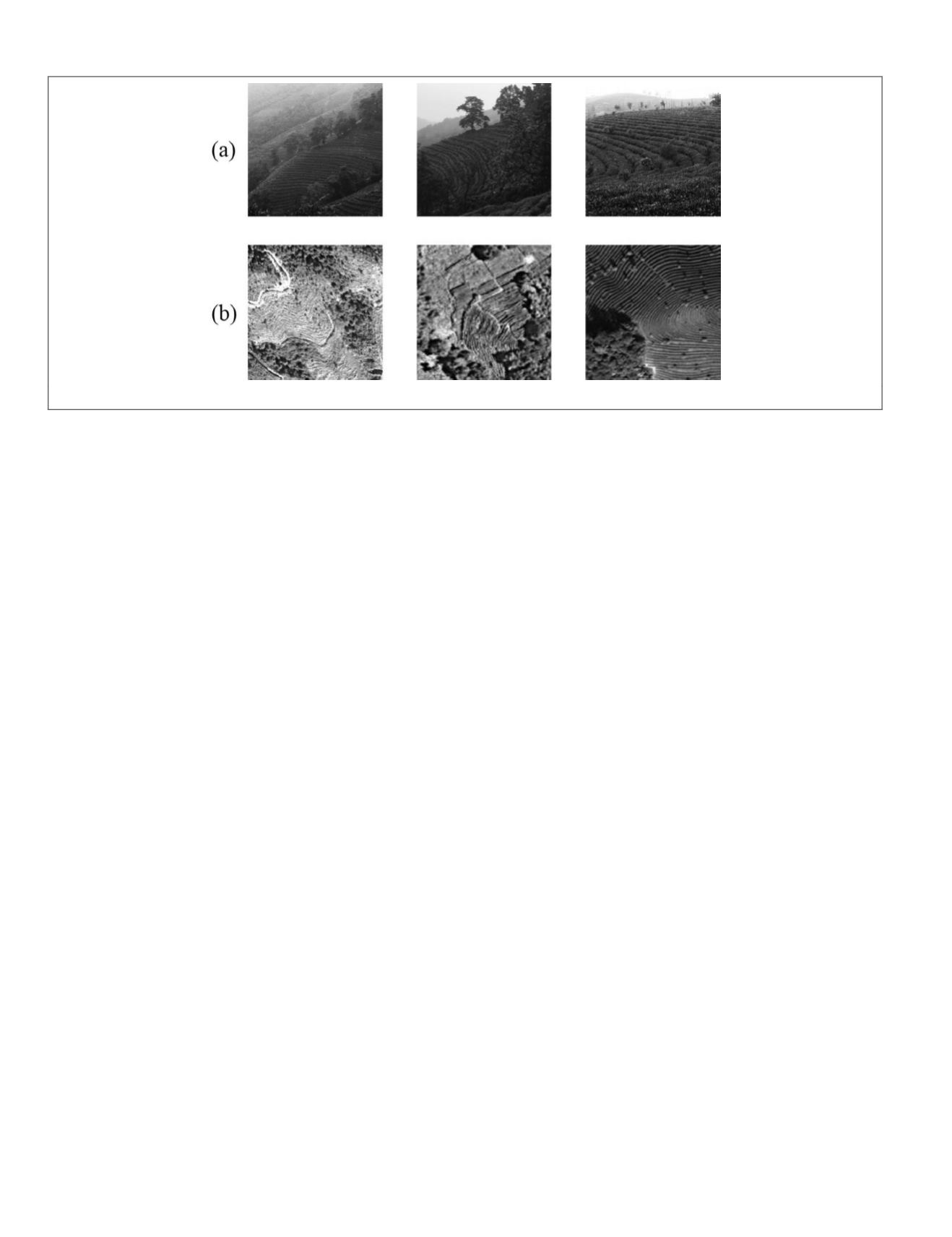

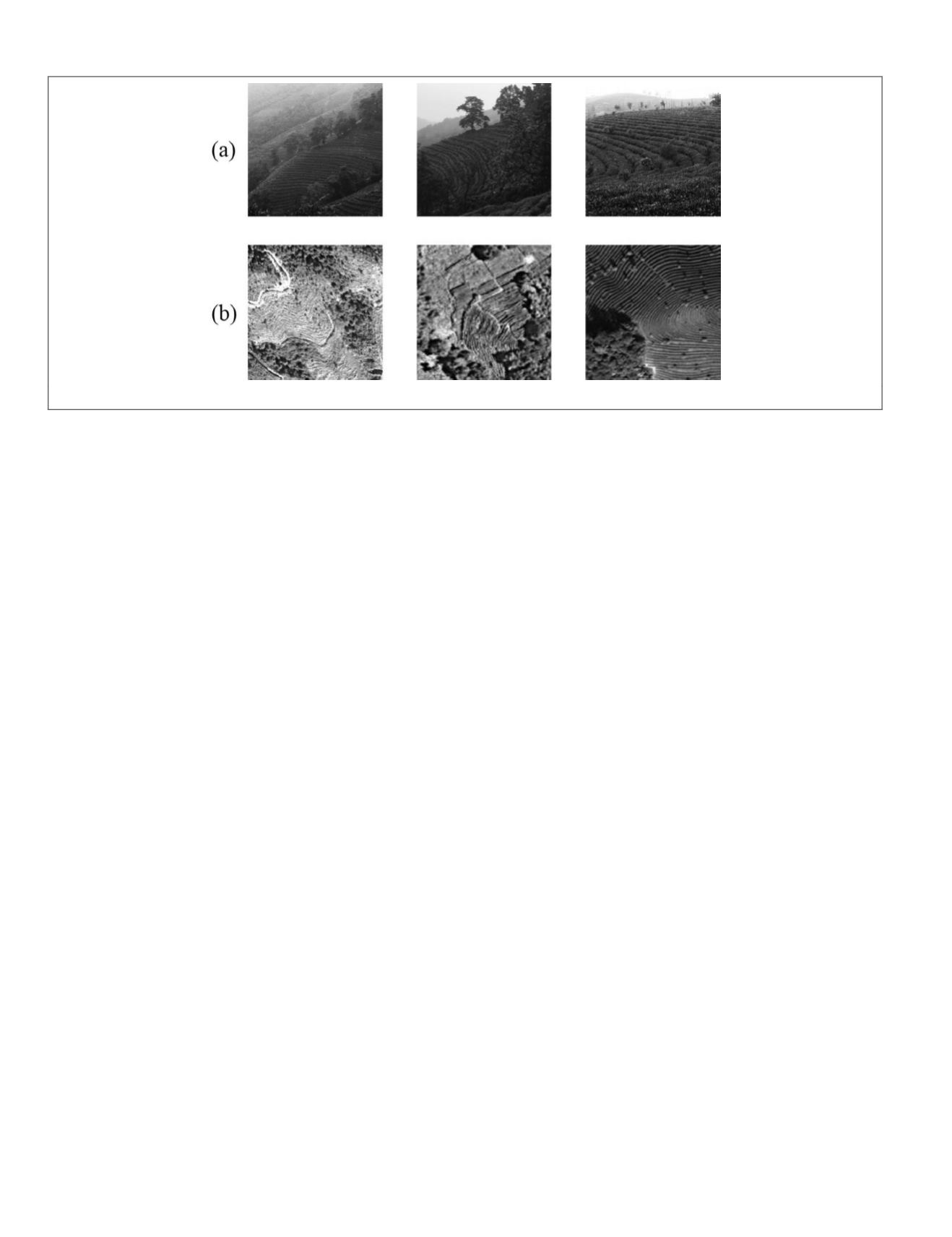

Figure 1. Examples of tea gardens: (a) Digital camera photographs, and (b) Google Earth images.

724

November 2018

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING