block, there are three branches. The right branch contains a 3×3 and a 1×1 depthwise separable convolutional layer. A depthwise separable convolution, proposed by Howard et al. (2017), consists of a depthwise convolution followed by a 1×1 standard convolution. Depthwise convolution has fewer parameters than standard convolution, and it is calculated as follows:

$$G_{k,l,m} = \sum_{i,j} K_{i,j,m} \cdot F_{k+i-1,\,l+j-1,\,m} \tag{1}$$

where $F_{k+i-1,\,l+j-1,\,m}$ represents the datum located at $(k+i-1,\ l+j-1)$ of the $m$th input feature map, $K_{i,j,m}$ is the parameter located at $(i,\ j)$ of the $m$th convolutional kernel, and $G_{k,l,m}$ is the datum located at $(k,\ l)$ of the $m$th output feature map. The middle branch contains 1×1 and 3×3 standard convolutional layers, which are formulated as:

$$G_{k,l,n} = \sum_{i,j,m} K_{i,j,m,n} \cdot F_{k+i-1,\,l+j-1,\,m} \tag{2}$$

where the variables in Equation 2 have the same meanings as in the depthwise convolution in Equation 1. However, there are M×N convolutional kernels, which require more computation, where M and N are the numbers of channels of the input and output feature maps, respectively. The left branch contains a 1×1 standard convolutional layer that passes the features of the previous layer forward. The three branches are merged by a maxout pooling layer. All convolutional layers are followed by an ELU activation function (Clevert et al. 2015) and a batch normalization layer (Ioffe and Szegedy 2015).
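To make the difference between Equations 1 and 2 concrete, the following is a minimal pure-Python sketch of both operations, together with the parameter counts of a standard and a depthwise separable layer. It is an illustration only, not the authors' implementation: the function names are hypothetical, indices are 0-based (so the $-1$ offsets in the equations disappear), stride is 1, no padding is applied, and bias terms are ignored.

```python
def depthwise_conv(F, K):
    """Equation 1 (0-based): G[k][l][m] = sum_{i,j} K[i][j][m] * F[k+i][l+j][m].

    F is an H x W x M input, K is a kh x kw x M kernel (one filter per channel).
    Assumes stride 1 and no padding.
    """
    H, W, M = len(F), len(F[0]), len(F[0][0])
    kh, kw = len(K), len(K[0])
    return [[[sum(K[i][j][m] * F[k + i][l + j][m]
                  for i in range(kh) for j in range(kw))
              for m in range(M)]
             for l in range(W - kw + 1)]
            for k in range(H - kh + 1)]


def standard_conv(F, K):
    """Equation 2 (0-based): G[k][l][n] = sum_{i,j,m} K[i][j][m][n] * F[k+i][l+j][m].

    F is an H x W x M input, K is a kh x kw x M x N kernel.
    Assumes stride 1 and no padding.
    """
    H, W, M = len(F), len(F[0]), len(F[0][0])
    kh, kw, N = len(K), len(K[0]), len(K[0][0][0])
    return [[[sum(K[i][j][m][n] * F[k + i][l + j][m]
                  for i in range(kh) for j in range(kw) for m in range(M))
              for n in range(N)]
             for l in range(W - kw + 1)]
            for k in range(H - kh + 1)]


def standard_conv_params(k, M, N):
    """Weights in a k x k standard convolution with M input and N output channels."""
    return k * k * M * N


def separable_conv_params(k, M, N):
    """Weights in a k x k depthwise convolution followed by a 1 x 1 standard convolution."""
    return k * k * M + M * N


# Example with assumed channel counts M = 32 and N = 64 (not taken from the paper).
print(standard_conv_params(3, 32, 64), separable_conv_params(3, 32, 64))  # 18432 2336
```

With these assumed channel counts, the separable layer needs roughly one eighth of the weights of the standard layer, which is the saving that the depthwise separable design is meant to provide.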

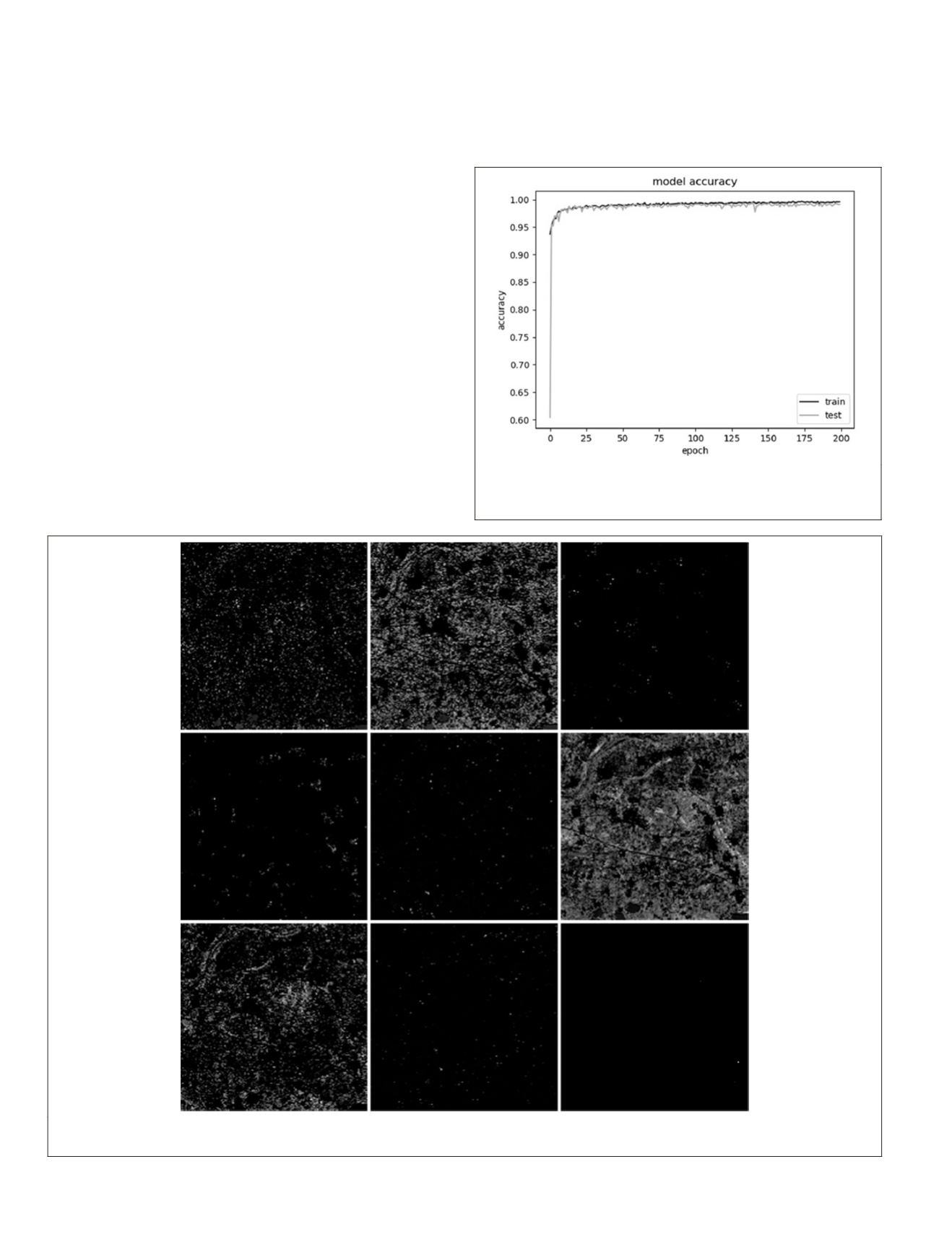

Figure 3. The training process of LMB-CNN. The train curve represents the model accuracy on the training set, and the test curve represents the model accuracy on the validation set.

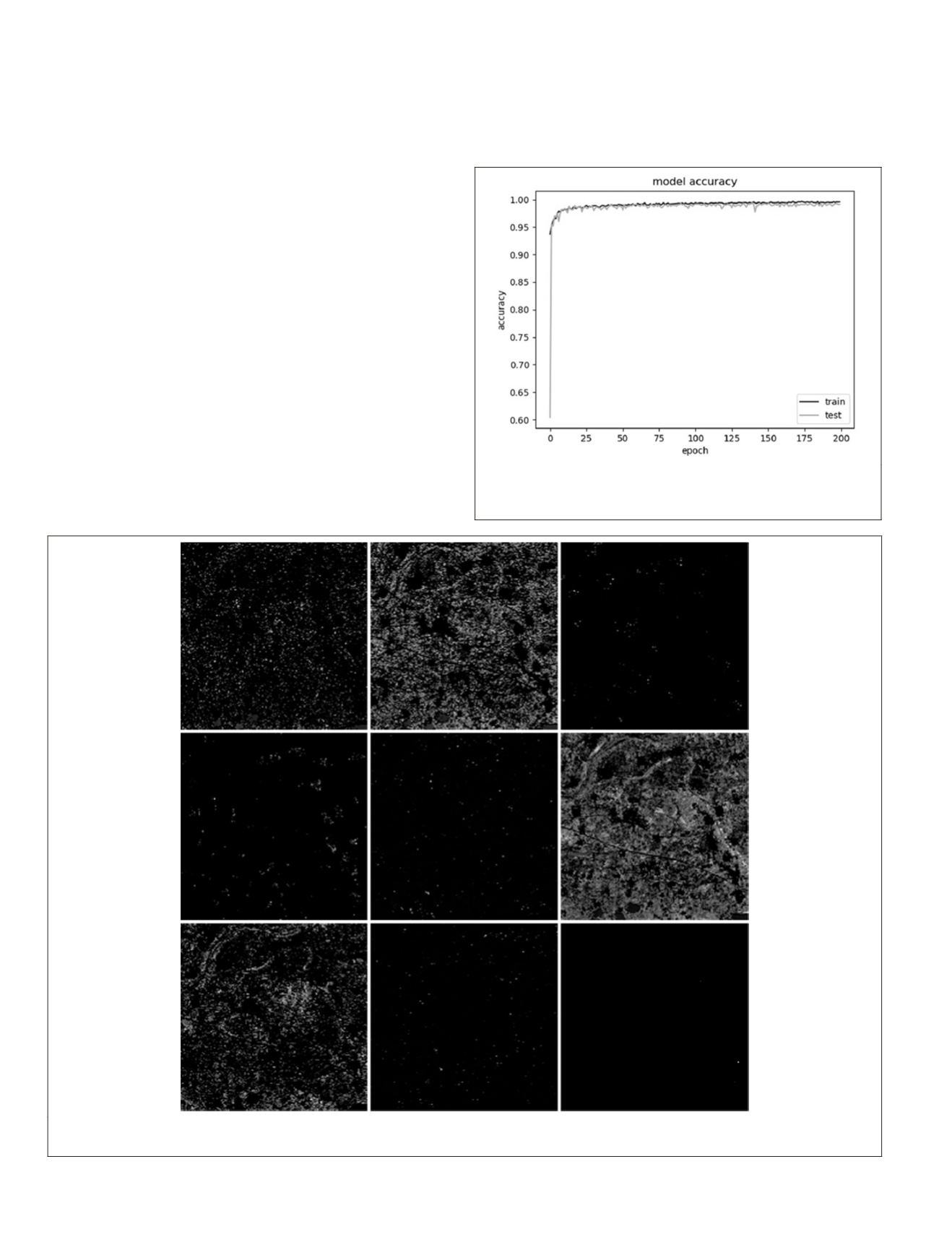

Figure 4. The first nine reconstructed feature maps, each of size 160×160 (corresponding to the sample image with 10 240×10 240 pixels), in which the deep features are extracted by LMB-CNN.

740

October 2019

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING