Figure 8 shows the contours of optical-SAR 2 detected with different Canny thresholds. The contours detected with a Canny threshold of 0.1 are too dense and numerous to reduce the impact of terrain increase/decrease and deformation. By contrast, a Canny threshold of 0.3 yields too few contours to capture the terrain shape features, which makes it difficult to extract a sufficient number of tie points. Therefore, the experiments achieve the highest registration accuracy when the parameters are set to 15×15 pixel template windows with a Canny threshold of 0.2. The remaining experiments use these parameters (including the results shown in Figure 9, Figure 10, Figure 11, and Table 2).

Table 2 shows that the proposed SSSF_ncc has been successfully applied to match all data sets. These tests achieve different registration accuracies owing to differences in terrain and resolution. The registration accuracies of the medium-resolution and map sets are higher than that of the high-resolution set. The results differ this much because the medium-resolution set, with its lower resolution, contains only small local distortions, and the map set data are obtained from simplified real terrain (terrains classified into one class), which also contains small local distortions. The high-resolution sets obtain the lowest registration accuracy owing to the increase/decrease and clear deformation of terrain between the two images. However, the RMSEs of optical-SAR 1 and optical-SAR 2 are 0.97 and 1.11 pixels, respectively, which are acceptable accuracies for the registration of high-resolution images.
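The RMSE values above are expressed in pixels. As a point of reference, a minimal Python sketch of the usual computation follows. It assumes that each residual is the distance between a check point's position predicted by the estimated transformation and its reference position; the function name `rmse` and this evaluation setup are illustrative assumptions, not the paper's evaluation code.

```python
import math


def rmse(predicted, observed):
    """Root-mean-square error, in pixels, between paired (x, y) points.

    predicted: check-point positions mapped by the estimated transformation
    observed:  corresponding reference positions (assumed setup)
    """
    if not predicted or len(predicted) != len(observed):
        raise ValueError("need two non-empty point lists of equal length")
    squared = sum((px - ox) ** 2 + (py - oy) ** 2
                  for (px, py), (ox, oy) in zip(predicted, observed))
    return math.sqrt(squared / len(predicted))


# Two check points, each off by 1 pixel, give an RMSE of 1.0 pixel.
print(rmse([(0.0, 0.0), (1.0, 1.0)], [(1.0, 0.0), (1.0, 2.0)]))
```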

The number of tie points and the CMR differ somewhat among the five tests. Optical-SAR 2 and 4, which contain urban areas, have more terrain features than optical-SAR 1 and 3, which contain large water areas. Therefore, more tie points can be detected in the optical-SAR 2 and 4 images. For the map set, detecting many tie points is difficult owing to the lack of terrain features. The CMRs of all five groups of data are very high. Compared with optical-SAR 1 and 3, the CMRs of optical-SAR 2 and 4 are higher because of the similarity and amount of terrain between the reference and sensed remote sensing images. Consequently, optical-SAR 4 achieves the best registration accuracy. In summary, these registration results show that the SSSF_ncc similarity measure is robust to significant nonlinear grayscale differences among multisource remote sensing images.
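The CMR is commonly computed as the fraction of matches whose residual falls within a tolerance. The sketch below assumes that definition; the 1.5-pixel tolerance and the function name are hypothetical placeholders, since the threshold used for Table 2 is not stated in this section.

```python
def correct_match_rate(residuals, tol=1.5):
    """Return (number of correct matches, CMR).

    residuals: per-match location errors in pixels.
    tol: hypothetical tolerance at or below which a match counts as correct.
    """
    if not residuals:
        return 0, 0.0
    correct = sum(1 for r in residuals if r <= tol)
    return correct, correct / len(residuals)


print(correct_match_rate([0.4, 0.9, 1.2, 3.5]))  # (3, 0.75)
```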

Comparison of SSSF with Other Methods

In this study, the SSSF_ncc similarity measure is used to detect tie points. The proposed SSSF_ncc is compared with NCC, HOPC_ncc, and CSLTP to illustrate its accuracy in matching multisource remote sensing images.
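For reference, NCC compares two equal-sized windows after removing their means and normalizing by their standard deviations. This makes it invariant to a positive linear gain and an offset in intensity, but not to the nonlinear grayscale differences discussed above. A minimal pure-Python sketch (the function name is illustrative):

```python
import math


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-sized windows.

    a, b: 2-D lists (rows of numbers) with identical shape.
    Returns a value in [-1, 1], or 0.0 if either window is constant.
    """
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    if len(xs) != len(ys):
        raise ValueError("windows must have the same size")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = math.sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))
    return num / den if den > 0 else 0.0


a = [[1, 2], [3, 4]]
print(ncc(a, [[2, 4], [6, 8]]))  # 1.0: linear gain leaves NCC unchanged
print(ncc(a, [[4, 3], [2, 1]]))  # -1.0: inverted contrast flips the sign
```

An intensity-inverted copy scores -1.0, which illustrates why raw-intensity NCC struggles when optical and SAR radiometry are not linearly related.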

The histogram of oriented phase congruency (HOPC) (Ye et al. 2017), which is based on shape features, has been successfully used to match multisource remote sensing images. This descriptor first divides the image into several blocks, each comprising several “cell” units. The phase congruency histograms of all cells are computed and concatenated to form the final feature description vector. The basic idea of phase congruency is that image features always appear at the points of maximum phase overlap of the Fourier harmonic components. The correlation coefficient between the feature vectors (HOPC_ncc) is then used to match the correspondence points. However, this descriptor is limited by the structural features of the image and can be time consuming.
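The block/cell aggregation described above can be sketched as follows. This is a simplified, HOG-style illustration, not Ye et al.'s implementation: it assumes the per-pixel phase congruency amplitude `amp` and orientation `ori` (radians in [0, π)) have already been computed, omits the phase congruency computation itself, and omits refinements such as interpolation between bins. The defaults (8 orientation bins, blocks of 3×3 cells of 4×4 pixels, half-block overlap) mirror the HOPC_ncc settings reported in this section; all function names are hypothetical.

```python
import math


def cell_histogram(amp, ori, r0, c0, cell, bins):
    """Amplitude-weighted orientation histogram of one cell.
    Orientations are assumed unsigned, in [0, pi)."""
    hist = [0.0] * bins
    for r in range(r0, r0 + cell):
        for c in range(c0, c0 + cell):
            b = int(ori[r][c] / math.pi * bins) % bins
            hist[b] += amp[r][c]
    return hist


def block_descriptor(amp, ori, cell=4, block=3, bins=8, overlap=0.5):
    """Concatenate cell histograms over overlapping blocks.
    Blocks advance by (1 - overlap) * block width; each block is L2-normalized."""
    h, w = len(amp), len(amp[0])
    bw = cell * block                          # block width in pixels
    step = max(1, int(bw * (1 - overlap)))     # block stride
    desc = []
    for r0 in range(0, h - bw + 1, step):
        for c0 in range(0, w - bw + 1, step):
            vec = []
            for i in range(block):
                for j in range(block):
                    vec += cell_histogram(amp, ori, r0 + i * cell, c0 + j * cell, cell, bins)
            norm = math.sqrt(sum(v * v for v in vec)) or 1.0
            desc += [v / norm for v in vec]
    return desc


def correlation(u, v):
    """Pearson correlation coefficient between two descriptor vectors."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    num = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    den = math.sqrt(sum((a - mu) ** 2 for a in u) * sum((b - mv) ** 2 for b in v))
    return num / den if den > 0 else 0.0


# Toy input: uniform amplitude, orientations cycling through the 8 bins.
amp = [[1.0] * 18 for _ in range(18)]
ori = [[((r + c) % 8) * math.pi / 8 + 0.01 for c in range(18)] for r in range(18)]
desc = block_descriptor(amp, ori)
print(len(desc))  # 288 = 2 x 2 blocks x (3 x 3 cells x 8 bins)
```

With an 18×18 input, the 12-pixel blocks advance by 6 pixels, giving 2×2 blocks of 72 values each; `correlation` then plays the role of the correlation coefficient in HOPC_ncc.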

The main idea of CSLTP, which is based on self-similarity, is a rotation-invariant description strategy on the local correlation surface. The SIFT algorithm is applied for local feature detection, and the CSLTP descriptor is constructed for each extracted feature point. Then, a bilateral matching strategy combined with an outlier removal procedure based on a geometric transformation model is applied for feature registration and mismatch elimination. This method has successfully matched various multisource satellite images, and the results show that it is inherently rotation invariant and robust to complex intensity differences. This study compares SSSF_ncc with HOPC_ncc and CSLTP to illustrate the reliability of the proposed method.
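The bilateral matching step can be read as a mutual nearest-neighbour cross-check. The sketch below assumes that interpretation and a Euclidean descriptor distance; it is not the CSLTP authors' code, the function names are hypothetical, and the geometric outlier-removal stage is not shown.

```python
import math


def nearest(query, candidates):
    """Index of the candidate descriptor closest to `query` (Euclidean)."""
    return min(range(len(candidates)), key=lambda k: math.dist(query, candidates[k]))


def bilateral_match(ref_desc, sen_desc):
    """Mutual nearest-neighbour matching: keep (i, j) only when sensed
    descriptor j is nearest to reference descriptor i AND reference
    descriptor i is nearest to sensed descriptor j."""
    matches = []
    for i, d in enumerate(ref_desc):
        j = nearest(d, sen_desc)                 # forward: reference -> sensed
        if nearest(sen_desc[j], ref_desc) == i:  # backward: sensed -> reference
            matches.append((i, j))
    return matches


ref = [(0.0, 0.0), (1.0, 0.0)]
sen = [(0.9, 0.0)]
# Reference 0 picks sensed 0, but sensed 0 prefers reference 1, so only (1, 0) survives.
print(bilateral_match(ref, sen))  # [(1, 0)]
```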

Five comparison experiments were conducted to verify the accuracy of SSSF_ncc in detecting tie points between multisource images. First, the same 800 Harris feature points were detected in the reference image. The tie points were then extracted within a search region (20×20 pixels) of the sensed image via template matching, using SSSF_ncc, NCC, and HOPC_ncc as similarity measures, whereas CSLTP uses a bilateral matching strategy to match tie points. The most appropriate parameters were set for each of the three similarity measures to analyze their registration accuracy. For example, HOPC_ncc was set with β = 8 orientation bins, blocks of 3×3 cells with each cell 4×4 pixels, and an overlap of α = 1/2 block width. A global consistency check was then used to remove mismatched tie points.
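The template-matching procedure can be sketched as an exhaustive search over offsets around each reference feature point, keeping the offset with the highest similarity score. In this sketch, `half=7` gives the 15×15 template window chosen earlier, and `radius=10` (offsets of ±10 pixels) approximates the 20×20 search region; a measure computed on feature representations could be passed as `measure` in place of raw-intensity NCC. The paper's global consistency check is not specified here, so `consistency_filter` is a hypothetical translation-only stand-in. All names are illustrative.

```python
import math
import random


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-sized windows."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = math.sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))
    return num / den if den > 0 else 0.0


def window(img, r, c, half):
    """(2*half+1) x (2*half+1) window of a 2-D list, centred at (r, c)."""
    return [row[c - half:c + half + 1] for row in img[r - half:r + half + 1]]


def match_point(ref, sen, r, c, half=7, radius=10, measure=ncc):
    """Search offsets of +/-radius pixels in `sen` around (r, c) for the
    window that best matches the reference template centred at (r, c)."""
    template = window(ref, r, c, half)
    best_score, best_pos = -math.inf, None
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            rr, cc = r + dr, c + dc
            # Skip candidates whose window would fall outside the sensed image.
            if half <= rr < len(sen) - half and half <= cc < len(sen[0]) - half:
                score = measure(template, window(sen, rr, cc, half))
                if score > best_score:
                    best_score, best_pos = score, (rr, cc)
    return best_pos, best_score


def consistency_filter(pairs, tol=2.0):
    """Hypothetical translation-only consistency check: keep (ref, sen) pairs
    whose displacement lies within tol pixels of the median displacement."""
    if not pairs:
        return []
    drs = sorted(s[0] - p[0] for p, s in pairs)
    dcs = sorted(s[1] - p[1] for p, s in pairs)
    med_r, med_c = drs[len(drs) // 2], dcs[len(dcs) // 2]
    return [(p, s) for p, s in pairs
            if math.hypot(s[0] - p[0] - med_r, s[1] - p[1] - med_c) <= tol]


# Synthetic test: the sensed image is the reference shifted by (2, 3) pixels.
rng = random.Random(0)
big = [[rng.random() for _ in range(50)] for _ in range(50)]
ref = [row[5:45] for row in big[5:45]]
sen = [row[2:42] for row in big[3:43]]  # sen[r][c] == ref[r - 2][c - 3]
print(match_point(ref, sen, 20, 20))    # best position (22, 23), score close to 1.0
```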

Table 2 lists the matching performance of all five tests, including the total matches, matched points, CMRs, matching times, and RMSEs. The times are used to assess efficiency, and the CMRs and RMSEs are used to assess the registration accuracy of the proposed method.

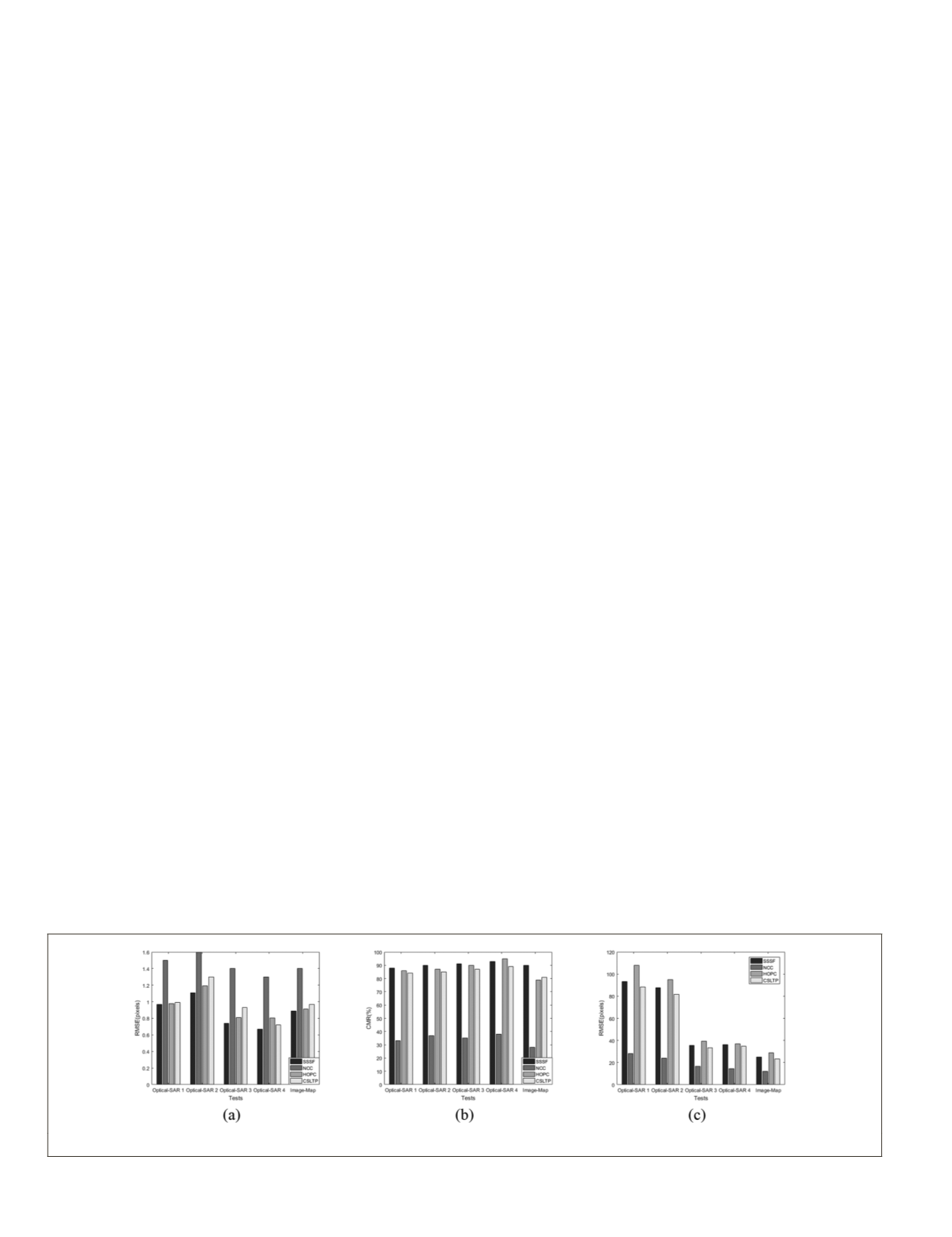

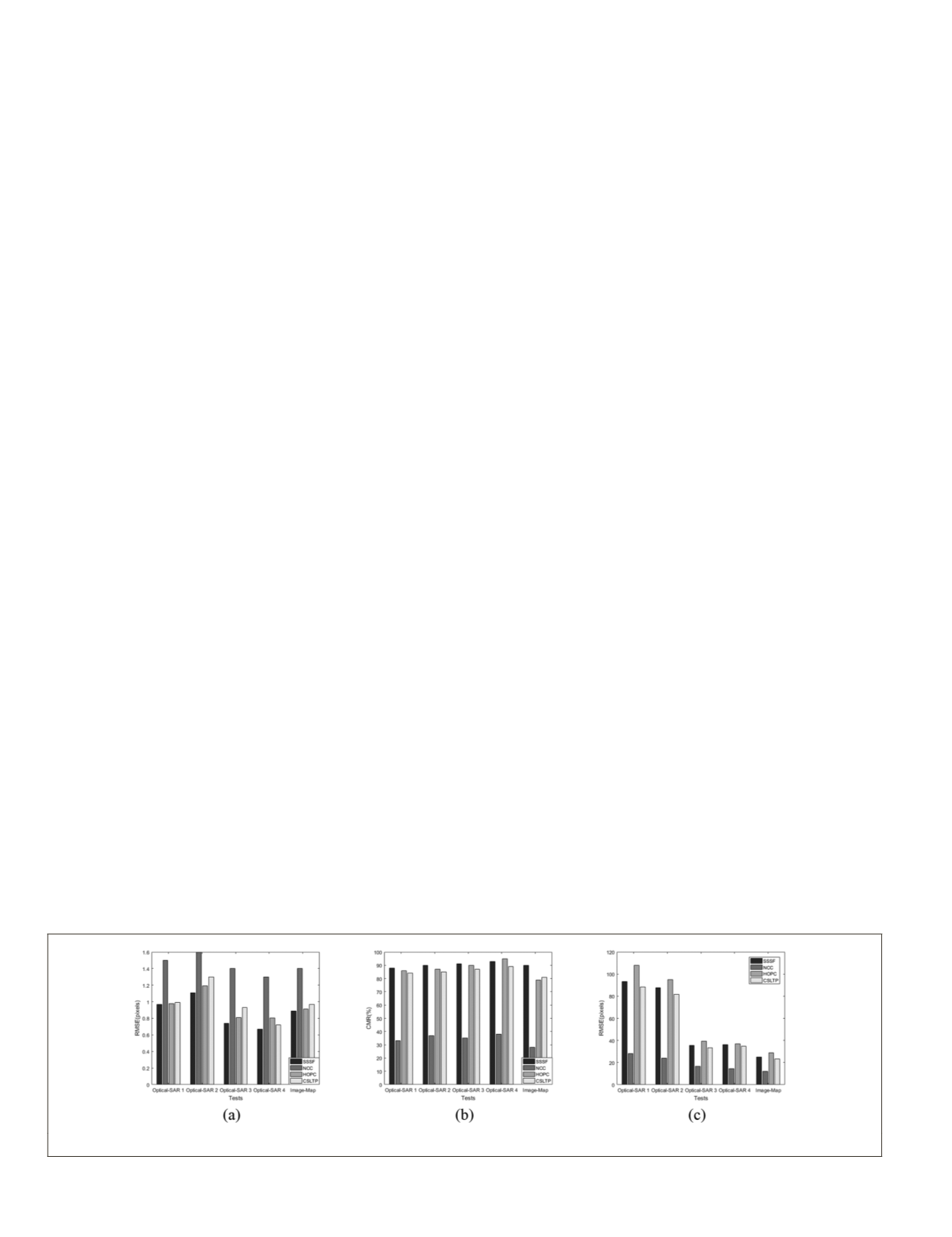

Figure 13 shows the times, RMSEs, and CMRs of these three similarity measures.

As shown in Figure 13 and Table 2, SSSF_ncc achieves better RMSEs and CMRs than the other methods. The analysis is as follows: NCC is […]ty patterns rather than the complex […]erences across multisource images. […]s good registration outcome. However, the CMRs and RMSEs of HOPC_ncc are slightly worse than those of the proposed method, because HOPC_ncc is defined by the single amplitude and orientation of phase congruency (Ye et al. 2017), whereas SSSF_ncc is based on the relative position of the vector from the center of the area to the other points. The accuracy of CSLTP is lower than that of SSSF_ncc and HOPC_ncc, possibly because it is designed to be inherently rotation invariant rather than robust to the local deformation caused by high buildings and river

Figure 13. (a) RMSEs, (b) CMRs, and (c) Times of three similarity measures.

734

October 2019

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING