techniques designed for spherical panoramic images all yield better results than those designed for planar images. This is largely because the planar-image techniques, which are based on affine invariance, do not account for the deformations introduced by the equirectangular projection, and their matching performance deteriorates as a result. Most of the failures occur in the areas close to the poles of the sphere.
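To make the pole effect concrete: in the equirectangular projection every image row spans the full 360° of longitude, so a parallel at latitude φ (whose true length is proportional to cos φ) is stretched horizontally by a factor of 1/cos φ. The short Python sketch below, an illustration rather than part of the original study, computes this factor for a given row. It assumes an image of height H whose row 0 lies at the north pole, with rows spaced uniformly in latitude.

```python
import math


def row_to_latitude(row: float, height: int) -> float:
    """Latitude (radians) at the centre of an equirectangular row.

    Assumption: row 0 is the top of the image (north pole) and the rows
    cover latitudes from +pi/2 down to -pi/2 in equal steps.
    """
    return math.pi / 2 - (row + 0.5) * math.pi / height


def horizontal_stretch(row: float, height: int) -> float:
    """Horizontal scale factor 1/cos(latitude), relative to the equator."""
    return 1.0 / math.cos(row_to_latitude(row, height))


if __name__ == "__main__":
    H = 2688  # height of the 5376 x 2688 panoramas used in this study
    for row in (H // 2, H // 4, H // 10, 10):
        lat = math.degrees(row_to_latitude(row, H))
        print(f"row {row:5d}  latitude {lat:6.2f} deg  "
              f"stretch {horizontal_stretch(row, H):7.2f}x")
```

The factor stays close to 1 near the equator but grows without bound toward the poles. This is why descriptors that assume only an affine distortion break down there.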

PR+SIFT and PR+SURF, which specifically address spherical image distortion, generally yield the second-highest repeatability in this case. This method (Taira et al., 2015) rotates a spherical image to generate several subsidiary equirectangular images and extracts features only from the less distorted region of each rotated image for matching. Its matching performance may therefore be slightly affected by the number of subsidiary images into which the sphere is divided. Spherical SIFT, on the other hand, drops in repeatability when the image appearance is changed by rotation. In contrast, regardless of the rotation angle ω, the proposed method, whether combined with SIFT or SURF, maintains a high repeatability score (95 to 100 percent in Table 2). Notably, a rotation of 180° produces a reversed image with the same distortion. In this case the proposed rectified matching fully compensates for the rotation discrepancy, reaching 99 percent repeatability with SIFT and 100 percent with SURF. The experiment also shows that our method can be readily combined with different feature descriptors.
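The repeatability scores in Table 2 compare the features detected before and after the spherical image is rotated by ω. This section does not restate the exact scoring protocol, so the Python sketch below rests on several assumptions. It uses a common definition: a feature counts as repeated if, once mapped through the known rotation, it lies within an angular tolerance of a feature detected in the other image, and the repeated count is divided by the smaller of the two feature counts. It also assumes that ω is a rotation about the X axis (the usual photogrammetric ω) and uses a hypothetical tolerance of 0.5°. The helper names are illustrative.

```python
import numpy as np


def rotation_x(omega: float) -> np.ndarray:
    """Rotation matrix about the X axis by omega radians (assumed axis for ω)."""
    c, s = np.cos(omega), np.sin(omega)
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, c, -s],
                     [0.0, s, c]])


def repeatability(pts_a: np.ndarray, pts_b: np.ndarray, omega: float,
                  tol_deg: float = 0.5) -> float:
    """Repeatability between an image and its ω-rotated copy (hypothetical helper).

    pts_a: (N, 3) unit vectors of features detected in the original image.
    pts_b: (M, 3) unit vectors of features detected in the rotated image.
    tol_deg: assumed angular tolerance for treating two features as the same.
    """
    if len(pts_a) == 0 or len(pts_b) == 0:
        return 0.0
    # Predicted positions of A's features after the known rotation.
    mapped = pts_a @ rotation_x(omega).T
    # Cosines of the great-circle angles between every mapped A and every B.
    cos_sim = np.clip(mapped @ pts_b.T, -1.0, 1.0)
    angles = np.degrees(np.arccos(cos_sim))
    found_a = int(np.sum(angles.min(axis=1) <= tol_deg))  # A features seen again in B
    found_b = int(np.sum(angles.min(axis=0) <= tol_deg))  # B features explained by A
    return min(found_a, found_b) / min(len(pts_a), len(pts_b))
```

Repeated features are counted in both directions, which keeps the score at or below 1 even when several features crowd into one neighbourhood.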

Figure 6 illustrates the distribution of the acquired point correspondences; dots mark the feature locations and are labeled with index numbers. Compared with SURF, the rectified spherical matching finds up to 24 percent more matches and also yields a better spatial distribution.

Because the proposed rectification explicitly generates a rectified image patch for each feature point based on the inherent characteristics of a spherical panoramic image, the computational cost increases linearly with the number of points. All algorithms used in this study are currently coded in Matlab® and run on a Windows™ laptop. The run time increases by about 10 percent compared with that of the SURF implementation built in this study, yet the performance of spherical image matching improves dramatically.
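The linear growth in cost comes from resampling a separate perspective-like patch around every feature point. This section does not restate the paper's exact rectification model. The Python sketch below therefore substitutes a plain gnomonic (tangent-plane) projection with nearest-neighbour sampling, which shows the kind of per-point work involved. The function name `rectified_patch`, the 32-pixel patch size, and the 10° field of view are all illustrative assumptions.

```python
import numpy as np


def rectified_patch(equi: np.ndarray, u: float, v: float,
                    size: int = 32, fov_deg: float = 10.0) -> np.ndarray:
    """Hypothetical helper: tangent-plane (gnomonic) patch centred on pixel (u, v).

    equi: equirectangular image (H, W) or (H, W, C); longitude spans the width
    and latitude spans the height, with row 0 assumed to be the north pole.
    """
    h, w = equi.shape[:2]
    lon0 = (u + 0.5) / w * 2 * np.pi - np.pi
    lat0 = np.pi / 2 - (v + 0.5) / h * np.pi
    # Local axes of the tangent plane: viewing direction, east, and north.
    centre = np.array([np.cos(lat0) * np.cos(lon0),
                       np.cos(lat0) * np.sin(lon0),
                       np.sin(lat0)])
    east = np.array([-np.sin(lon0), np.cos(lon0), 0.0])
    north = np.cross(centre, east)
    # Grid on the tangent plane; half-width tan(fov/2) gives a fov_deg view.
    half = np.tan(np.radians(fov_deg) / 2)
    x, y = np.meshgrid(np.linspace(-half, half, size),
                       np.linspace(half, -half, size))
    rays = centre + x[..., None] * east + y[..., None] * north
    rays /= np.linalg.norm(rays, axis=-1, keepdims=True)
    lon = np.arctan2(rays[..., 1], rays[..., 0])
    lat = np.arcsin(np.clip(rays[..., 2], -1.0, 1.0))
    # Map back to equirectangular pixels (nearest neighbour; longitude wraps).
    cols = np.floor((lon + np.pi) / (2 * np.pi) * w).astype(int) % w
    rows = np.clip(np.floor((np.pi / 2 - lat) / np.pi * h).astype(int), 0, h - 1)
    return equi[rows, cols]
```

Each call resamples `size * size` pixels, so the total work scales with the number of feature points. A descriptor such as SIFT or SURF would then be computed on the returned patch rather than on the distorted panorama.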

Matching Between Spherical Imagery and Heterogeneous Data

The proposed spherical rectification converts distorted areas into a perspective-like view to acquire reliable feature descriptions. This opens up potential applications such as hybrid camera networks for intelligent control, surveillance, or engineering purposes. We therefore use a RICOH THETA panoramic camera and a smartphone camera to capture images with different imaging geometry, scale, and resolution in an indoor environment, and we assess the matching performance between these heterogeneous data sources.

The sizes of the panoramic and planar images are 5376 × 2688 and 3024 × 5376 pixels, respectively. For a fair comparison, we directly apply SURF and the rectified matching with identical parameter configurations. To acquire strong features and reliable matches, strict thresholds of 1600 for the Fast-Hessian detector and 0.5 for the ratio test are used. As shown in Figure 7, 36.4 percent of the 324 detected features are matched, and a manual check confirms that up to 99 percent of these matches are correct. As can be observed in Figure 7(b), points 62 to 71, located near the pole of the sphere, still find correct conjugate points in the planar image even though they are degraded by distortion and scale discrepancy. However, when we loosen the thresholds as suggested in the literature, the matching rate increases to 68 percent, but the correct rate drops to 23 percent. The incorrect matches result largely from repeated texture patterns within this scene and should be removed by a subsequent outlier removal process.
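The ratio test (Lowe, 2004) accepts a match only when the nearest descriptor in the other image is clearly closer than the second-nearest one. A stricter ratio, such as the 0.5 used here, therefore keeps fewer but more reliable matches. The minimal brute-force NumPy sketch below illustrates the test. It is not the authors' Matlab implementation, and it assumes the descriptors are rows of floating-point arrays (for example, 64-dimensional SURF descriptors).

```python
import numpy as np


def ratio_test_match(desc_a: np.ndarray, desc_b: np.ndarray,
                     ratio: float = 0.5) -> list[tuple[int, int]]:
    """Hypothetical helper: (i, j) pairs that pass the nearest/second-nearest test.

    desc_a: (N, D) descriptors from the first image.
    desc_b: (M, D) descriptors from the second image, with M >= 2.
    """
    # Brute-force pairwise Euclidean distances, shape (N, M).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)
    matches = []
    for i in range(dists.shape[0]):
        best, second = order[i, 0], order[i, 1]
        # Keep the match only if it is clearly better than the runner-up.
        if dists[i, best] < ratio * dists[i, second]:
            matches.append((i, int(best)))
    return matches
```

Ambiguous candidates, such as the repeated texture patterns mentioned above, have two nearly equal nearest distances and are rejected. Loosening the ratio lets more of them through, and those extra matches are what a subsequent outlier removal step must then filter.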

Furthermore, the rectified spherical matching could help establish correspondences between images and lidar data for further tasks such as image-based self-localization and absolute image pose estimation. The common approach is to convert the discrete lidar point cloud into range/intensity images and use image processing techniques to automatically find corresponding features in several planar images. This may involve segmentation, interpolation, and iterative searching of the point cloud, which incurs a high computational cost. Therefore, in this paper,

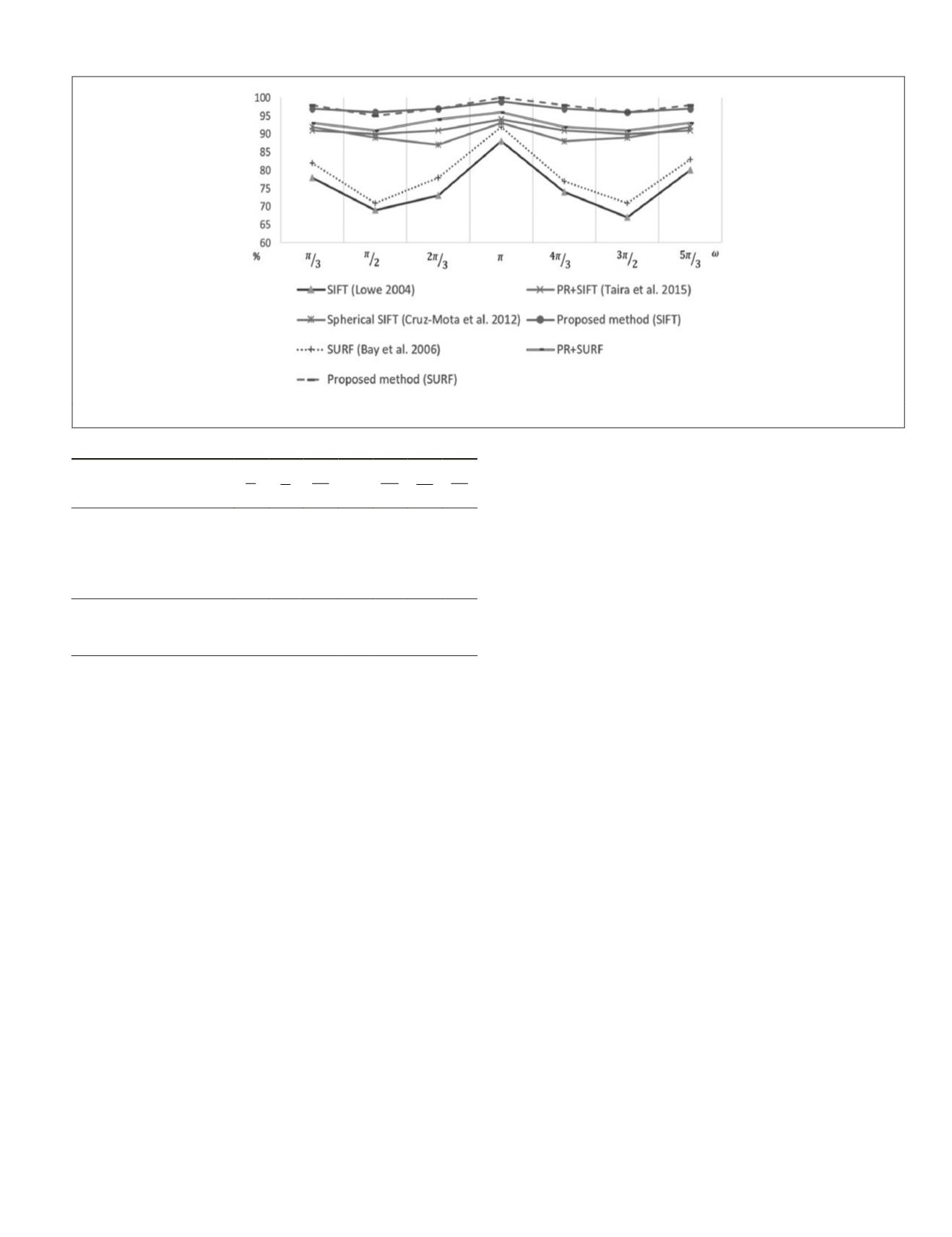

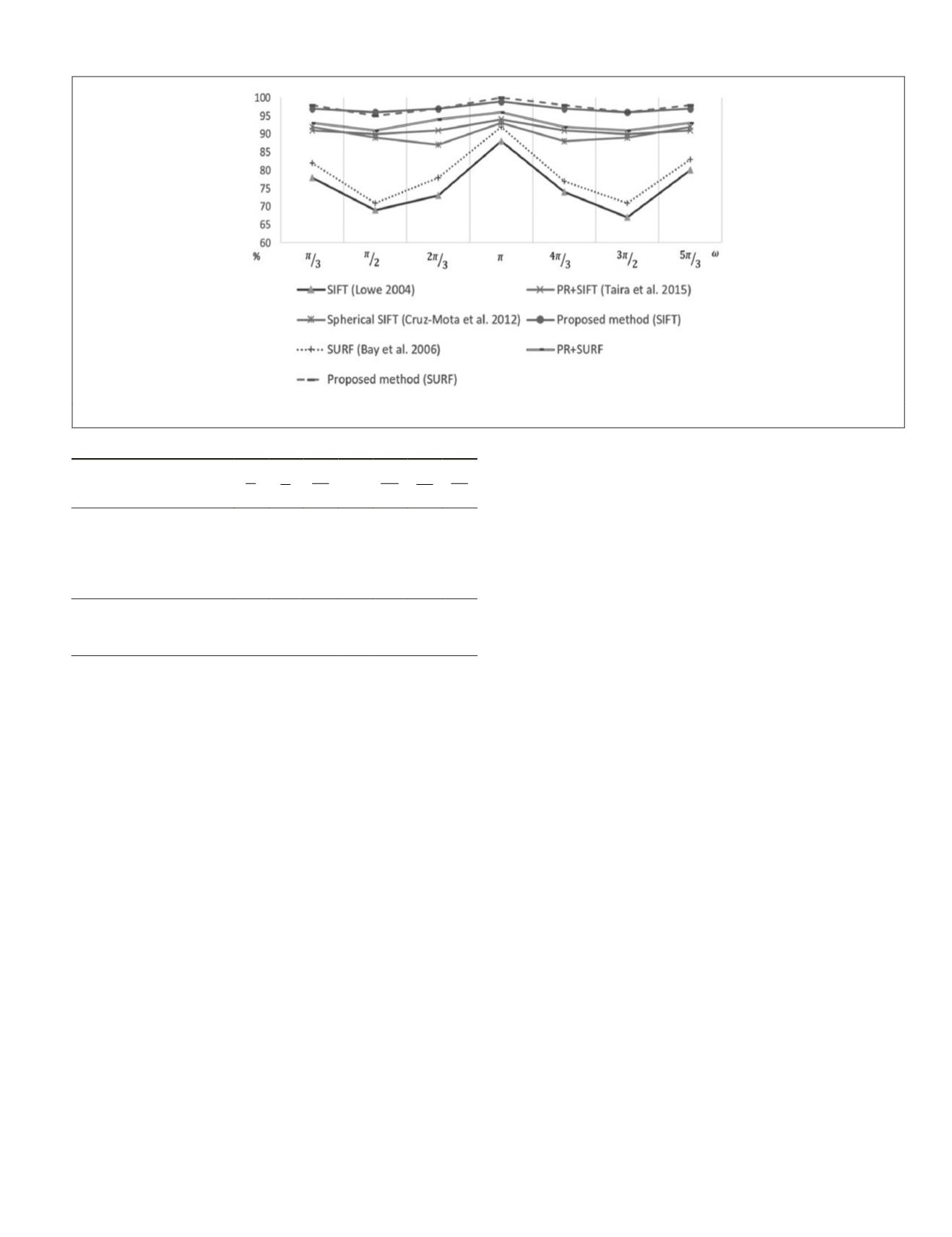

Figure 5. Trends of repeatability under ω rotation.

Table 2. Repeatability scores (%).

| Rotating angle ω | π/3 | π/2 | 2π/3 | π | 4π/3 | 3π/2 | 5π/3 |
|---|---|---|---|---|---|---|---|
| SIFT (Lowe, 2004) | 78 | 69 | 73 | 88 | 74 | 67 | 80 |
| PR+SIFT (Taira et al., 2015) | 91 | 90 | 91 | 94 | 91 | 90 | 91 |
| Spherical SIFT (Cruz-Mota et al., 2012) | 92 | 89 | 87 | 93 | 88 | 89 | 92 |
| Proposed method (SIFT) | 97 | 96 | 97 | 99 | 97 | 96 | 97 |
| SURF (Bay et al., 2006) | 82 | 71 | 78 | 92 | 77 | 71 | 83 |
| PR+SURF | 93 | 91 | 94 | 96 | 92 | 91 | 93 |
| Proposed method (SURF) | 98 | 95 | 97 | 100 | 98 | 96 | 98 |

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

January 2018

29