image-based and featured forward-looking stereo cameras with horizontal baselines. To cover a wider field of view, up to full 360° coverage, more recent systems feature either multiple stereo cameras (Cavegn and Haala, 2016; Meilland et al., 2015) or hybrid configurations consisting of stereo cameras and panorama cameras in combination with lidar sensors (Paparoditis et al., 2012).

Specific 360° stereovision mobile mapping systems include the Earthmine Mars Collection System (Earthmine, 2014), with a vertical mast and four pairs of cameras, each pair forming a vertical stereo base. In contrast, Heuvel et al. (2006) presented a mobile mapping system with a single 360° camera configuration, following the virtual stereo base approach. Since the stereo bases are derived from vehicle movement, their accuracy strongly depends on the relative orientation between two different camera positions. Consequently, virtual stereo bases cannot achieve the same accuracy as rigidly mounted and well pre-calibrated ones. Noteworthy examples among the many 360° camera systems in robotics include Meilland et al. (2015), who introduced a spherical image acquisition system consisting of three stereo systems, mounted either in a ring configuration or in a vertical configuration. The vertical configuration is composed of three stereo pairs mounted back-to-back, whereby each triplet of cameras is assumed to have a unique center of projection. This assumption allows standard stitching algorithms to be used when building the photometric spheres, but sacrifices geometric accuracy. Lui and Jarvis (2010) introduced alternative 360° stereo systems based on catadioptric optics. With the rapid evolution of virtual reality headsets and 360° videos on platforms such as YouTube®, we see a rapidly growing number of 360° stereo cameras, ranging from consumer-grade to high-end devices (e.g., Nokia Ozo). While these cameras provide 360° stereo coverage, their stereo baselines are typically small, which makes them unsuitable for large-scale measuring applications.
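Why small baselines limit measurement accuracy can be made concrete with the textbook error propagation for the normal case of stereo: from Z = f·b/d it follows that σ_Z ≈ Z²·σ_d/(f·b). The following Python sketch is only illustrative. The focal length, the disparity precision and both baselines are hypothetical values, not specifications of any camera mentioned above, and fisheye geometry is ignored.

```python
def depth_precision(depth_m: float, baseline_m: float, focal_px: float,
                    disparity_sigma_px: float = 0.5) -> float:
    """Depth standard deviation for the normal case of stereo.

    Z = f * b / d, so first-order error propagation gives
    sigma_Z = Z**2 / (f * b) * sigma_d.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_sigma_px


if __name__ == "__main__":
    # Hypothetical values: 20 m object distance, 1000 px focal length,
    # 0.5 px disparity precision (all assumptions for illustration).
    for b in (0.065, 0.9):  # short "consumer-style" vs. longer "mapping-style" baseline
        sigma = depth_precision(20.0, b, 1000.0)
        print(f"baseline {b:.3f} m -> sigma_Z {sigma:.3f} m")
```

With these assumed values, the short baseline yields a depth precision of roughly 3.1 m at 20 m, against roughly 0.22 m for the longer one. Because σ_Z scales with 1/b and with Z², shortening the baseline or measuring farther away degrades precision quickly.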

For mobile mapping systems with high accuracy demands, moving from standard stereo systems, with their proven camera models, calibration procedures and measuring accuracies (Burkhard et al., 2012), to 360° stereo configurations with multiple fisheye cameras poses new challenges. Abraham and Förstner (2005) provide a valuable summary and discussion of camera models for fisheye cameras and the corresponding epipolar rectification methods. They introduce two fisheye models with parallel epipolar lines: the epipolar equidistant model and the epipolar stereographic model. Kannala and Brandt (2006) further introduce a general calibration model for fisheye lenses, which approximates different fisheye models with Fourier series.
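To illustrate why the epipolar equidistant model yields parallel epipolar lines, here is a minimal Python sketch based on our reading of the model. With the baseline along the camera X axis, a ray is described by the angle β of its epipolar plane about the baseline and by the angle ψ within that plane, mapped linearly to x = c·ψ and y = c·β. The function name, the axis conventions and the principal distance c in pixels are assumptions; Abraham and Förstner (2005) remain the authoritative source for the exact definitions.

```python
import math


def epipolar_equidistant(point, c):
    """Project a camera-frame point (X, Y, Z) with an epipolar equidistant mapping.

    Assumed convention: the stereo baseline lies along the camera X axis.
    beta is the rotation of the epipolar plane about the X axis,
    psi is the ray angle within that plane, measured from the YZ plane.
    """
    X, Y, Z = point
    beta = math.atan2(Y, Z)
    psi = math.atan2(X, math.hypot(Y, Z))
    return c * psi, c * beta  # image coordinates (x, y)


if __name__ == "__main__":
    c, b = 500.0, 0.5      # hypothetical principal distance [px] and baseline [m]
    P = (2.0, 1.5, 8.0)    # hypothetical object point in the left camera frame
    x1, y1 = epipolar_equidistant(P, c)
    # Right camera: same orientation, shifted by b along X
    x2, y2 = epipolar_equidistant((P[0] - b, P[1], P[2]), c)
    print(y1 == y2, x1 - x2)  # same image row; the disparity appears in x only
```

Since a shift along the baseline changes only X, both projections share the same β and therefore the same image row, which is exactly the property that makes row-wise dense matching possible.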

There are numerous works on stereo processing and 3D extraction using panoramic and fisheye stereo. These include investigations by Chen et al. (2012) on the generation of panoramic epipolar images from panoramic image pairs. Schneider et al. (2016) present an approach for extracting a 3D point cloud from an epipolar equidistant stereo image pair and provide a functional model for accuracy pre-analysis. Luber (2015) delivers a generic workflow for 3D data extraction from stereo systems. Krombach et al. (2015) provide an excellent evaluation of stereo algorithms for obstacle detection with fisheye lenses, with a particular focus on real-time processing. Finally, Strecha et al. (2015) perform a quality assessment of 3D reconstruction using fisheye and perspective sensors.
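For an epipolar equidistant pair, triangulation reduces to planar geometry within each epipolar plane. The sketch below is a simplified illustration under assumed conventions (x = c·ψ, y = c·β, right camera displaced by the baseline b along +X with identical orientation). It is not the approach of Schneider et al. (2016), and it omits their accuracy pre-analysis.

```python
import math


def triangulate_epipolar_equidistant(x1, y1, x2, c, b):
    """Recover (X, Y, Z) in the left camera frame from a rectified correspondence.

    Assumptions: both images use x = c*psi, y = c*beta; the right camera is at
    (b, 0, 0) with the same orientation, so corresponding points share y.
    """
    psi1, psi2, beta = x1 / c, x2 / c, y1 / c
    # Within the epipolar plane, rho is the distance from the baseline axis:
    # tan(psi1) = X / rho and tan(psi2) = (X - b) / rho  =>  rho = b / (tan psi1 - tan psi2)
    denom = math.tan(psi1) - math.tan(psi2)
    if denom <= 0:
        raise ValueError("non-positive angular disparity: point cannot be triangulated")
    rho = b / denom
    # Rotate back out of the epipolar plane using its angle beta about the X axis
    return rho * math.tan(psi1), rho * math.sin(beta), rho * math.cos(beta)
```

Points whose rays run nearly parallel to the baseline (ψ close to ±90°) are poorly conditioned in this formulation, because the tangents grow without bound and small angular errors produce large position errors.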

Despite the widespread use of mobile mapping systems, there are relatively few systematic studies on the relative and absolute 3D measurement accuracies provided by the different systems. A number of studies investigate the precision and accuracy of mobile terrestrial laser scanning systems (e.g., Barber et al., 2008; Haala et al., 2008; Puente et al., 2013). Barber et al. (2008) and Haala et al. (2008) demonstrate 3D measurement accuracies on the order of 3 cm under good GNSS conditions.

Only a few publications investigate the measurement accuracies of vision-based mobile mapping systems. Burkhard et al. (2012) obtained absolute 3D point measurement accuracies of 4 to 5 cm under average to good GNSS conditions using a stereovision mobile mapping system. Eugster et al. (2012) demonstrated the use of stereovision-based position updates for a consistent improvement of absolute 3D measurement accuracies from several decimeters to 5 to 10 cm for land-based mobile mapping, even under poor GNSS conditions. Cavegn et al. (2016) employed bundle adjustment methods for image-based georeferencing of stereo image sequences and consistently obtained absolute 3D point position accuracies of approximately 4 cm.

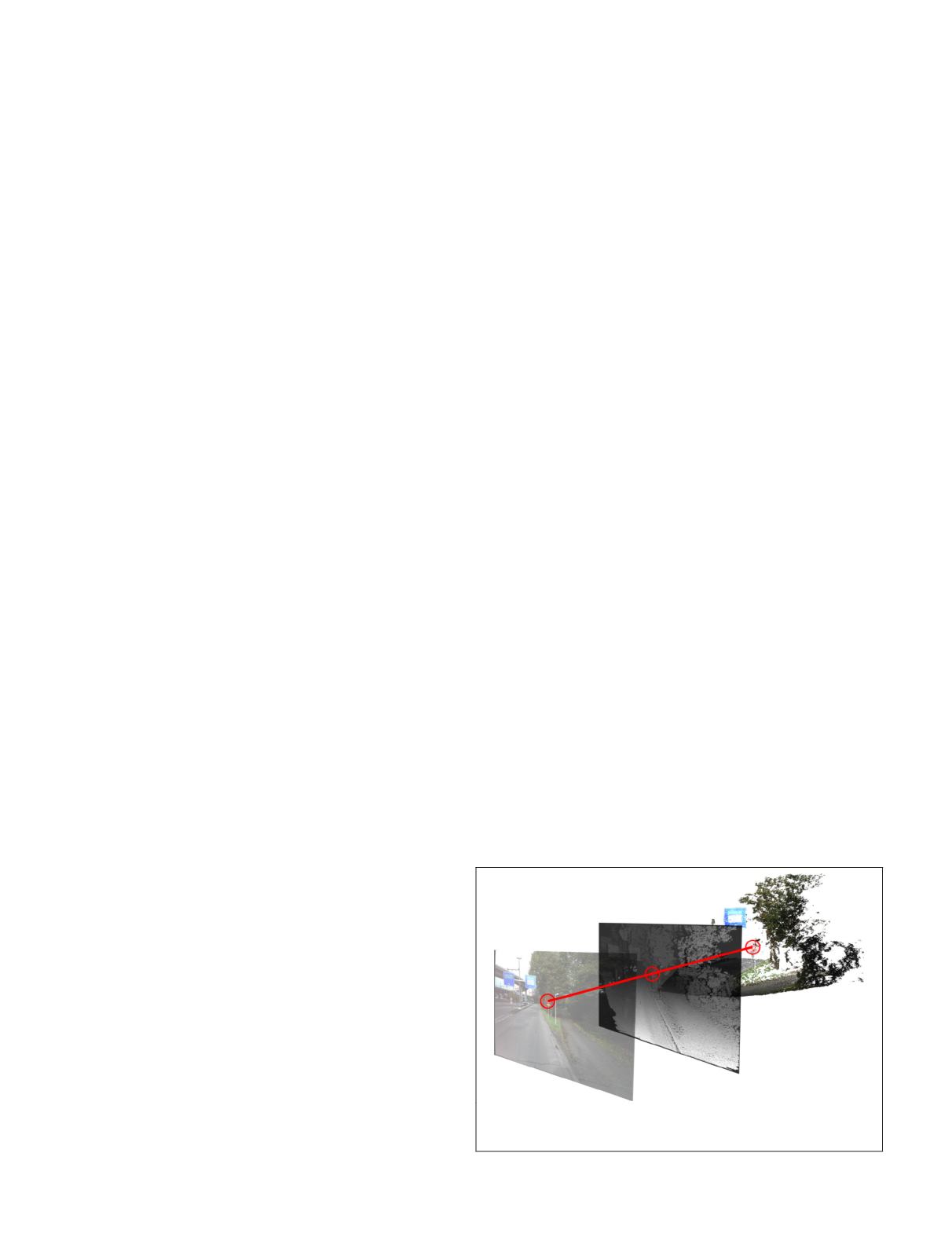

Geospatial 3D Imagery

In our earlier publication (Nebiker et al., 2015), we proposed to treat geospatial 3D imagery, or “Geospatial 3D Image Spaces”, as a new type of native urban model, combining radiometric, depth, and other spectral information. This concept is technology-independent with regard to depth generation. In this contribution, we exploited dense stereo image matching to reconstruct the depth information. Nevertheless, other technologies (e.g., range imaging or lidar), with their respective strengths and weaknesses, could be used for image depth generation. We prefer the more general term 3D imagery to RGB-D imagery, since the concept is applicable to any combination of spectral channels, including near infrared (NIR) or thermal infrared (TIR) imagery. We furthermore postulated that such a model should fulfill the following requirements:

• It shall provide a high-fidelity metric photographic representation of the urban environment, which is easy to interpret and which can be augmented with existing or projected GIS data;
• The RGB and the depth information shall be spatially and temporally coherent, i.e., the radiometric and the depth observations should ideally take place at exactly the same instant (this could also be expressed as WYSIWYG = what you see is what you get);
• The depth information shall be dense, ideally providing a depth value for each pixel of the corresponding radiometric image;
• Image collections are usually ordered, e.g., in the form of image sequences, for simple navigation, and shall be efficiently accessible via spatial data structures;
• The model shall support metric imagery with different geometries, e.g., with perspective, panoramic or fisheye projections;
• The model shall be easy to use and shall at least support simple, robust and accurate image-based 3D measurements using enhanced 3D monoplotting (see Figure 2); and
• The model shall provide measures to protect privacy.

Figure 2. Principle of a geospatial 3D image with RGB image (left), co-registered dense depth map (middle) and its representation in object space as a colored 3D point cloud (right).
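The principle shown in Figure 2 and the monoplotting requirement can be illustrated with a short NumPy sketch. Every valid pixel of a co-registered depth map is back-projected into object space to form a colored point cloud, and a single measured pixel is converted into a 3D coordinate in the same way. For simplicity, the sketch assumes a pinhole perspective image, a depth map storing distances along the optical axis, a calibration matrix K, a camera-to-world rotation R and a projection center C. These names and conventions are our assumptions; panoramic or fisheye geometries would need a different ray model.

```python
import numpy as np


def backproject(depth, K):
    """Back-project a z-depth map (H x W) to camera-frame points (H x W x 3)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # column and row index grids
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return np.stack([x, y, depth], axis=-1)


def colored_point_cloud(rgb, depth, K, R, C):
    """World-frame points (N x 3) and their colors (N x 3) for pixels with valid depth."""
    valid = np.isfinite(depth) & (depth > 0)  # skip holes in the depth map
    pts_cam = backproject(depth, K)[valid]
    return pts_cam @ R.T + C, rgb[valid]


def monoplot(u, v, depth, K, R, C):
    """3D monoplotting sketch: world coordinate of the pixel at column u, row v."""
    z = depth[v, u]
    p_cam = np.array([(u - K[0, 2]) / K[0, 0] * z, (v - K[1, 2]) / K[1, 1] * z, z])
    return R @ p_cam + C


if __name__ == "__main__":
    # Hypothetical 3 x 4 image with constant 10 m depth and one missing value
    K = np.array([[100.0, 0.0, 2.0], [0.0, 100.0, 1.5], [0.0, 0.0, 1.0]])
    depth = np.full((3, 4), 10.0)
    depth[0, 0] = 0.0
    rgb = np.zeros((3, 4, 3), dtype=np.uint8)
    R, C = np.eye(3), np.array([1.0, 2.0, 3.0])
    pts, cols = colored_point_cloud(rgb, depth, K, R, C)
    print(pts.shape, monoplot(2, 1, depth, K, R, C))
```

Because depth is stored per pixel, a monoplotting measurement needs only a table lookup and one ray scaling, with no second image or matching step at query time.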

348

June 2018

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING