The evaluation protocol is defined in great detail by Balntas

et al.

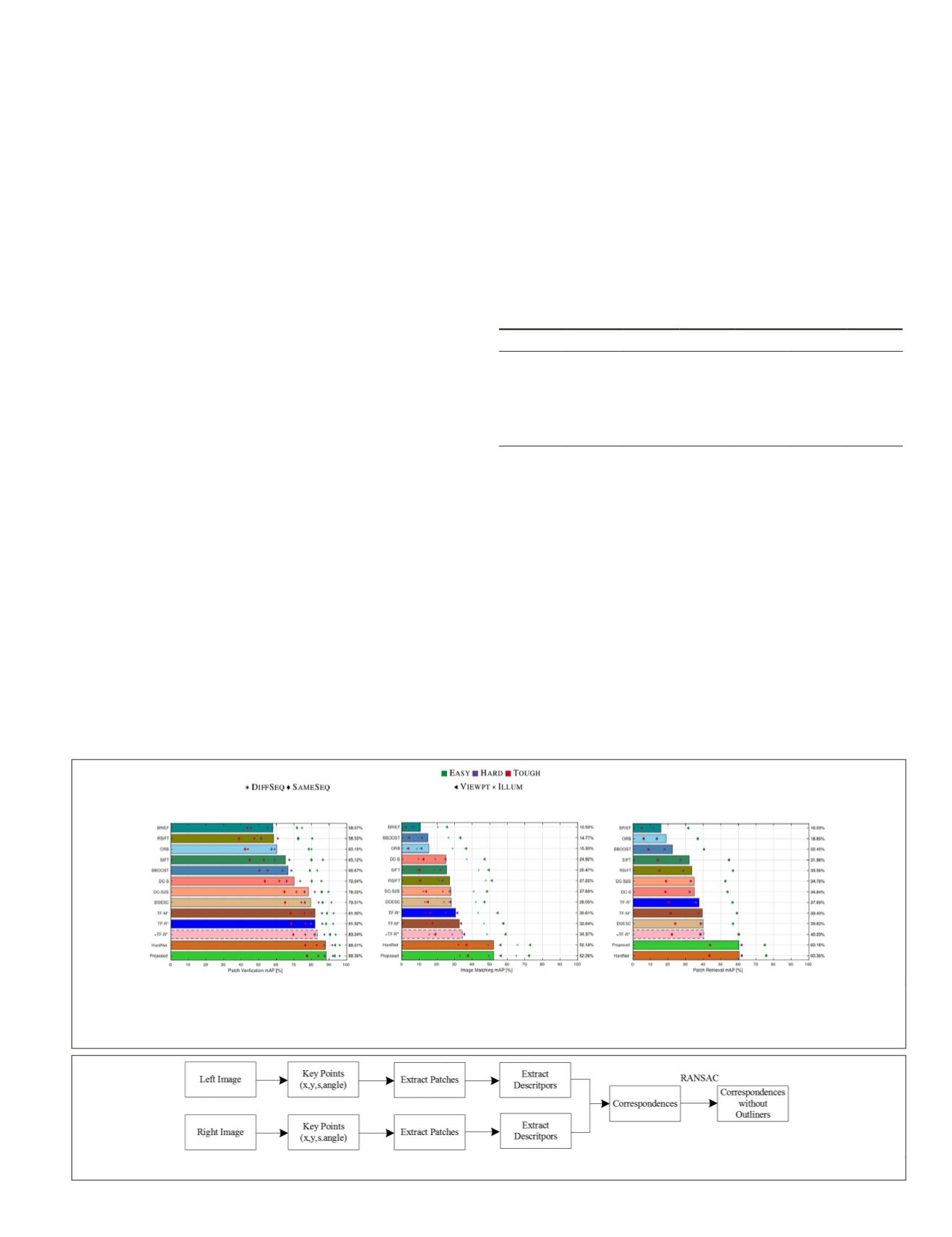

(2017). As shown in Figure 6, the proposed

PPD

descrip-

tor has the top performance among all handcrafted and deep

descriptors in the verification, matching, and retrieval tasks,

except slightly lower than HardNet in the retrieval task.

Except for the HardNet approach, the proposed

PPD

descrip-

tor outperforms other descriptors by a large margin in image

matching and patch retrieval tasks.

Real Data Set

Although the proposed descriptor PPD has achieved top performance on the two benchmarks mentioned above, it remains to be shown that it can be applied to feature matching in a real aerial image data set. In this section, we use pairs of aerial images to test the performance of the learned descriptor. In addition, we test the effectiveness of the proposed descriptor in two extreme cases: illumination changes and viewpoint changes.

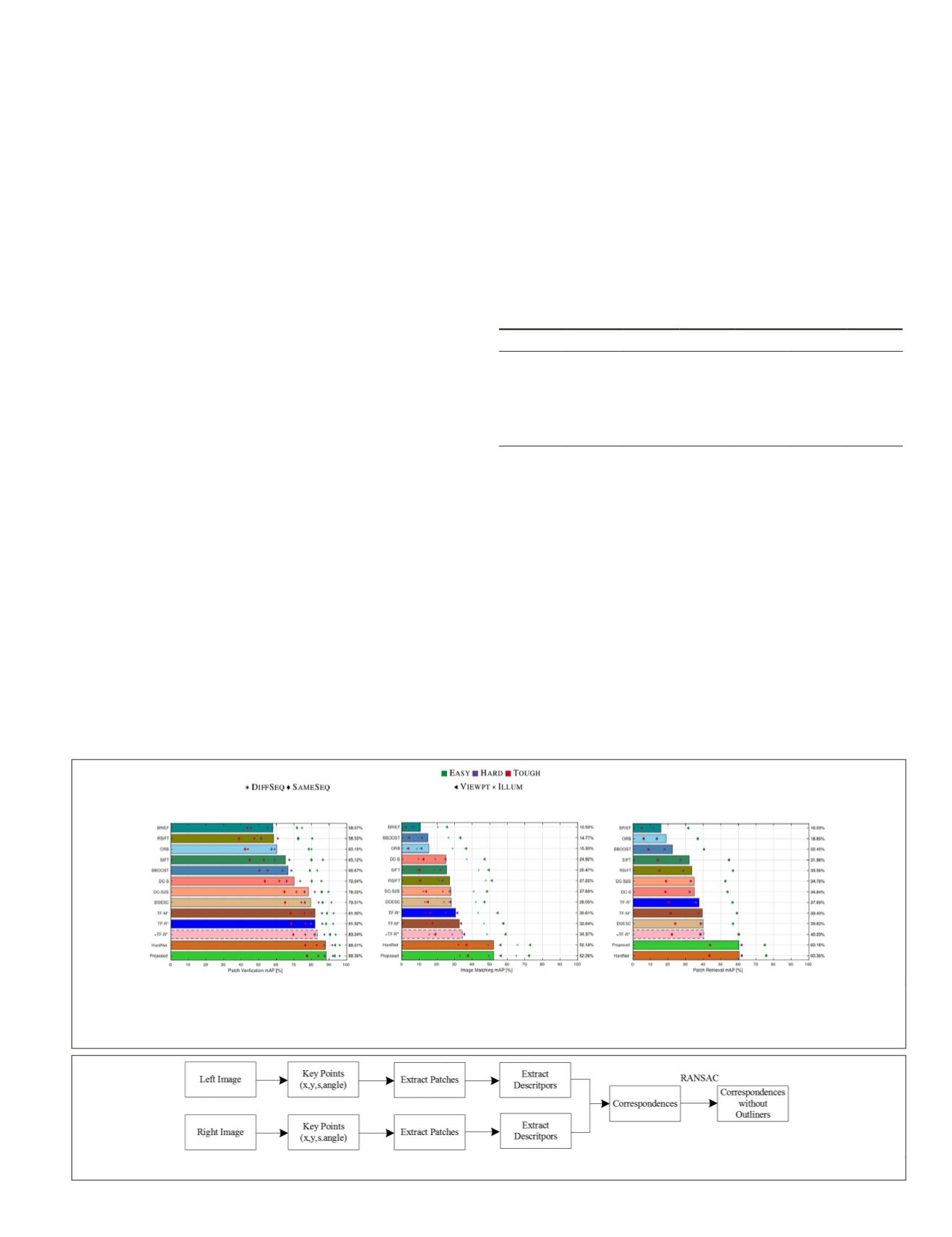

Figure 7 shows the workflow of obtaining correspondences from two images. First, the interest points, each with a key point location (x, y), scale s, and angle θ, are detected using a number of feature detection algorithms. Then rectified image patches are extracted from the regions around the interest points according to the scale s. The rectified image patch Patch′ can be obtained from the original image patch according to the angle θ:

$$
\mathrm{Patch}' = \mathrm{Rot} \cdot \mathrm{Patch} =
\begin{bmatrix}
\cos\theta & -\sin\theta \\
\sin\theta & \cos\theta
\end{bmatrix}
\cdot \mathrm{Patch}
\tag{12}
$$
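The rectification in Equation 12 can be illustrated with a short sketch. This is not the authors' implementation: it assumes that Rot is the standard counterclockwise 2D rotation matrix, that θ is given in radians, and that patches are resampled with nearest-neighbour interpolation. The function names `rotation_matrix` and `rectify_patch` and the default patch size are hypothetical.

```python
import numpy as np

def rotation_matrix(theta):
    """Standard 2D rotation matrix for an angle theta in radians (assumed convention)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s],
                     [s,  c]])

def rectify_patch(image, x, y, s, theta, size=32):
    """Sample a size x size patch centred on (x, y), scaled by s and rotated by theta.

    Uses nearest-neighbour sampling; coordinates outside the image are clamped
    to the nearest border pixel.
    """
    # Regular grid of patch coordinates centred on the origin.
    half = (size - 1) / 2.0
    u, v = np.meshgrid(np.arange(size) - half, np.arange(size) - half)
    grid = np.stack([u.ravel(), v.ravel()])            # shape (2, size * size)
    # Rotate and scale the grid, then shift it to the key point location.
    src = s * (rotation_matrix(theta) @ grid) + np.array([[x], [y]])
    cols = np.clip(np.rint(src[0]).astype(int), 0, image.shape[1] - 1)
    rows = np.clip(np.rint(src[1]).astype(int), 0, image.shape[0] - 1)
    return image[rows, cols].reshape(size, size)
```

With θ = 0 and s = 1 the function reduces to a plain crop around the key point, which is a convenient sanity check before feeding patches to a descriptor network.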

Following this simple rectification, the descriptors of the interest points are computed using the proposed PPD descriptor. We find corresponding features by comparing the distances between the descriptors using either the Hamming distance or the L2 distance.
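As a rough illustration of the two distance measures, the sketch below computes pairwise L2 distances for real-valued descriptors and pairwise Hamming distances for binary descriptors. It assumes that binary descriptors are stored as packed `uint8` bytes, one descriptor per row; the function names are hypothetical and do not come from the paper.

```python
import numpy as np

def l2_distances(desc_a, desc_b):
    """Pairwise Euclidean distances between real-valued descriptors (one per row)."""
    a = np.asarray(desc_a, dtype=np.float64)
    b = np.asarray(desc_b, dtype=np.float64)
    # ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b, clipped at zero against round-off.
    sq = (a ** 2).sum(1)[:, None] + (b ** 2).sum(1)[None, :] - 2.0 * a @ b.T
    return np.sqrt(np.maximum(sq, 0.0))

def hamming_distances(desc_a, desc_b):
    """Pairwise Hamming distances between binary descriptors packed as uint8 bytes."""
    a = np.asarray(desc_a, dtype=np.uint8)
    b = np.asarray(desc_b, dtype=np.uint8)
    # XOR marks the differing bits; unpacking and summing counts them.
    xor = a[:, None, :] ^ b[None, :, :]
    return np.unpackbits(xor, axis=2).sum(axis=2)
```

Both functions return an (N, M) matrix in which entry (i, j) is the distance between descriptor i of the first image and descriptor j of the second.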

By comparing the distances between descriptors, a correspondence for a given key point is accepted when the ratio between the shortest distance and the second-shortest distance is smaller than a given threshold. Since the initial correspondences usually contain incorrect matches, the RANSAC algorithm is used to remove the outliers from the correspondences.
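The ratio test and the outlier removal described above can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the ratio threshold of 0.8, the affine motion model, the inlier tolerance, the iteration count, and all function names are illustrative choices. The paper does not state which geometric model is used with RANSAC.

```python
import numpy as np

def ratio_test_matches(dist, ratio=0.8):
    """Return (i, j) index pairs whose best distance is below ratio * second best.

    dist is an (N, M) matrix of descriptor distances with M >= 2.
    """
    order = np.argsort(dist, axis=1)
    rows = np.arange(dist.shape[0])
    best, second = order[:, 0], order[:, 1]
    keep = dist[rows, best] < ratio * dist[rows, second]
    return np.stack([rows[keep], best[keep]], axis=1)

def fit_affine(src, dst):
    """Least-squares 2D affine transform (3 x 2 parameters) mapping src to dst."""
    A = np.hstack([src, np.ones((len(src), 1))])
    params, *_ = np.linalg.lstsq(A, dst, rcond=None)
    return params

def ransac_affine(src, dst, n_iter=500, tol=3.0, seed=0):
    """Boolean inlier mask for matched points under an assumed affine model."""
    rng = np.random.default_rng(seed)
    A = np.hstack([src, np.ones((len(src), 1))])
    best_mask = np.zeros(len(src), dtype=bool)
    for _ in range(n_iter):
        # Three correspondences are the minimal sample for an affine transform.
        sample = rng.choice(len(src), size=3, replace=False)
        params = fit_affine(src[sample], dst[sample])
        residual = np.linalg.norm(A @ params - dst, axis=1)
        mask = residual < tol
        # Keep the hypothesis supported by the most correspondences.
        if mask.sum() > best_mask.sum():
            best_mask = mask
    return best_mask
```

In use, `ratio_test_matches` would be applied to a descriptor distance matrix, the matched key point coordinates would be gathered into `src` and `dst` arrays of shape (n, 2), and the mask returned by `ransac_affine` would select the final correspondences. For image pairs with strong perspective effects, a homography or fundamental-matrix model would be a more suitable choice than the affine model assumed here.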

Point Matching for Aerial Images

Three aerial image pairs with a resolution of 2000×1498 pixels, captured by a Phase One aerial camera in Wuhan, China, are used to evaluate the performance of the proposed PPD descriptor. These are ultra-high-resolution aerial images that cover different types of objects, including buildings, vegetation, and roads. Table 4 shows detailed information on the interest points extracted in the three image pairs by the BRISK (Leutenegger et al. 2011), SIFT, ORB, SURF, and AKAZE detection algorithms.

Table 4. The basic information of the patches (i.e., the number of key points) in the aerial image data set using different feature detectors, including BRISK, SIFT, ORB, SURF, and AKAZE. The suffixes -L and -R denote the left and right image of each image pair, respectively.

| Algorithm | Pair1-L | Pair1-R | Pair2-L | Pair2-R | Pair3-L | Pair3-R |
|---|---|---|---|---|---|---|
| BRISK | 80 737 | 80 320 | 87 846 | 82 228 | 76 303 | 84 040 |
| SIFT | | | 35 458 | 29 317 | 29 828 | 32 050 |
| ORB | | | 80 000 | 80 000 | 79 603 | 80 000 |
| SURF | | | 39 089 | 39 017 | 35 549 | 39 082 |
| AKAZE | 21 708 | 19 965 | 25 024 | 23 142 | 17 602 | 20 571 |

Figures 8, 9, and 10 show the linked matching points obtained with different detectors and descriptors in the real aerial image data set. The number of correspondences is listed in Table 5. We observed that the learned descriptor PPD yields more correspondences than the learned descriptor TFeat and the traditional handcrafted descriptors, including BRISK, SIFT, ORB, SURF, and AKAZE. The performance of the learned descriptor TFeat is better than that of BRISK and SIFT, similar to that of ORB and AKAZE, but worse than that of SURF.

All experiments indicate that the proposed PPD is robust and highly discriminative regardless of which detection algorithm is used to extract the image patches. In Pair1 and Pair2 in particular, the number of correspondences extracted by the proposed descriptor PPD is about twice that of SIFT and BRISK.

Point Matching for Images Under Viewpoint and Illumination Variation

Feature matching is a technique widely used in remote

sensing for image registration and building 3D models of the

Figure 6. Verification, matching, and retrieval results on the HPatches benchmark. The color of the marker indicates the level of geometrical noise in three different data sets: Easy, Hard, and Tough. The type of the marker corresponds to a different setting of the experiments. DIFFSEQ and SAMESEQ indicate that negative patch pairs are from different sequences and the same sequence, respectively. VIEWPT and ILLUM represent the type of sequence.

Figure 7. The workflow of obtaining correspondences from two images.

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

September 2019
