PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

July 2020


SECTORINSIGHT: .com

Education and Professional Development in the Geospatial Information Science and Technology Community

Mozhdeh Shahbazi, PhD, PEng, University of Calgary

How Good is that Gear? Drones versus Surveyors!

The integration of three-dimensional (3D) vision in drones, or unmanned aerial vehicles (UAVs), has contributed a great deal to improving fine-scale mapping and monitoring applications. Passive imaging systems have been the most popular technologies used in this regard. This is mainly due to the availability of off-the-shelf, low-cost, and lightweight digital cameras. Advancements in photogrammetry and computational stereo vision have also fostered this popularity (Abdullah, 2019).

As a survey engineer, a photogrammetric engineer, and a computer-vision scientist, I have given and received many debatable comments about these technologies. A question that is still being debated by many stakeholders is this: can drone photogrammetry result in survey-grade topographic products? The answer to this question cannot be summarized in a single word, as each term used in the question is itself interpretable in several ways. In this column, we take a closer look at this question.

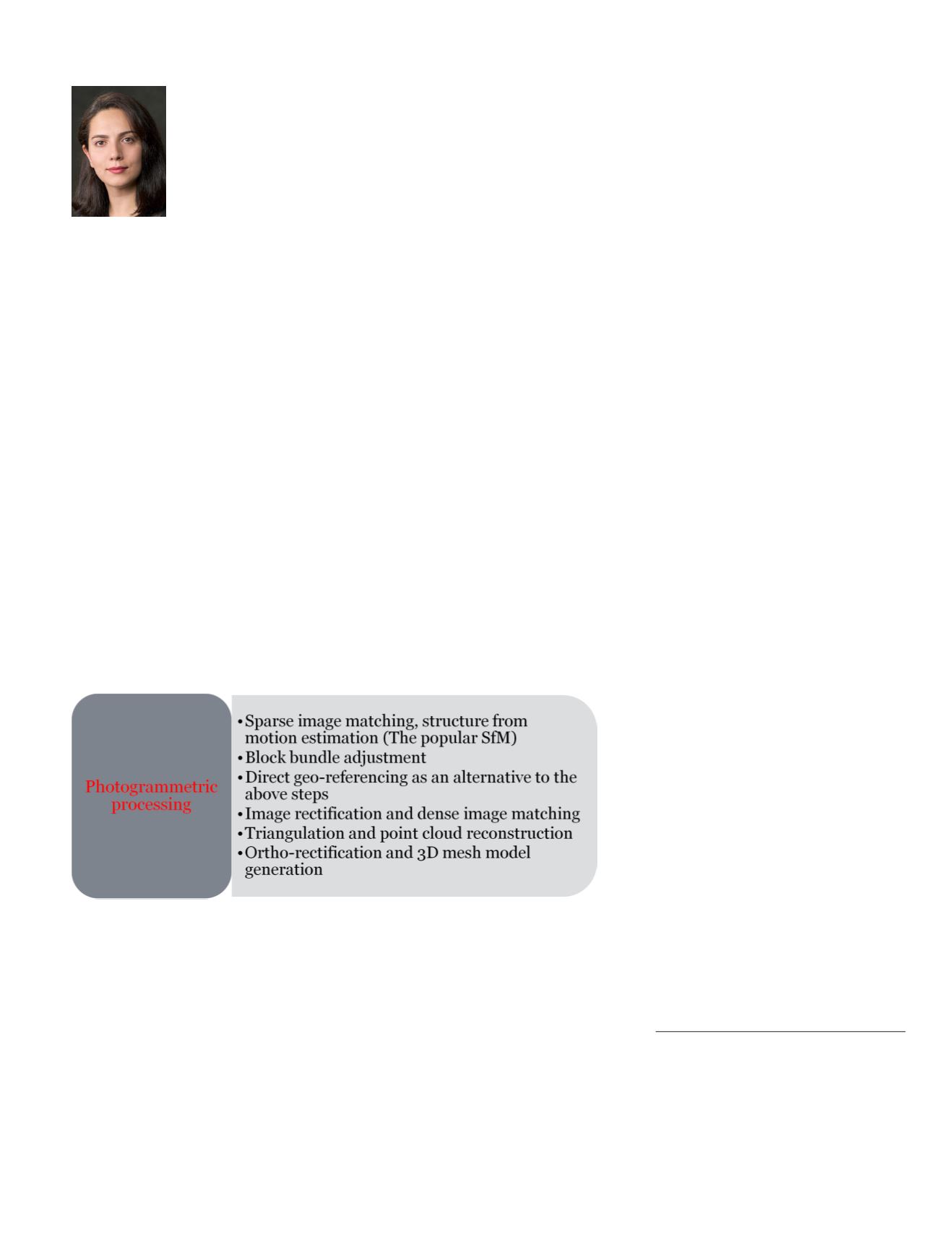

First, we review the main steps involved in turning images into 3D topographic products (Figure 1). This workflow is more or less the backbone of any black-box commercial software or open-source solution available.

Drone Platforms

A conventional drone system for geospatial applications can be broken down into three components: the platform, the navigation system, and the imaging sensor. Regarding the platform, the minimum specifications to consider are the payload capacity, endurance, degree of autonomy, ease of operation, and, last but not least, compliance with various regulations.

Navigation Sensors

GNSS-aided inertial navigation sensors are commonly deployed in drone-photogrammetry systems for two purposes: auto-piloting the platform and, optionally, georeferencing the images. In most systems, an independent navigation system is dedicated to the latter. Georeferencing means determining the external orientation parameters of the images, resolved in the mapping reference coordinate system. It can be performed in three ways: indirect georeferencing (InDG), direct georeferencing (DG), and integrated sensor orientation (ISO).
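
To make the term concrete, the external orientation parameters of one image (often called exterior orientation) are the camera position and three rotation angles in the mapping frame. The sketch below shows how they relate a ground point to its image coordinates through the collinearity condition. The function names, the omega-phi-kappa rotation order, and the sample numbers are illustrative assumptions, not something prescribed by this column; real software may use other conventions and also models lens distortion.

```python
import math


def rotation_matrix(omega, phi, kappa):
    """Image-to-mapping rotation R = Rx(omega) * Ry(phi) * Rz(kappa), angles in radians.

    The rotation order is an assumed convention for this sketch.
    """
    co, so = math.cos(omega), math.sin(omega)
    cp, sp = math.cos(phi), math.sin(phi)
    ck, sk = math.cos(kappa), math.sin(kappa)
    rx = [[1, 0, 0], [0, co, -so], [0, so, co]]
    ry = [[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]]
    rz = [[ck, -sk, 0], [sk, ck, 0], [0, 0, 1]]

    def matmul(a, b):
        return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
                for i in range(3)]

    return matmul(matmul(rx, ry), rz)


def project(ground_point, camera_position, angles, focal_length):
    """Collinearity projection of a ground point into image coordinates.

    ground_point, camera_position: (X, Y, Z) in the mapping frame.
    angles: (omega, phi, kappa) in radians.
    Returns (x, y) in the same unit as focal_length.
    """
    r = rotation_matrix(*angles)
    d = [g - c for g, c in zip(ground_point, camera_position)]
    # Express the camera-to-point vector in the image frame: R^T * d.
    u, v, w = (sum(r[k][i] * d[k] for k in range(3)) for i in range(3))
    return (-focal_length * u / w, -focal_length * v / w)


# Hypothetical nadir image taken 100 m above a point offset by (10 m, 20 m).
x, y = project((10.0, 20.0, 0.0), (0.0, 0.0, 100.0), (0.0, 0.0, 0.0), 0.010)
print(x, y)
```

Georeferencing, in any of the three ways listed above, amounts to estimating the six values passed as `camera_position` and `angles` for every image.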

In InDG, georeferencing is performed by adding the observations of ground control points (GCPs) to the block bundle adjustment. Essential factors in the success of this method include the quality of the GCPs, their number, and their geometric distribution. The accuracy of the GCPs dictates the achievable georeferencing accuracy; the georeferencing accuracy cannot exceed the average GCP accuracy. Georeferencing accuracy should not be confused with the reconstruction accuracy explained below. The only way to measure the georeferencing accuracy is to establish a fair number of well-distributed ground checkpoints. Comparing their absolute measured coordinates with their photo-estimated coordinates yields a measure of georeferencing accuracy. In some commercial software, e.g., Pix4D Mapper, a variable known as GCP error is reported after initial processing. It is worth mentioning that GCP error simply summarizes the difference between the observed coordinates and the adjusted coordinates of the GCPs. High GCP errors can indicate either a gross error or an issue with the block bundle adjustment. However, because the GCPs themselves take part in the adjustment, a low GCP error should by no means be interpreted as high georeferencing accuracy. This is, unfortunately, a common mistake made by service providers when discussing their data quality.
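
The checkpoint comparison described above can be sketched in a few lines. This is a minimal illustration that assumes root-mean-square error (RMSE) as the summary statistic, which the column does not specify; the function names and the coordinates are hypothetical. The checkpoints must be points that were not used as GCPs in the bundle adjustment.

```python
import math


def rmse_per_axis(measured, estimated):
    """RMSE along X, Y, and Z between surveyed and photo-estimated checkpoints.

    measured, estimated: equal-length lists of (X, Y, Z) tuples in the same
    mapping coordinate system and unit.
    """
    n = len(measured)
    if n == 0 or n != len(estimated):
        raise ValueError("need two non-empty lists of equal length")
    squared_sums = [0.0, 0.0, 0.0]
    for m, e in zip(measured, estimated):
        for axis in range(3):
            squared_sums[axis] += (e[axis] - m[axis]) ** 2
    return tuple(math.sqrt(s / n) for s in squared_sums)


def horizontal_and_vertical_rmse(measured, estimated):
    """Combine the X and Y RMSE into one horizontal value; return it with the Z RMSE."""
    rmse_x, rmse_y, rmse_z = rmse_per_axis(measured, estimated)
    return math.hypot(rmse_x, rmse_y), rmse_z


# Hypothetical checkpoints in metres: surveyed versus photo-estimated.
surveyed = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
from_photos = [(0.03, 0.04, 0.10), (10.03, -0.04, -0.10)]
horizontal, vertical = horizontal_and_vertical_rmse(surveyed, from_photos)
print(f"horizontal RMSE = {horizontal:.3f} m, vertical RMSE = {vertical:.3f} m")
```

Running the same computation on the GCPs themselves would reproduce something like the GCP error reported by the software, not the georeferencing accuracy.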

In traditional airborne photogrammetry, the best configuration for GCPs is to set full control points at the corners and along the borders of the site, and height control points every 4–6 models and every 2–4 strips (Figure 2). However, in drone photogram-

Figure 1. Steps in photogrammetric processing.

Photogrammetric Engineering & Remote Sensing, Vol. 86, No. 7, July 2020, pp. 409–410.

0099-1112/20/409–410

© 2020 American Society for Photogrammetry and Remote Sensing

doi: 10.14358/PERS.86.7.409