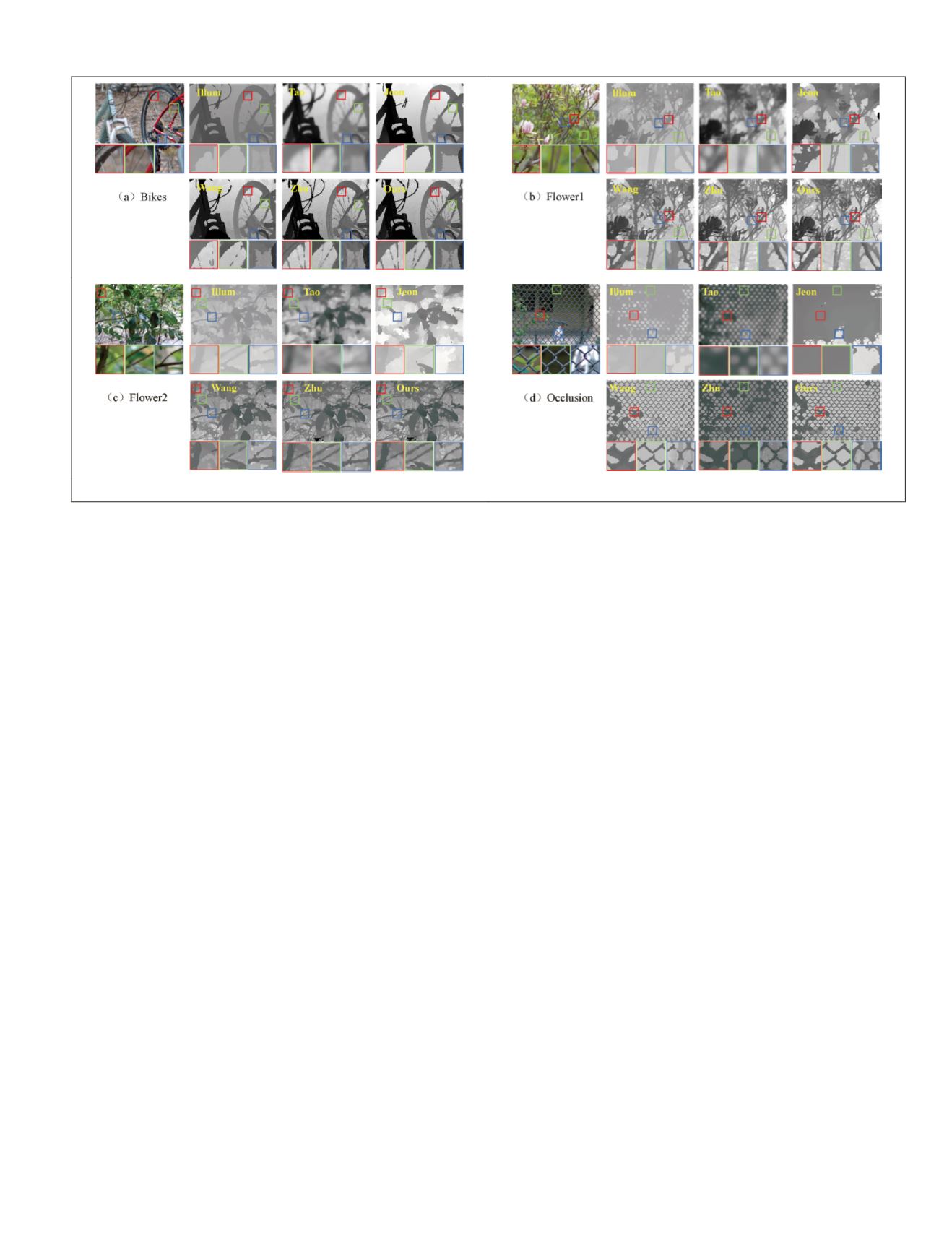

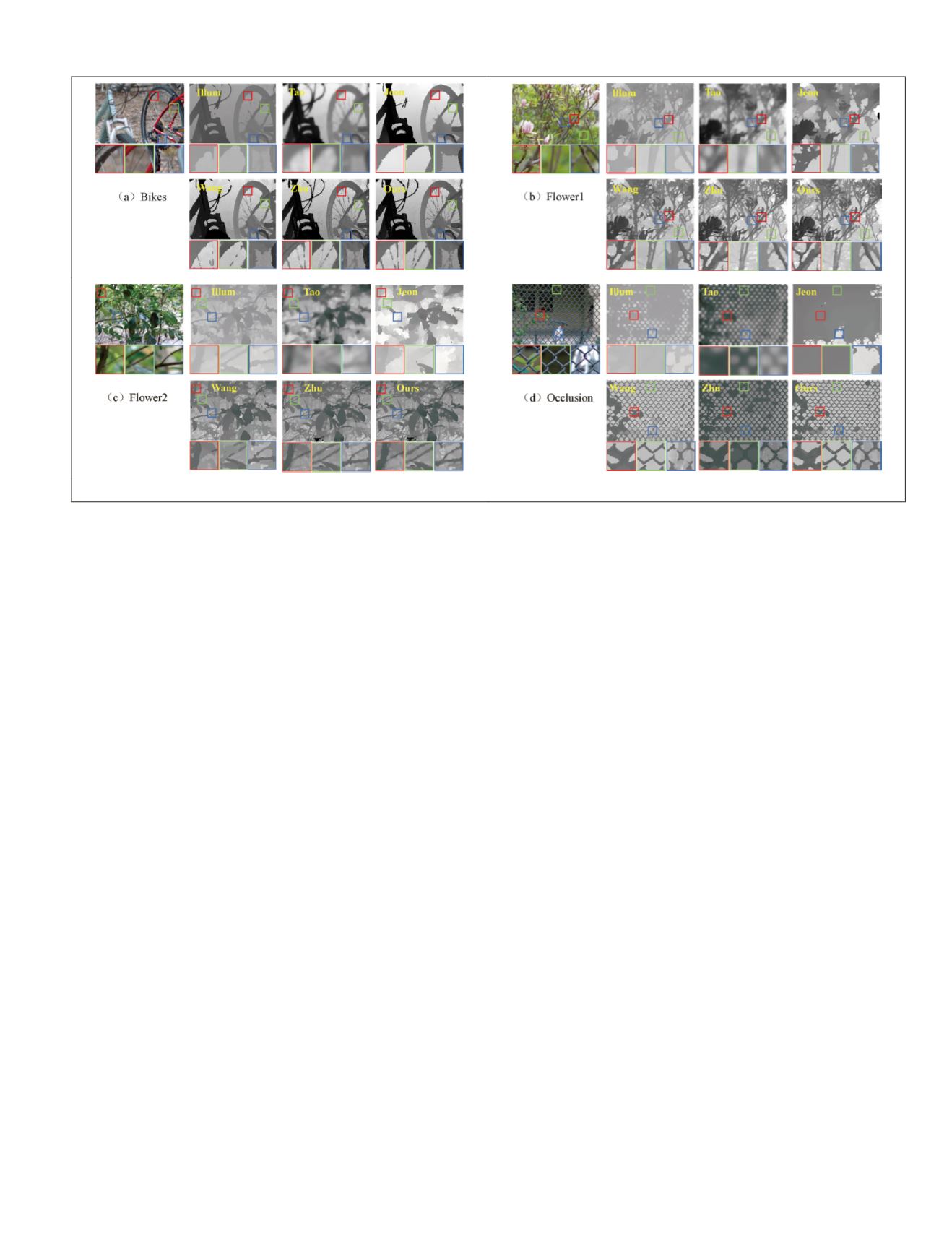

the Lytro Illum camera. The methods of Tao et al. and Jeon et al. still produce severely oversmoothed results and fail to extract the occlusion boundaries. On the whole, the depth maps obtained by the Lytro Illum software and by the methods of T.-C. Wang et al. and Zhu et al. are similar to the depth map obtained by our method. Some heavily occluded areas are also shown enlarged to illustrate the advantages of our method. The enlarged close-up images show that our algorithm recovers finer boundaries than the other methods. For example, the Lytro Illum software and the method of T.-C. Wang et al. reconstruct only a few spokes of the bicycle (Figure 24a); the method of Zhu et al. reconstructs more spokes, but fewer than our method. The Lytro Illum software fails to accurately restore the shape of the wire mesh (Figure 24d). The methods of T.-C. Wang et al. and Zhu et al. can reconstruct the shape of the wire mesh, but the T.-C. Wang et al. method breaks some wire intersections, and the Zhu et al. method produces oversmoothed results in which some wires cannot be correctly distinguished from the background. In comparison, our proposed method provides a more accurate depth map.

Conclusion

In this article, we proposed an algorithm to handle complex occlusion in light-field depth estimation. Occluded pixels are effectively identified using a refocusing method. For each occluded pixel, the unoccluded views are accurately selected using an adaptive unoccluded-view identification method. The initial depth map is then obtained by computing the cost volumes over the unoccluded views, and the final depth is regularized using an MRF with occlusion cues. The advantages of our proposed algorithm over conventional state-of-the-art algorithms are demonstrated on various synthetic data sets as well as real-scene images. Compared with four algorithms, namely Tao et al. (2013), Jeon et al. (2015), T.-C. Wang et al. (2016), and Zhu et al. (2017), our proposed method reduces the RMS error by about 15% on the synthetic data sets and by about 9% on real-scene images. Compared with the top-ranked conventional method, OBER-cross+ANP, our method has a slightly higher mean square error, and it is inferior to the top-ranked deep-learning methods. However, our method is a good choice when no graphics processing unit is available to train a network and a disparity map is needed quickly. Because a light-field camera can reconstruct detailed three-dimensional models, it can be used in the comprehensive inspection of industrial mechanical parts, biomedicine, face recognition, and the creation of three-dimensional models for games and movies. The proposed method can be valuable in these applications.
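The cost-volume step summarized in the conclusion can be illustrated with a small sketch. It is not the authors' implementation. It assumes a 2-D epipolar-plane slice `epi[u][x]` with the central view at the middle index, a variance cost over refocused samples, and a per-pixel list of unoccluded views supplied as input. The hypothetical `unoccluded` argument stands in for the adaptive unoccluded-view identification step, which is not reproduced here, and all function names are illustrative.

```python
from statistics import pvariance


def sample(row, x):
    """Linearly interpolate a 1-D scanline at real-valued position x, clamping at the borders."""
    x = min(max(x, 0.0), len(row) - 1.0)
    i = int(x)
    if i == len(row) - 1:
        return row[i]
    t = x - i
    return (1.0 - t) * row[i] + t * row[i + 1]


def cost_volume(epi, disparities, unoccluded):
    """Occlusion-aware refocus cost for a 2-D epipolar-plane slice (illustrative sketch).

    epi[u][x]      intensity of pixel x in view u (central view at the middle index)
    disparities    candidate disparities in pixels per view step
    unoccluded[x]  non-empty list of view indices assumed unoccluded for pixel x
    Returns cost[x][k]: variance of the refocused samples over the unoccluded views.
    """
    uc = (len(epi) - 1) / 2.0  # index of the central view
    cost = []
    for x in range(len(epi[0])):
        row_cost = []
        for d in disparities:
            # A point at disparity d seen at x in the central view appears at x + d*(u - uc) in view u.
            samples = [sample(epi[u], x + d * (u - uc)) for u in unoccluded[x]]
            row_cost.append(pvariance(samples))
        cost.append(row_cost)
    return cost


def winner_take_all(cost, disparities):
    """Initial depth: the disparity with the lowest cost at each pixel (first one on ties)."""
    return [disparities[min(range(len(c)), key=c.__getitem__)] for c in cost]


if __name__ == "__main__":
    # Toy scene: textured background at disparity 0 in five views, plus a bright,
    # hand-placed occluder that covers pixel 4 in views 3 and 4 only.
    bg = [5.0, 1.0, 9.0, 3.0, 7.0, 2.0, 8.0, 4.0]
    epi = [list(bg) for _ in range(5)]
    epi[3][4] = epi[4][4] = 100.0
    disp = [-1.0, 0.0, 1.0]
    all_views = [list(range(5)) for _ in bg]
    unocc = [list(range(5)) for _ in bg]
    unocc[4] = [0, 1, 2]  # exclude views 3 and 4 for the occluded pixel
    c_all = cost_volume(epi, disp, all_views)
    c_un = cost_volume(epi, disp, unocc)
    print(winner_take_all(c_all, disp)[4], winner_take_all(c_un, disp)[4])  # prints: -1.0 0.0
```

In the toy scene, the occluder dominates the all-view variance at the true disparity 0, so the winner-take-all estimate at pixel 4 is wrong. Restricting the cost to views 0 to 2 gives zero cost at disparity 0. Variance is only one possible matching cost; the sketch uses it because it is the simplest refocus-consistency measure.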
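The final regularization step can be sketched in a similarly hedged way. The sketch below minimizes a 1-D MRF energy made of a data term taken from a cost volume plus a truncated-linear smoothness term whose weight is reduced between pixels flagged as lying across an occlusion boundary. It uses iterated conditional modes (ICM), a simple local optimizer swapped in here for brevity. It is not necessarily what the authors used; the graph-cut energy minimization of Boykov, Veksler and Zabih (2001) in the reference list handles such energies much better. The parameters `lam`, `trunc`, and `edge_weight` and the `boundary` cue are hypothetical.

```python
def mrf_icm(cost, disparities, boundary, lam=1.0, trunc=2.0, edge_weight=0.1, iters=10):
    """Regularize a 1-D depth scanline with ICM (illustrative sketch, not the paper's optimizer).

    cost[x][k]    data cost of disparities[k] at pixel x
    boundary[x]   True if a (hypothetical) occlusion boundary lies between pixels x and x + 1
    Pairwise term between x and x + 1: lam * w * min(|d_a - d_b|, trunc),
    with w = edge_weight across a flagged boundary and 1 elsewhere.
    """
    n, k_count = len(cost), len(disparities)
    # Start from the winner-take-all labels.
    labels = [min(range(k_count), key=cost[x].__getitem__) for x in range(n)]

    def pair(x_left, ka, kb):
        w = edge_weight if boundary[x_left] else 1.0
        return lam * w * min(abs(disparities[ka] - disparities[kb]), trunc)

    for _ in range(iters):
        changed = False
        for x in range(n):
            def local(k):
                # Energy of label k at pixel x with both neighbours held fixed.
                e = cost[x][k]
                if x > 0:
                    e += pair(x - 1, labels[x - 1], k)
                if x < n - 1:
                    e += pair(x, k, labels[x + 1])
                return e

            best = min(range(k_count), key=local)
            if best != labels[x]:
                labels[x] = best
                changed = True
        if not changed:  # ICM has reached a local minimum
            break
    return [disparities[k] for k in labels]


if __name__ == "__main__":
    disp = [0.0, 1.0, 2.0]
    obs = [0.0, 2.0, 0.0, 2.0, 2.0, 2.0]  # pixel 1 is a noisy outlier
    cost = [[abs(d - o) for d in disp] for o in obs]
    boundary = [False, False, True, False, False]  # depth edge between pixels 2 and 3
    print(mrf_icm(cost, disp, boundary))  # prints: [0.0, 0.0, 0.0, 2.0, 2.0, 2.0]
```

The outlier at pixel 1 is smoothed away, while the depth edge between pixels 2 and 3 survives. Lowering the smoothness weight across flagged boundaries is one simple way to let occlusion cues keep such edges sharp when `lam` is large.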

Acknowledgments

This research is funded by the National Key Research and Development Program of China (project 2018YFB1305004) and the National Natural Science Foundation of China (project 41471388).

References

Anzai, Y. 2012. *Pattern Recognition and Machine Learning*. Boston: Elsevier.

Boykov, Y., O. Veksler and R. Zabih. 2001. Fast approximate energy minimization via graph cuts. *IEEE Transactions on Pattern Analysis and Machine Intelligence* 23 (11):1222–1239.

Chen, C., H. Lin, Z. Yu, S. B. Kang and J. Yu. 2014. Light field stereo matching using bilateral statistics of surface cameras. Pages 1518–1525 in *2014 IEEE Conference on Computer Vision and Pattern Recognition*, held in Columbus, Ohio, 23–28 June 2014. Edited by J. Editor. Los Alamitos, Calif.: IEEE Computer Society.

Dong, F., S.-H. Ieng, X. Savatier, R. Etienne-Cummings and R. Benosman. 2013. Plenoptic cameras in real-time robotics. *The International Journal of Robotics Research* 32 (2):206–217.

Dudoit, S. and J. Fridlyand. 2002. A prediction-based resampling method for estimating the number of clusters in a dataset. *Genome Biology* 3 (7):research0036.1.

Frey, B. J. and D. Dueck. 2007. Clustering by passing messages between data points. *Science* 315 (5814):972–976.

Figure 24. The depth map for real-scene images.

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

July 2020

455