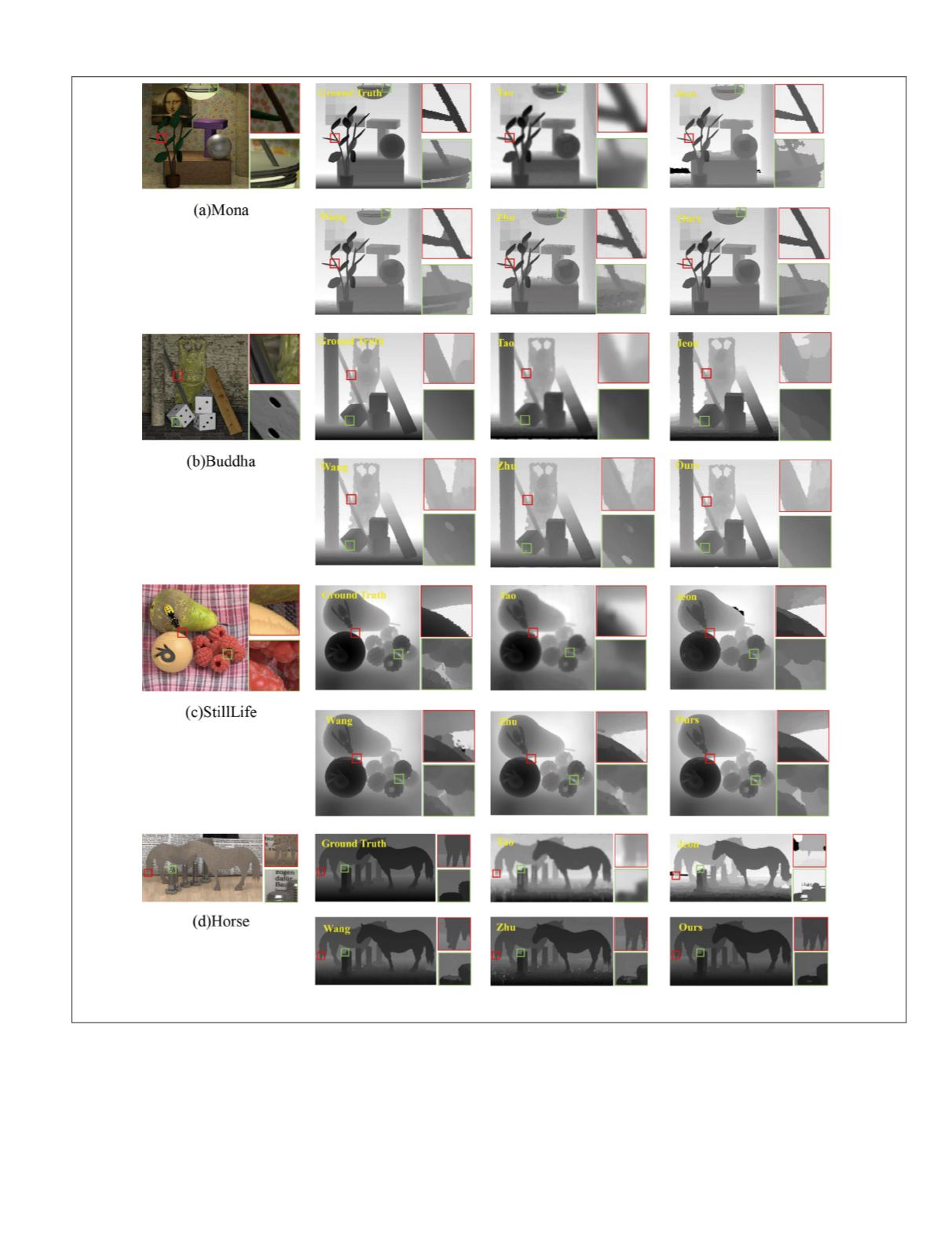

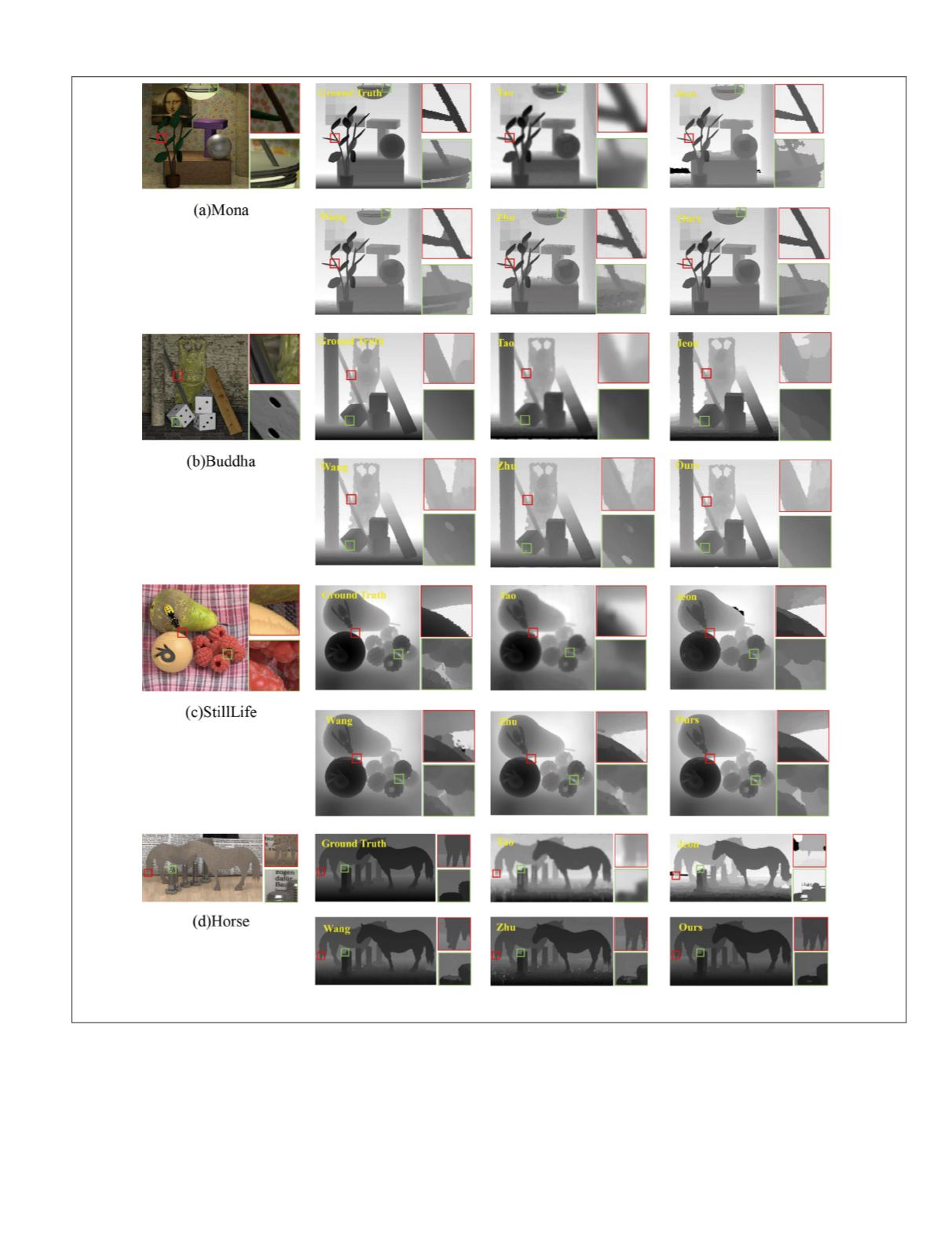

occluded pixels in other algorithms, in which only a selected subset of views is used to estimate the depth of occluded pixels, which reduces depth accuracy. In our method, by contrast, the edge pixels are removed from the set of occluded pixels, so all views are used to estimate their depth, which improves depth accuracy. In addition, our method selects more accurate unoccluded views and eliminates the aliasing effect of edge pixels in the angular patch by removing the views that correspond to the edge pixels in the spatial patch.
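The view-selection rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The function names `select_views` and `photo_consistency_cost` are hypothetical. The sketch assumes a one-to-one mapping in which view (u, v) of the N × N angular patch corresponds to pixel (u, v) of the N × N spatial patch. It also assumes that the unoccluded-view mask is already available and that the angular-patch variance serves as the photo-consistency cost.

```python
import numpy as np


def select_views(occluded: bool, is_edge: bool,
                 unoccluded_views: np.ndarray,
                 spatial_edge_patch: np.ndarray) -> np.ndarray:
    """Return an N x N boolean mask of the views used for one pixel's depth cost.

    Hypothetical helper. Assumes view (u, v) in the angular patch corresponds
    to pixel (u, v) in the spatial patch.
    """
    unoccluded_views = np.asarray(unoccluded_views, dtype=bool)
    spatial_edge_patch = np.asarray(spatial_edge_patch, dtype=bool)
    if not occluded or is_edge:
        # Non-occluded pixels, and edge pixels (which are removed from the
        # occluded set), use every view.
        return np.ones(unoccluded_views.shape, dtype=bool)
    # Occluded, non-edge pixel: keep the unoccluded views, then drop the views
    # whose corresponding spatial-patch pixel is an edge pixel.
    return unoccluded_views & ~spatial_edge_patch


def photo_consistency_cost(angular_patch: np.ndarray, views: np.ndarray) -> float:
    """Variance of the angular patch over the selected views (lower is better).

    Variance is an assumed cost here, chosen for illustration only.
    """
    selected = np.asarray(angular_patch, dtype=float)[views]
    if selected.size == 0:
        return float("inf")  # no usable views at this candidate depth
    return float(np.var(selected))
```

For example, take a 3 × 3 angular patch whose right column is contaminated by an occluder. If the spatial patch also marks pixel (0, 0) as an edge, an occluded non-edge pixel keeps five views and gets a zero cost. Treating the same pixel as an edge pixel restores all nine views.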

Experimental results show that our proposed method handles complex occlusions effectively (e.g., the branches in Figure 18a and the close-up in the red box of Figure 18c).

Figure 18. The depth maps of synthetic data sets: (a) Mona, (b) Buddha, (c) StillLife, and (d) Horse.

The quantitative comparisons of the depth maps on the Wanner et al. data sets are shown in Figure 19. As the line charts show, the errors of every method except that of Tao et al. (2013) are concentrated at small values, and this trend is most pronounced for our method. In addition, the number of large errors in the error line charts obtained by our

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

July 2020

451