sensor artifact removal, and atmospheric compensation and reflectance conversion (Kurz et al., 2013; Okyay et al., 2016). For this purpose, in-house preprocessing routines were developed using MATLAB 2016b (The MathWorks, Inc.). The dark current signal was removed using an average frame obtained from the dark image collected during data acquisition. Along with the manufacturer-provided non-uniformity correction (NUC), dark frame subtraction removed most of the systematic striping in the imagery. Image artifacts associated with the poor performance of individual elements in the 2D sensor array and push-broom scanning were identified on each image band and replaced by the mean of adjacent lines in the spatial domain. Brightness gradients caused mainly by the cylindrical geometry of the panoramic images were corrected for each spectral band as outlined in Okyay et al. (2016). For atmospheric compensation and reflectance conversion, Empirical Line Calibration (ELC) (Smith and Milton, 1999) was used, employing two calibration panels with ~3% and ~99% reflectance. Some of the image artifacts caused by bad pixels on the sensor array can only be seen after the reflectance conversion. Thus, following the reflectance conversion, bad pixels were identified in the spectral domain and corrected using linear interpolation. Subsequently, prior to spatial image co-registration, the VNIR image was down-sampled, i.e., the size of the VNIR image was decreased, to match the dimensions of the SWIR image.
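As an illustration of the dark frame subtraction and two-panel ELC steps described above, the following is a minimal Python sketch. It is not the in-house MATLAB code used in the study. The function names, the array layout (lines, samples, bands), and the synthetic numbers are assumptions made for the example; in practice the panel spectra would be the mean dark-subtracted signal over the pixels covering each calibration panel.

```python
import numpy as np

def subtract_dark(raw, dark):
    """Subtract the average dark frame from every scan line.

    raw:  (lines, samples, bands) raw digital numbers (assumed layout)
    dark: (dark_lines, samples, bands) dark image from the same acquisition
    """
    dark_mean = dark.mean(axis=0)  # average dark frame, (samples, bands)
    return raw.astype(np.float64) - dark_mean

def empirical_line(cube, dn_dark, dn_bright, rho_dark=0.03, rho_bright=0.99):
    """Two-point empirical line: per-band linear map from signal to reflectance.

    dn_dark, dn_bright: (bands,) mean panel signal for the dark and bright
    panels; rho_dark, rho_bright: their nominal reflectances.
    """
    gain = (rho_bright - rho_dark) / (dn_bright - dn_dark)
    offset = rho_dark - gain * dn_dark
    return cube * gain + offset

# Synthetic example: 4 lines x 5 samples x 3 bands with known reflectance.
rng = np.random.default_rng(0)
true_rho = rng.uniform(0.05, 0.9, size=(4, 5, 3))
band_gain = np.array([1000.0, 1500.0, 800.0])  # signal per unit reflectance
path_signal = np.array([20.0, 35.0, 10.0])     # additive per-band term
raw = true_rho * band_gain + path_signal + 100.0  # 100 = dark level
dark = np.full((10, 5, 3), 100.0)

signal = subtract_dark(raw, dark)
rho = empirical_line(signal,
                     dn_dark=0.03 * band_gain + path_signal,
                     dn_bright=0.99 * band_gain + path_signal)
print(np.allclose(rho, true_rho))
```

Because the line is fitted through only two panels, the sketch assumes a linear sensor response between the ~3% and ~99% targets.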

Spatial Image Co-registration

The homologous points between the ground-based hyperspectral images needed for spatial co-registration were automatically extracted using a feature-based image matching algorithm: the Scale-Invariant Feature Transform (SIFT) (Lowe, 2004). The implementation of SIFT used in this study (Wu, 2007) is derived from the original work of Lowe (2004). The SIFT algorithm has been widely used in remote sensing applications due to its robustness to variable geometric and radiometric conditions (Khan et al., 2011; Wessel et al., 2007). Although a brief overview of the SIFT algorithm is provided here, it is by no means an exhaustive discussion. For further details of the SIFT algorithm and its parameters, the reader is referred to the original work of Lowe (1999, 2004), which is considered to be the best material on SIFT.

SIFT initially identifies potential interest points (keypoints) on each input image separately. This is achieved by searching for local extrema over Difference of Gaussians (DoG) images obtained by differencing adjacent images progressively blurred with Gaussians. However, not all keypoints will be stable, such as those that have low contrast or are poorly localized (i.e., along edges). If the intensity of a keypoint is less than a threshold, called the contrast threshold, it is considered potentially unstable and rejected. Similarly, if the ratio between the principal curvatures of a keypoint is greater than a threshold, called the edge threshold, it is considered poorly localized and rejected. While these parameters are rather arbitrary, as per Lowe (2004), a contrast threshold of 0.03, an edge threshold of 10, and a nearest neighbor ratio of 0.8 were used in this study. Each stable keypoint is then assigned a principal orientation based on local image gradient directions. Subsequently, an invariant keypoint descriptor, which is essentially a spatial histogram of image gradient magnitude and orientation represented as a 128-dimensional feature vector, is created at each keypoint. Following their derivation, distinctive keypoints in individual images are matched based on the minimum Euclidean distance between the invariant descriptors (i.e., nearest neighbor search). However, not all keypoints will have correct matches, and thus the keypoints with incorrect matches need to be discarded. For this, the ratio of the distance to the closest match to the distance to the second-closest match, called the nearest neighbor ratio, is calculated. If the ratio is greater than a threshold, the match is considered incorrect and eliminated. Lowe (2004) has shown that a nearest neighbor ratio threshold of 0.8 eliminates 90% of incorrect matches while eliminating less than 5% of correct matches. Any outliers, i.e., incorrect matches, that still remained among the matching points identified by SIFT were further eliminated using the Random Sample Consensus (RANSAC) algorithm (Fischler and Bolles, 1981), leaving only the inlier points. RANSAC is an iterative algorithm to estimate a mathematical model from a data set containing outliers. As RANSAC works by identifying the outliers in a data set, it can also be interpreted as an outlier detection method (Strutz, 2011).
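The two filtering stages described above, the nearest neighbor ratio test and RANSAC, can be sketched in Python as follows. This is a simplified illustration rather than the SIFT implementation of Wu (2007) used in the study. The function names are hypothetical, and the text does not state which model RANSAC estimated; an affine model, 500 iterations, and a 1-pixel inlier tolerance are assumptions made for the example.

```python
import numpy as np

def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Match descriptors by nearest neighbor and keep a match only if
    (closest distance) / (second-closest distance) <= ratio."""
    matches = []
    for i, d in enumerate(desc_a):
        dist = np.linalg.norm(desc_b - d, axis=1)  # Euclidean distances
        nearest, second = np.argsort(dist)[:2]
        if dist[nearest] <= ratio * dist[second]:
            matches.append((i, int(nearest)))
    return matches

def fit_affine(src, dst):
    """Least-squares 2x3 affine matrix mapping src (N, 2) onto dst (N, 2)."""
    design = np.hstack([src, np.ones((len(src), 1))])
    coeffs, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return coeffs.T

def ransac_affine(src, dst, n_iter=500, tol=1.0, seed=0):
    """Return (model, inlier_mask) for the affine model with most inliers."""
    rng = np.random.default_rng(seed)
    design = np.hstack([src, np.ones((len(src), 1))])
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iter):
        sample = rng.choice(len(src), size=3, replace=False)  # minimal set
        model = fit_affine(src[sample], dst[sample])
        residual = np.linalg.norm(design @ model.T - dst, axis=1)
        inliers = residual < tol
        if inliers.sum() > best.sum():
            best = inliers
    # Refit on all inliers of the best hypothesis.
    return fit_affine(src[best], dst[best]), best

# Example: 30 synthetic point pairs, the first 8 of which are bad matches.
rng = np.random.default_rng(1)
src = rng.uniform(0, 100, size=(30, 2))
true_model = np.array([[0.9, -0.1, 4.0], [0.1, 0.9, -2.0]])
dst = src @ true_model[:, :2].T + true_model[:, 2]
dst[:8] += rng.uniform(20, 60, size=(8, 2))
model, inliers = ransac_affine(src, dst)
print(int(inliers.sum()), "inliers kept")
```

The ratio test is written as a comparison against `ratio * dist[second]` rather than as a division, which avoids dividing by zero when two descriptors coincide.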

Although the SIFT algorithm is robust to radiometric changes, like any other feature-based matching algorithm it could further benefit from the use of images acquired at similar wavelengths (Schwind et al., 2014; Sima and Buckley, 2013). However, the low signal-to-noise ratio of the VNIR image bands within the overlapping spectral range (briefly discussed below) causes speckles in the images, which subsequently affect keypoint identification and image matching. In an attempt to evaluate the effect of input image selection on image matching, four independent runs of SIFT were performed using the same parameters but different input images. Once the homologous points were extracted, the spatial image co-registration was performed in ENVI version 5.3 (Harris Geospatial, Boulder, Colorado) using both affine and first-order polynomial transformations. The affine transformation accounts for rotation, scaling, and translation (RST) but does not allow for shearing, whereas the polynomial transformation accommodates image shearing in addition to rotation, scaling, and translation (ENVI User Guide, 2017). Thus, in order to account for potential shearing in the images, the first-order polynomial transformation was used in addition to the affine transformation.
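The difference between the two transformations can be illustrated with a small least-squares sketch in Python. This is not ENVI's implementation. The four-parameter RST model and the six-parameter first-order polynomial of the form x' = a0 + a1·x + a2·y are assumed forms chosen for the example, and ENVI's exact formulation may differ (for instance, by including additional terms). The function names and the synthetic points are likewise hypothetical.

```python
import numpy as np

def fit_rst(src, dst):
    """Least-squares rotation, scaling, and translation (4 parameters).

    Assumed model: x' = a*x - b*y + tx,  y' = b*x + a*y + ty
    """
    x, y = src[:, 0], src[:, 1]
    zeros, ones = np.zeros_like(x), np.ones_like(x)
    design = np.vstack([
        np.column_stack([x, -y, ones, zeros]),  # rows for x'
        np.column_stack([y, x, zeros, ones]),   # rows for y'
    ])
    target = np.concatenate([dst[:, 0], dst[:, 1]])
    a, b, tx, ty = np.linalg.lstsq(design, target, rcond=None)[0]
    return np.array([[a, -b, tx], [b, a, ty]])

def fit_poly1(src, dst):
    """Least-squares first-order polynomial (6 parameters), as a 2x3 matrix."""
    design = np.column_stack([src, np.ones(len(src))])
    return np.linalg.lstsq(design, dst, rcond=None)[0].T

def rmse(model, src, dst):
    """Root-mean-square residual distance of the mapped points."""
    pred = src @ model[:, :2].T + model[:, 2]
    return float(np.sqrt(np.mean(np.sum((pred - dst) ** 2, axis=1))))

# Synthetic homologous points related by a shear plus a translation.
rng = np.random.default_rng(3)
src = rng.uniform(0, 100, size=(20, 2))
shear = np.array([[1.0, 0.2, 5.0], [0.0, 1.0, -3.0]])
dst = src @ shear[:, :2].T + shear[:, 2]

print("RST RMSE:  ", rmse(fit_rst(src, dst), src, dst))
print("poly1 RMSE:", rmse(fit_poly1(src, dst), src, dst))
```

On sheared points the four-parameter model leaves a systematic residual, while the six-parameter model fits the points to within numerical precision, which is the motivation given above for running both.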

Spectral Concatenation

Proper spatial co-registration of the ground-based hyperspectral images should, in theory, provide continuous VNIR+SWIR spectra that allow a more complete spectral analysis. Unfortunately, in reality this is an overly optimistic expectation, due mainly to non-optimal sensor properties. Dissimilar spectral performance of the hyperspectral cameras hampers the spectral concatenation of VNIR and SWIR images. A preliminary analysis of image spectra showed a large increase in noise towards the longer-wavelength end of the VNIR camera spectrum, caused by decreasing sensitivity of the camera sensor (Figure 2). The noise at wavelengths longer than approximately 920 nm becomes more prominent and potentially prohibitive for spectral analysis (see Figure 2); therefore, image bands at these wavelengths were excluded from further analysis (see Figure 2). In addition, due to the relatively poor spectral

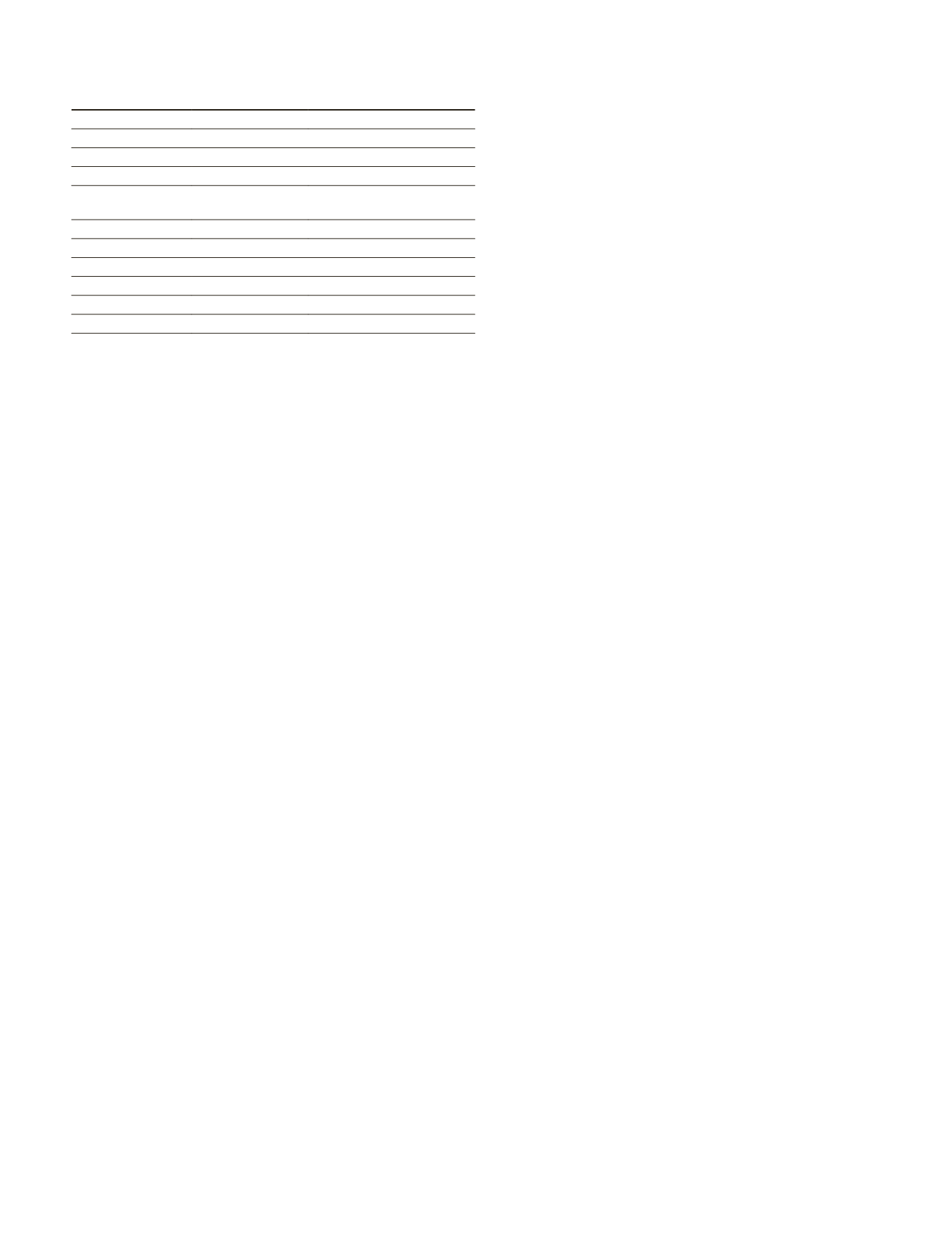

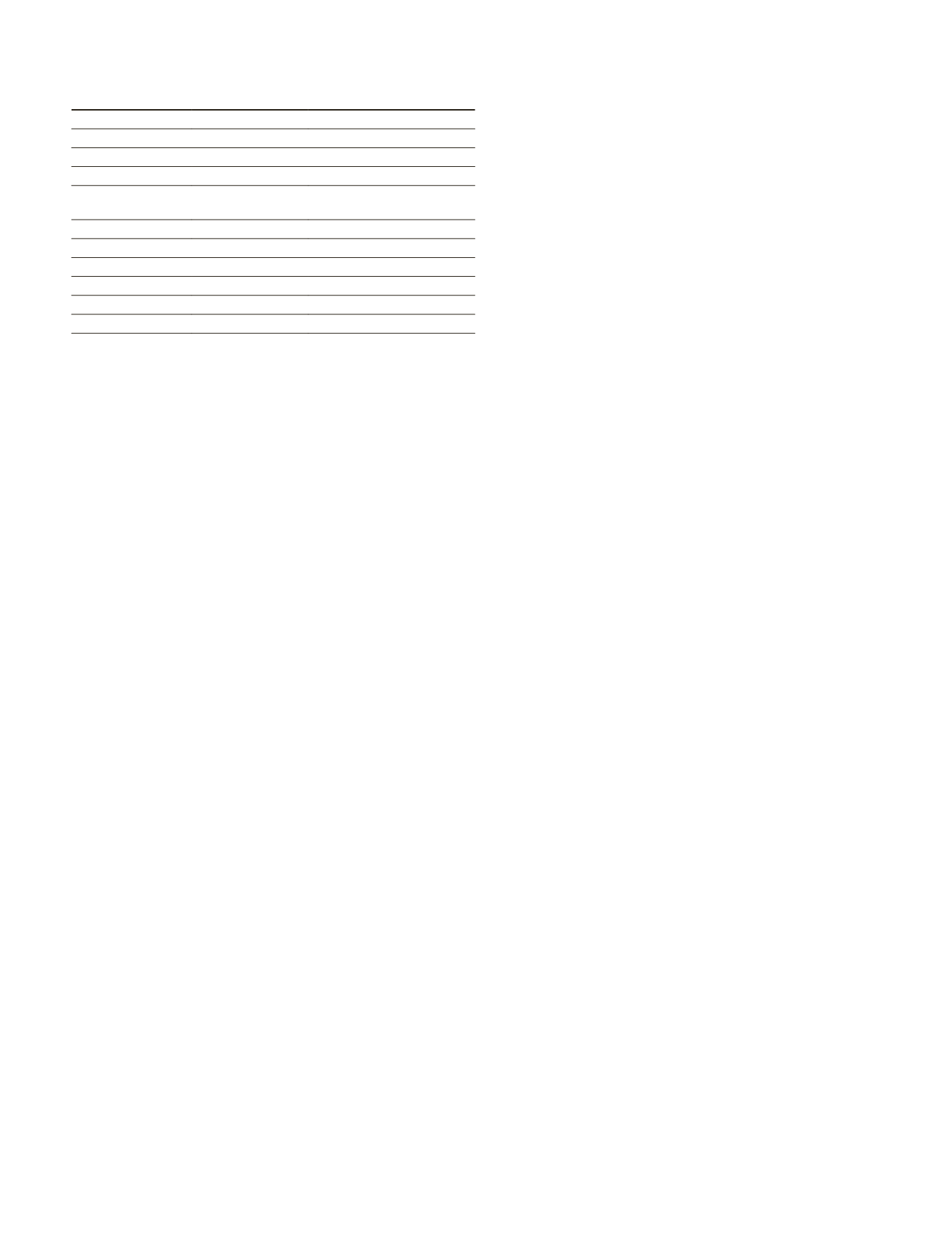

Table 1. Specifications of the VNIR and SWIR cameras from SpecIm, Finland.

| | VNIR Camera | SWIR Camera |
|---|---|---|
| Spectrograph | ImSpector V10E | ImSpector N25E |
| Spectral range | 400 – 1000 nm | 900 – 2500 nm |
| Spectral sampling | 0.72 – 5.8 nm ¹ | 6.3 nm |
| Sensor | Charge-coupled device (CCD) | HgCdTe (MCT) |
| Spatial dimension | up to 1600 pixels ² | 320 pixels |
| Spectral dimension | up to 840 pixels ¹ | 256 pixels |
| Pixel pitch | 7.4 µm | 30 µm |
| Digitization | 12-bit | 14-bit |
| Frame rate | up to 120 fps | up to 100 fps |

¹ Adjustable by spectral binning; ² Adjustable by spatial binning

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

December 2018

783