approaches commonly rely on either pose-graph optimization (Kümmerle et al. 2011) or bundle adjustment (Lourakis and Argyros 2009) to minimize reprojection errors across frames. Dai et al. (2017) proposed a method called BundleFusion, in which bundle adjustment (BA) was carried out from the local to the global level and a sparse-to-dense alignment strategy was used to refine a coarse 3D model into a dense one. However, the SLAM developments mentioned above have mostly been considered in computer vision applications, and few studies have applied RGB-D SLAM to 3D mapping, mainly because of the sensors' limited measuring scope and lower data quality. Steinbrucker et al. (2013) proposed a large-scale multiresolution method to reconstruct indoor maps from RGB-D sequences. Chow et al. (2014) introduced a mapping system that integrated a mobile 3D light detection and ranging system with two Kinect sensors and an inertial measurement unit to map indoor environments. Tang et al. (2016) carried out precise calibration of the RGB-D sensor to ac

ping. However, these improvements

and were not related to measuring s

improve the feasibility and practica

sors, the measuring scope should be

Our work fills this gap.
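The reprojection error that bundle adjustment minimizes can be made concrete with a short sketch. It assumes an ideal pinhole camera without lens distortion, a single shared intrinsic matrix K, and world-to-camera poses (R, t); the function names are hypothetical and are not taken from any of the cited systems.

```python
import numpy as np

def project(K, R, t, X):
    """Ideal pinhole projection of world point X (3,) to pixel coordinates (2,).

    K: 3x3 intrinsic matrix; R, t: world-to-camera rotation and translation.
    """
    Xc = R @ X + t            # point expressed in camera coordinates
    uvw = K @ Xc              # homogeneous pixel coordinates
    return uvw[:2] / uvw[2]   # perspective division

def reprojection_error(K, poses, points, observations):
    """Sum of squared reprojection errors over all observations.

    observations: iterable of (camera_index, point_index, observed_pixel).
    """
    total = 0.0
    for cam_idx, pt_idx, uv in observations:
        R, t = poses[cam_idx]
        r = project(K, R, t, points[pt_idx]) - np.asarray(uv, dtype=float)
        total += float(r @ r)
    return total

K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
poses = [(np.eye(3), np.zeros(3))]
points = [np.array([0.0, 0.0, 2.0])]
print(reprojection_error(K, poses, points, [(0, 0, (321.0, 240.0))]))
```

BA adjusts poses and points jointly to drive this sum down; a full system would hand the per-observation residuals to a nonlinear least-squares solver rather than summing them by hand.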

The SfM method is an image-based algorithm used to estimate camera poses, scene geometry, and orientation from RGB image sequences (Snavely et al. 2008; Westoby et al. 2012). In SfM, these quantities are estimated simultaneously with a highly redundant, iterative BA procedure based on features automatically extracted from multiple overlapping images (Snavely 2008). Because SfM does not rely on depth measurements from sensors, its valid range is typically greater than that of RGB-D sensors. A variety of SfM strategies have been proposed, including incremental (Frahm et al. 2010), hierarchical (Gherardi et al. 2010), and global approaches (Crandall et al. 2011). Moreover, many well-known open-source programs, such as VisualSfM (Wu 2011), Bundler (Snavely et al. 2006), and COLMAP (Schonberger and Frahm 2016), can be used to implement SfM and MVS. However, SfM requires not only extensive overlap between the input images of each stereo pair but also rich texture in the overlapping regions to ensure the quality of the reconstructed geometry. In addition, a 3D model from SfM is scale-free, that is, defined only up to an unknown global scale, and therefore cannot be used directly for mapping.
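The scale ambiguity of SfM can be checked numerically. In the sketch below (hypothetical names, ideal pinhole camera assumed), multiplying both a scene point and the camera translation by the same factor leaves the projected pixel unchanged, so image observations alone cannot fix the metric scale of the reconstruction.

```python
import numpy as np

def project(K, R, t, X):
    """Ideal pinhole projection of world point X into pixel coordinates."""
    Xc = R @ X + t
    uvw = K @ Xc
    return uvw[:2] / uvw[2]

def rotation_z(theta):
    """Rotation matrix about the z-axis by angle theta (radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def projections_under_scale(K, R, t, X, scales):
    """Project X after scaling both the point and the camera translation."""
    return [project(K, R, s * t, s * X) for s in scales]

K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
R = rotation_z(0.1)
t = np.array([0.2, -0.1, 0.5])
X = np.array([0.3, 0.4, 3.0])
scales = (0.5, 1.0, 7.3)

# Every scale yields the same pixel: K(R sX + s t) = s K(RX + t),
# and the factor s cancels in the perspective division.
for s, uv in zip(scales, projections_under_scale(K, R, t, X, scales)):
    print(f"scale {s}: pixel {uv}")
```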

Recent advances in deep learning have produced promising results on these issues. Such innovations include SE3-Nets (Byravan and Fox 2017), the 3D image interpreter (Wu et al. 2016), the depth convolutional neural network (CNN) (Garg et al. 2016), and SfM-Net (Vijayanarasimhan et al. 2017). Although these state-of-the-art approaches have improved the robustness of SfM and the quality of its results, a gap remains in obtaining the properties that mapping requires. Deep learning methods also require a large number of training samples and high-end computing hardware (LeCun et al. 2015). Taking the characteristics of offline computation into account, we introduce additional depth information from the SLAM results into the BA to improve SfM. We then carry out a scale-adaptive registration to merge the point clouds from the depth sensor at short range and from the RGB image sequences at long range, generating enhanced and extended 3D models.
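One way depth information can constrain the BA is to append a metric depth residual to each reprojection residual. The sketch below is a hypothetical formulation for illustration, not the authors' cost function: depth_obs stands for a SLAM depth value at the observed pixel, and depth_weight is an assumed factor converting meters into pixel-equivalent units. Because the depth term is expressed in metric units, it penalizes a global rescaling of the scene, which the pure reprojection term cannot detect.

```python
import numpy as np

def depth_augmented_residual(K, R, t, X, uv_obs, depth_obs=None, depth_weight=100.0):
    """Reprojection residual, optionally extended with a weighted depth residual.

    depth_obs: measured depth (m) along the camera z-axis, or None if unavailable.
    depth_weight: hypothetical scaling of the depth term (pixels per meter).
    """
    Xc = R @ X + t                                   # camera-frame point
    uvw = K @ Xc
    r_img = uvw[:2] / uvw[2] - np.asarray(uv_obs, dtype=float)
    if depth_obs is None:
        return r_img                                 # plain reprojection term only
    r_depth = depth_weight * (Xc[2] - depth_obs)     # metric disagreement with SLAM depth
    return np.append(r_img, r_depth)

K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
X = np.array([0.0, 0.0, 2.0])
print(depth_augmented_residual(K, np.eye(3), np.zeros(3), X, (320.0, 240.0), depth_obs=2.5))
```

Stacking such residuals for all observations and minimizing their squared norm would give a depth-constrained BA in which the SLAM depths anchor the otherwise free SfM scale.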

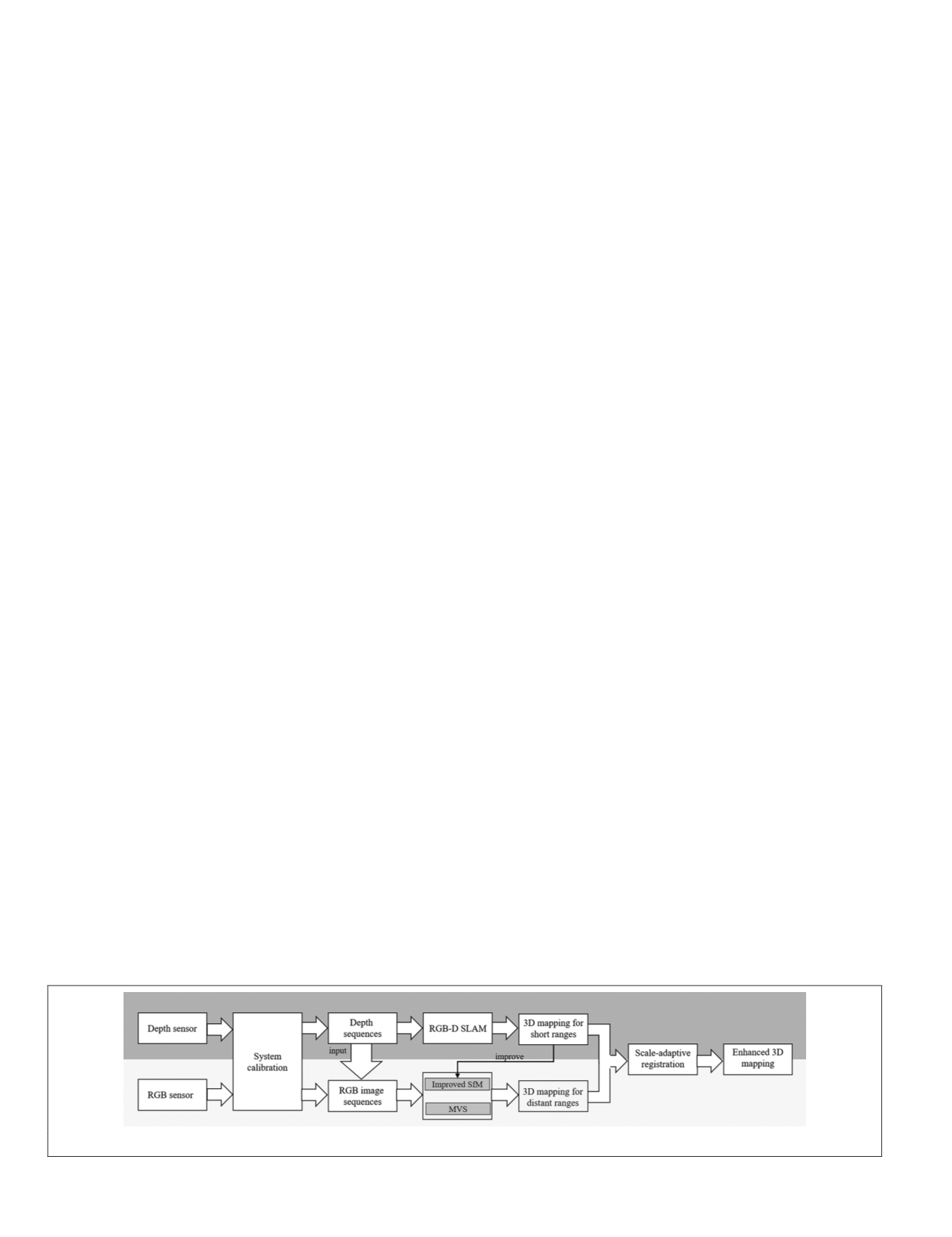

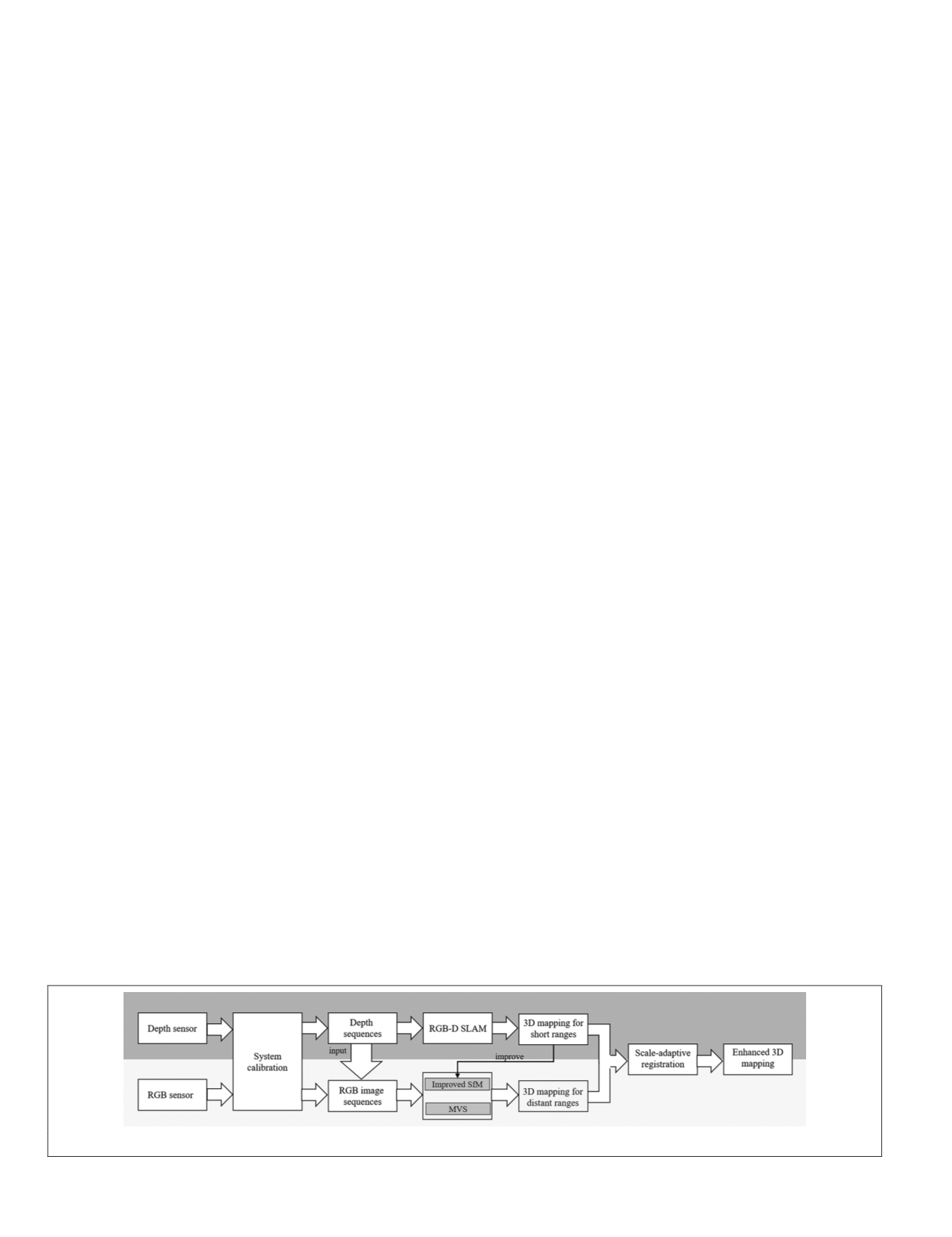

Enhanced 3D Mapping by Integrating Depth Measurements and

Image Sequences

Overview of the Proposed Approach

A calibration process must be carried out on the RGB-D sensor before the 3D mapping tasks begin. We implemented a precise calibration (Tang et al. 2016) on the RGB-D sensor to determine the spatial relationship between the RGB and IR (depth) cameras. We used a state-of-the-art online SLAM method, BundleFusion (Dai et al. 2017), to generate dense 3D mapping results at short range with a commodity depth sensor. We then introduced the information

, point-to-pixel) into the

SfM

system

r and Frahm 2016) to carry out con-

scale is imported in the improved

epth constraints, distortions may

occur during the SfM and MVS. Therefore, we further implemented scale-adaptive registration on those two point clouds to generate enhanced and extended 3D mapping results. Figure 1 shows an overview of the proposed approach.
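Scale-adaptive registration needs a scale factor in addition to a rigid transformation. As a stand-in, the sketch below uses Umeyama's closed-form least-squares similarity estimate, which is not necessarily the registration used in this work. Given corresponding points from the scale-free SfM cloud (src) and the metric SLAM cloud (dst), it returns s, R, and t minimizing the mean squared distance between dst and s R src + t. How correspondences are obtained (for example, in the overlapping short-range region) is assumed and not shown.

```python
import numpy as np

def umeyama_similarity(src, dst):
    """Estimate scale s, rotation R, translation t with dst ~ s * R @ src + t.

    src, dst: (N, 3) arrays of corresponding points, N >= 3, not all collinear.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / len(src)            # 3x3 cross-covariance of the centered clouds
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0                    # enforce a proper rotation (no reflection)
    R = U @ S @ Vt
    var_s = (xs ** 2).sum() / len(src)    # variance of the source cloud
    s = np.trace(np.diag(D) @ S) / var_s  # optimal scale factor
    t = mu_d - s * R @ mu_s
    return s, R, t

# Synthetic check: a known similarity transform applied to random points.
rng = np.random.default_rng(0)
src = rng.normal(size=(20, 3))
c, sn = np.cos(0.4), np.sin(0.4)
R_true = np.array([[c, -sn, 0.0], [sn, c, 0.0], [0.0, 0.0, 1.0]])
dst = 2.5 * src @ R_true.T + np.array([1.0, -2.0, 0.5])
s, R, t = umeyama_similarity(src, dst)
print(s, t)
```

In practice such a closed-form step is usually wrapped in an outlier-robust or iterative scheme (e.g., RANSAC or ICP-style re-matching), since real correspondences between the two clouds are noisy.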

3D Mapping from the RGB-D Sensor in Short Ranges

In our approach, BundleFusion (Dai et al. 2017) was used for SLAM and 3D mapping at short range. The main difference between BundleFusion and other approaches is that BundleFusion is a fully parallelizable, sparse-to-dense global pose optimization framework. Sparse RGB features are detected with the scale-invariant feature transform (SIFT) detector (Lo and Siebert 2009; Wu et al. 2012); sparse correspondences are then established between the input frames and used to carry out a coarse global alignment. To avoid false loop closures, mismatches are detected and removed: detected keypoints are matched against all previous frames, and the matches are carefully filtered. The coarse alignment is then refined by optimizing for dense photometric and geometric consistency. We implement a hierarchical local-to-global strategy to optimize camera tracking and orientation. On the first hierarchy level, n consecutive frames (e.g., n = 10) compose a chunk; in each chunk, a keyframe is defined and the other frames in the chunk are matched to it, after which the program executes a local BA. On the second hierarchy level, all chunks are collected and the algorithm carries out a global BA. The coarse global pose optimization ensures that the subsequent fine alignment converges to a good solution. The fine global optimization step uses the same hierarchical strategy as the coarse step. After the SLAM program has run, a dense 3D map is obtained.
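The two-level chunk hierarchy described above can be sketched as a simple scheduling step. This is an illustrative assumption rather than BundleFusion's code: the function names are hypothetical, the first frame of each chunk is assumed to be its keyframe, and the global level is represented by the chunk keyframes only.

```python
def make_chunks(num_frames, n=10):
    """Split frame indices 0..num_frames-1 into chunks of n consecutive frames.

    The first frame of each chunk is taken as its keyframe (an assumption).
    The last chunk may contain fewer than n frames.
    """
    chunks = []
    for start in range(0, num_frames, n):
        frames = list(range(start, min(start + n, num_frames)))
        chunks.append({"keyframe": frames[0], "frames": frames})
    return chunks

def hierarchical_schedule(num_frames, n=10):
    """Return the two-level plan: one local BA per chunk, then one global BA.

    Local problems: the frames of each chunk, aligned to that chunk's keyframe.
    Global problem: the keyframes, each standing in for its whole chunk.
    """
    chunks = make_chunks(num_frames, n)
    local_problems = [c["frames"] for c in chunks]
    global_problem = [c["keyframe"] for c in chunks]
    return local_problems, global_problem

local_problems, global_problem = hierarchical_schedule(25, n=10)
print(local_problems)
print(global_problem)
```

Keeping the local problems small and independent is what allows them to be solved in parallel, while the global problem stays compact because it involves only one representative per chunk.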

Figure 1. Workflow of the proposed approach.

634

September 2019

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING