method is not suitable for registering panoramic images nos. 24–31 because the skyline pixels and points do not match.

Weakness and Future Work

We use road lamp and road lane feature points for panoramic image registration. Road lamp feature points are evenly distributed in the middle and upper parts of the panoramic image, and road lane feature points are evenly distributed in the middle and lower parts. These distributions benefit the registration accuracy. However, because the feature points are close to the road, the registration accuracy decreases with increasing distance (e.g., our registration accuracy was 5.88 and 10.45 pixels when evaluated with different feature points). Therefore, adding other feature points to improve the robustness and accuracy of registration is important. Furthermore, our registration method is limited by the road scene; for example, small inner streets may have no road lanes. In such a case, the following methods can be used: (1) Take the intersections of road lamp poles with the road surface as feature points, which can replace the road lane feature points. We use only the vertex of the road lamp in this article, but the bottom of the road lamp can be used when road lanes are lacking. (2) Take advantage of the road curb, which is a linear feature. Unlike in a perspective image, the road curb in a panoramic image is a curve similar to a parabola; considering the stitching error between the adjacent horizontal lenses, it is difficult to express this curve with a strict mathematical

model, and how to make better use of ro

research. (3) The feature points should h

bility. Road lamp and lane are the comm

roads, but it is still limited by road scen

to extract different feature points according to road scenes, such as building corners and tree feature points. The feature points need to be extracted from the panoramic image sequence and LiDAR points simultaneously; this is the focus of our future research. Finally, a panoramic view built from well-stitched images can improve the registration accuracy, which requires eliminating the stitching errors as much as possible. We cannot guarantee that our own stitching is better than that of the camera software, but we have researched panoramic stitching and will continue to do so.
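The remark in item (2) that a straight road curb appears as a curve in a panoramic image can be illustrated with a minimal sketch. It assumes an ideal equirectangular (spherical) projection with no stitching error, an 8000-pixel-wide by 4000-pixel-high image, and a camera frame with x forward, y left, and z up. The function name `project_equirectangular` and the curb position (3 m to the right, 2 m below the camera) are hypothetical values chosen only for illustration; they are not taken from the article.

```python
import math

WIDTH, HEIGHT = 8000, 4000  # assumed layout of the 4000 x 8000 pixel panorama


def project_equirectangular(x, y, z):
    """Project a camera-frame point (x forward, y left, z up, in metres)
    to (column, row) under an assumed ideal equirectangular model."""
    azimuth = math.atan2(y, x)                   # horizontal angle
    elevation = math.atan2(z, math.hypot(x, y))  # vertical angle
    col = (0.5 - azimuth / (2.0 * math.pi)) * WIDTH
    row = (0.5 - elevation / math.pi) * HEIGHT
    return col, row


# Hypothetical straight curb: 3 m to the right of the camera, 2 m below it,
# sampled every 5 m from 20 m behind to 20 m ahead.
curb_pixels = [
    project_equirectangular(float(x), -3.0, -2.0) for x in range(-20, 21, 5)
]

for col, row in curb_pixels:
    print(f"col={col:7.1f}  row={row:7.1f}")
```

Under these assumptions the row coordinate is largest where the curb passes closest to the camera and decreases symmetrically toward the horizon on both sides, so the straight 3D line maps to a curve rather than a straight image line. Real panoramas stitched from several lenses deviate from this ideal model, which is the difficulty noted above.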

Conclusions

In this article, we selected road lamp and road lane feature points for registration. One reason for this selection is that road lamps and lanes are common appendages of roads with regular spatial distributions, which makes our registration method widely applicable and highly precise. Another reason is that, compared with other road objects, road lamp and lane feature points are easy to extract from LiDAR points and panoramic images; they can be extracted by a simple method, such as height constraints. Therefore, we first proposed using road lamp and lane feature points for the registration of LiDAR points and panoramic image sequences in MMS, including the extraction and matching of these feature points. In the experiments, 31 panoramic images (4000 × 8000 pixels) were used to verify the applicability and accuracy of our registration method. Our method was compared with the original registration method and the skyline-based method, and the results showed that it is effective, with a registration accuracy of less than 10 pixels. Moreover, the average registration accuracy of our method is 5.84 pixels, which is far better than the 56.24 pixels obtained by the original registration method.
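The height constraints mentioned above can be sketched as a simple filter on LiDAR points. This is only an illustration under stated assumptions, not the extraction procedure used in this article: the function `filter_by_height`, the flat ground height, and the thresholds for lane and lamp candidates are all hypothetical.

```python
def filter_by_height(points, ground_z, min_height, max_height):
    """Keep (x, y, z) points whose height above ground_z lies in
    [min_height, max_height]. All values are in metres."""
    return [p for p in points if min_height <= p[2] - ground_z <= max_height]


# Hypothetical LiDAR points (x, y, z) with the ground assumed flat at z = 0.
points = [
    (1.0, 2.0, 0.02),   # near the road surface
    (1.1, 2.0, 0.05),   # near the road surface
    (5.0, -3.0, 8.4),   # high above the road
    (5.0, -3.0, 9.1),   # high above the road
    (7.0, 4.0, 3.0),    # mid-height object
]

# Assumed thresholds: lane candidates within 0.1 m of the ground,
# lamp-top candidates between 8 m and 12 m above it.
lane_candidates = filter_by_height(points, 0.0, -0.1, 0.1)
lamp_candidates = filter_by_height(points, 0.0, 8.0, 12.0)

print(len(lane_candidates), len(lamp_candidates))
```

A height filter of this kind only narrows the candidates; in practice the ground height varies along the trajectory, and further cues would be needed to isolate the actual feature points.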

Acknowledgments

The authors would like to thank Prof. S.J. from Wuhan University, Wuhan, China, for help with providing the data set. We thank the reviewers for their comments.

References

Abayowa, B. O., A. Yilmaz, and R. C. Hardie. 2015. Automatic registration of optical aerial imagery to a LiDAR point cloud for generation of city models. ISPRS Journal of Photogrammetry and Remote Sensing 106:68–81. isprsjprs.2015.05.006

Barnea, S. and S. Filin. 2013. Segmentation of terrestrial laser scanning data using geometry and image information. ISPRS Journal of Photogrammetry and Remote Sensing 76:33–48. 10.1016/j.isprsjprs.2012.05.001

Brown, M., D. Windridge, and J. Y. Guillemaut. 2015. Globally optimal 2D-3D registration from points or lines without correspondences. Pages 2111–2119 in Proceedings of the IEEE International Conference on Computer Vision. org/10.1109/ICCV.2015.244

Cabo, C., A. Kukko, S. García-Cortés, H. Kaartinen, J. Hyyppä, and C. Ordoñez. 2016. An algorithm for automatic road asphalt edge delineation from mobile laser scanner data using the line clouds concept. Remote Sensing 8:740. rs8090740

Canny, J. 1986. A computational approach to edge detection. IEEE Transactions on Pattern Analysis and Machine Intelligence 8:679–698.

Che, E. and M. Olsen. 2019. An efficient framework for mobile lidar

ction and Mo-norvana segmentation.

Remote

e, F. Ganovelli, R. Gherardi, A. Fusiello, and

3. Fully automatic registration of image sets

on approximate geometry.

International Journal of Computer

Vision

102:91–111.

Cui, T., S. Ji, J. Shan, J. Gong and K. Liu. 2017. Line-based registration of panoramic images and LiDAR point clouds for mobile mapping. Sensors (Switzerland) 17:–E70X. org/10.3390/s17010070

Fangi, G. and C. Nardinocchi. 2013. Photogrammetric processing of spherical panoramas. Photogrammetric Record 28:293–311.

Gong, M., S. Zhao, L. Jiao, D. Tian and S. Wang. 2014. A novel coarse-to-fine scheme for automatic image registration based on SIFT and mutual information. IEEE Transactions on Geoscience and Remote Sensing 52:4328–4338. TGRS.2013.2281391

Kaminsky, R. S., N. Snavely, S. M. Seitz and R. Szeliski. 2009. Alignment of 3d point clouds to overhead images. Pages 63–70 in 2009 IEEE Conference on Computer Vision and Pattern Recognition, CVPR.

Kumar, P., P. Lewis and T. McCarthy. 2017. The potential of active contour models in extracting road edges from mobile laser scanning data. Infrastructures 2:X–X16. infrastructures2030009

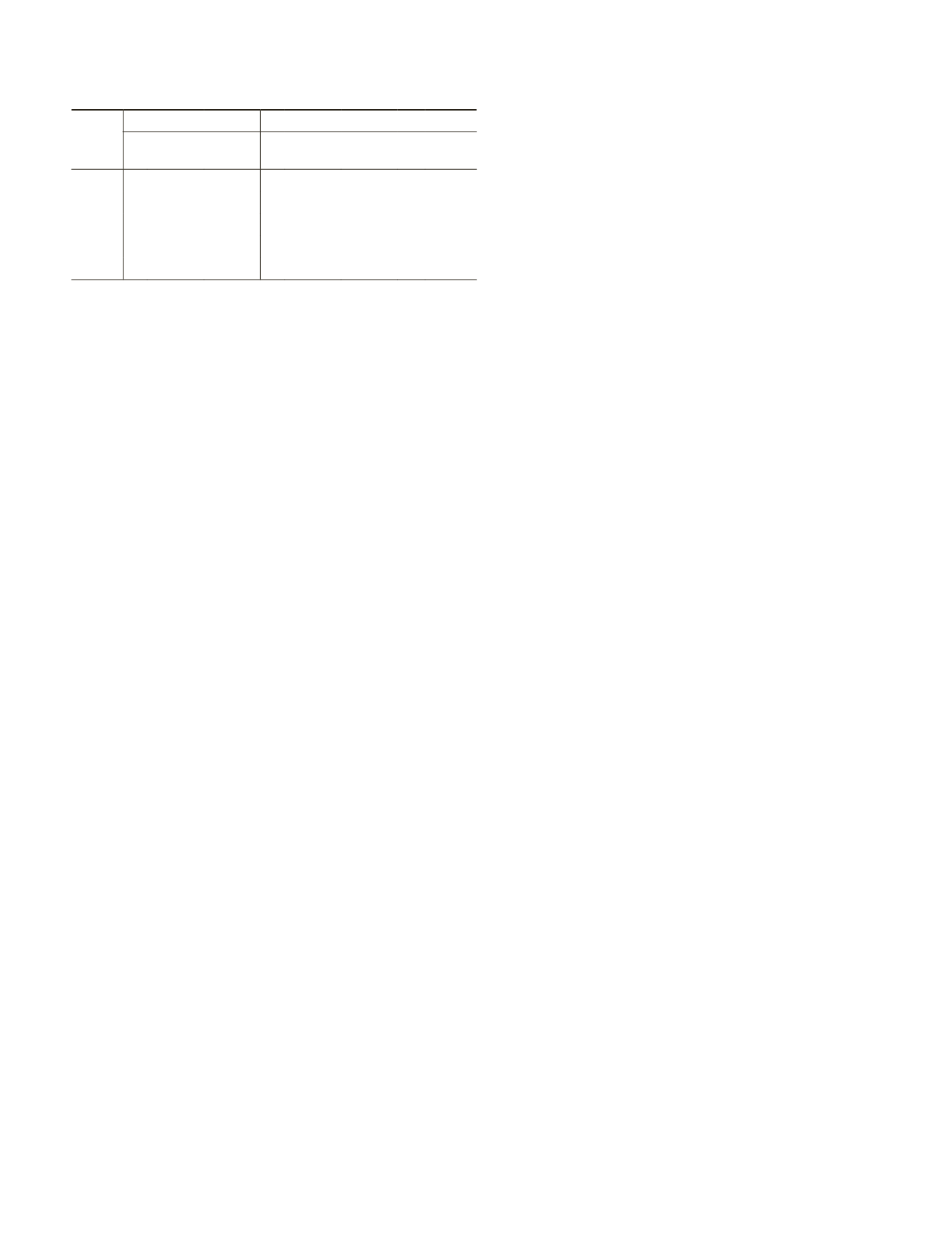

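Table 4. Registration accuracy analysis compared with Zhu et al. (2018) (pixels).

| No. | Zhu et al. (2018): n | Zhu et al. (2018): Method I | Zhu et al. (2018): Skyline Method | Our Paper: n | Our Paper: Method I | Our Paper: Method II | Our Paper: n | Our Paper: Method II |
|---|---|---|---|---|---|---|---|---|
| 4/N − 2 | 38 | 19.41 | 9.31 | 12 | 35.64 | 6.24 | 38 | 11.69 |
| 5/N − 1 | 38 | 23.52 | 9.20 | 13 | 44.16 | 6.07 | 38 | 10.80 |
| 6/N | 38 | 29.04 | 8.70 | 11 | 40.62 | 6.40 | 38 | 15.10 |
| 7/N + 1 | 38 | 37.30 | 11.77 | 10 | 51.24 | 4.40 | 38 | 7.17 |
| 8/N + 2 | 38 | 46.54 | 16.44 | 11 | 54.00 | 6.30 | 38 | 7.48 |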
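
As a check on the figures quoted in the Weakness and Future Work section, the two Method II columns of Table 4 can be averaged directly. The short sketch below copies the five values of each column from the table; the variable names are illustrative, and which column corresponds to which set of evaluation points is our reading of the flattened table layout.

```python
from statistics import mean

# Method II columns of Table 4 (pixels), one value per image row.
method2_fewer_points = [6.24, 6.07, 6.40, 4.40, 6.30]   # n = 10 to 13
method2_38_points = [11.69, 10.80, 15.10, 7.17, 7.48]   # n = 38

mean_fewer = round(mean(method2_fewer_points), 2)
mean_38 = round(mean(method2_38_points), 2)

print(mean_fewer, mean_38)  # 5.88 10.45
```

The two means match the 5.88 and 10.45 pixels reported in the text for evaluations with different feature points.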

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

November 2019

839