The experimental results are as follows:

The results in Table 14 lead to a similar conclusion: the mean localization accuracy of WTLS is essentially equal to that of parallaxBA and TLS and higher than that of BA+LS and BA+LM. The relative localization accuracy columns give the mean relative localization accuracies (smaller values indicate higher accuracy). For WTLS, TLS, BA+LS, BA+LM, and parallaxBA these are 1.86%, 1.88%, 1.92%, 1.90%, and 1.86%, respectively. The localization accuracy is also clearly higher than in the previous section. This indicates that errors in the coordinate system transformation framework degrade the positioning accuracy.
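The relative accuracies in Table 14 are consistent with the absolute accuracy divided by the relative distance (metres converted to millimetres). Each reported mean matches the average of the six per-pair values after rounding them to two decimals. The sketch below reproduces that arithmetic using only values transcribed from Table 14. The helper names are hypothetical. For BA+LS the mean of the rounded values falls exactly on 1.915, so the reported 1.92% depends on the rounding rule applied.

```python
# Values transcribed from Table 14:
# station pair -> (relative distance in m, absolute accuracy in mm per method).
METHODS = ("BA+LS", "parallaxBA", "BA+LM", "TLS", "WTLS")

TABLE_14 = {
    "S1-S2": (25.1, (743.0, 730.4, 743.0, 735.5, 732.9)),
    "S2-S3": (25.1, (351.7, 344.1, 349.2, 349.2, 344.1)),
    "S3-S4": (25.9, (344.0, 320.7, 336.3, 338.8, 325.9)),
    "I1-I2": (26.3, (300.1, 276.4, 289.6, 281.7, 279.1)),
    "I2-I3": (22.4, (615.1, 606.2, 608.4, 606.2, 601.7)),
    "I3-I4": (39.8, (760.2, 740.3, 760.2, 740.3, 736.3)),
}


def relative_accuracy_percent(abs_mm, distance_m):
    """Absolute localization error (mm) as a percentage of the distance travelled (m)."""
    return abs_mm / (distance_m * 1000.0) * 100.0


def mean_relative_accuracy(decimals=2):
    """Mean relative accuracy (%) per method over all station pairs.

    Each per-pair value is rounded first, as Table 14 reports it.
    """
    sums = dict.fromkeys(METHODS, 0.0)
    for distance_m, abs_values in TABLE_14.values():
        for method, abs_mm in zip(METHODS, abs_values):
            sums[method] += round(relative_accuracy_percent(abs_mm, distance_m), decimals)
    return {method: total / len(TABLE_14) for method, total in sums.items()}


if __name__ == "__main__":
    for method, mean in mean_relative_accuracy().items():
        print(f"{method:>10}: {mean:.3f}%")
```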

Conclusions

This paper proposes a new localization algorithm for a planetary rover. The algorithm is based on weighted total least squares (WTLS) adjustment. Unlike ordinary least squares (OLS), WTLS accounts for errors in both the coefficient matrix and the observation vector. The algorithm consists of two parts: (1) estimation of the epipolar geometry of the binocular cameras and (2) planetary rover localization.
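The epipolar geometry of a calibrated stereo pair can be summarized by the essential matrix E = [t]×R. The normalized image coordinates x1 and x2 of the same 3D point then satisfy the coplanarity condition x2ᵀ E x1 = 0. Written out, each correspondence gives one linear equation in the nine entries of E, and its coefficients are products of measured image coordinates. Image noise therefore enters the coefficient matrix itself, which is the situation a TLS-type estimator is designed for.

The minimal sketch below assumes the convention X2 = R X1 + t and uses a hypothetical rotation, baseline, and 3D point, none of which come from the paper. It only checks the constraint and builds one coefficient row. It is not the authors' WTLS relative orientation procedure.

```python
import math


def skew(t):
    """Cross-product matrix [t]x, so that matvec(skew(t), v) equals t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty], [tz, 0.0, -tx], [-ty, tx, 0.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(a, v):
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def rot_y(angle_rad):
    """Rotation about the camera y-axis (hypothetical convergence angle)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def essential_matrix(r, t):
    """E = [t]x R for the convention X2 = R X1 + t."""
    return matmul(skew(t), r)


def normalize(p):
    """Project a camera-frame 3D point to normalized homogeneous image coordinates."""
    return [p[0] / p[2], p[1] / p[2], 1.0]


def coplanarity_row(x1, x2):
    """Coefficients a with a . vec(E) == x2^T E x1 (vec = row-major flattening of E)."""
    return [x2[i] * x1[j] for i in range(3) for j in range(3)]


def epipolar_residual(e, x1, x2):
    """Value of x2^T E x1; zero for a noise-free correspondence."""
    return sum(a * b for a, b in zip(x2, matvec(e, x1)))


if __name__ == "__main__":
    R = rot_y(math.radians(2.0))
    t = (-0.27, 0.0, 0.0)  # hypothetical 0.27 m stereo baseline
    E = essential_matrix(R, t)
    X1 = (0.5, -0.3, 4.0)  # hypothetical point 4 m in front of camera 1
    X2 = [a + b for a, b in zip(matvec(R, X1), t)]
    x1, x2 = normalize(X1), normalize(X2)
    print(epipolar_residual(E, x1, x2))  # close to zero (floating-point rounding only)
```

Stacking one `coplanarity_row` per correspondence gives a homogeneous system A·vec(E) = 0 whose matrix A is built entirely from noisy measurements.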

A rigorous analysis has been presented of why the new planetary rover localization algorithm is superior to the existing OLS and BA methods.
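The simplest errors-in-variables problem shows why errors in the coefficient matrix matter: fitting a straight line y = a + bx when both x and y are noisy. The sketch below contrasts the OLS slope with the Deming regression slope. Deming regression is a closed-form line fit that corresponds to a TLS fit with homogeneous per-axis weights, assuming a known ratio delta = var(y error) / var(x error). Setting delta = 1 gives orthogonal regression. This is a toy illustration of the principle under those assumptions, not the WTLS adjustment used for rover localization. The data points are constructed for the example.

```python
import math


def _centered_moments(xs, ys):
    """Means and centred sums of squares/products of the samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return mx, my, sxx, syy, sxy


def ols_line(xs, ys):
    """OLS: all error is attributed to y; the x values (coefficient matrix) are treated as exact."""
    mx, my, sxx, _, sxy = _centered_moments(xs, ys)
    b = sxy / sxx
    return my - b * mx, b


def deming_line(xs, ys, delta=1.0):
    """Deming regression: errors in both x and y, delta = var(y error) / var(x error).

    delta = 1 minimizes squared perpendicular distances (orthogonal regression);
    delta -> infinity recovers OLS. Assumes sxy != 0.
    """
    mx, my, sxx, syy, sxy = _centered_moments(xs, ys)
    d = syy - delta * sxx
    b = (d + math.sqrt(d * d + 4.0 * delta * sxy * sxy)) / (2.0 * sxy)
    return my - b * mx, b


if __name__ == "__main__":
    # Points on y = x displaced by +/-0.5 perpendicular to the line,
    # i.e. noise in both coordinates.
    xs = [1.5, 0.5, 3.5, 2.5]
    ys = [0.5, 1.5, 2.5, 3.5]
    print(ols_line(xs, ys))     # slope 0.6: biased towards zero
    print(deming_line(xs, ys))  # slope 1.0, intercept 0.0: the true line
```

With noise in x, OLS attenuates the slope, while the errors-in-variables fit recovers the line that generated the data.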

Experimental results on the dataset from China’s first lunar rover demonstrate the following:

(1) the proposed relative orientation algorithm based on WTLS achieves higher precision than OLS in estimating the epipolar geometry of the binocular cameras;

(2) weighting the 3D coordinate observations with a weight matrix is more effective for the rover’s visual localization than treating them as equal-precision observations; and

(3) the proposed algorithm achieves accuracy equal to that of BA+LS, parallaxBA, and BA+LM, with better efficiency and convergence properties.
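Finding (2) can be illustrated with a standard stereo error model. The depth error of a triangulated point grows roughly as σ_Z ≈ Z²σ_d/(fB), where f is the focal length in pixels, B the baseline, and σ_d the disparity noise. The lateral error grows as Zσ_u/f, where σ_u is the image-coordinate noise. Weighting each coordinate by the inverse of its variance lets near, precise points dominate the estimate, whereas equal weights let far, noisy points distort it.

The sketch below estimates only a translation between two stations, ignoring rotation for brevity, with per-axis weights. The camera parameters and helper names are hypothetical, and this is not the weight matrix derived in the paper.

```python
# Hypothetical stereo camera parameters (illustrative only, not from the paper).
FOCAL_PX = 1000.0    # focal length in pixels
BASELINE_M = 0.27    # stereo baseline in metres
SIGMA_DISP_PX = 0.5  # disparity noise in pixels
SIGMA_IMG_PX = 0.5   # image-coordinate noise in pixels


def point_variances(p):
    """Approximate per-axis variances (m^2) of a stereo point p = (X, Y, Z) in its camera frame."""
    z = p[2]
    sigma_xy = z * SIGMA_IMG_PX / FOCAL_PX
    sigma_z = z * z * SIGMA_DISP_PX / (FOCAL_PX * BASELINE_M)
    return (sigma_xy ** 2, sigma_xy ** 2, sigma_z ** 2)


def weighted_translation(src, dst):
    """Weighted LS estimate of t in dst_i = src_i + t, weight 1 / (var_src + var_dst) per axis."""
    num = [0.0, 0.0, 0.0]
    den = [0.0, 0.0, 0.0]
    for p, q in zip(src, dst):
        var_p, var_q = point_variances(p), point_variances(q)
        for k in range(3):
            w = 1.0 / (var_p[k] + var_q[k])
            num[k] += w * (q[k] - p[k])
            den[k] += w
    return tuple(n / d for n, d in zip(num, den))


def equal_weight_translation(src, dst):
    """Same model with equal-precision observations: the plain mean of the differences."""
    n = len(src)
    return tuple(sum(q[k] - p[k] for p, q in zip(src, dst)) / n for k in range(3))


if __name__ == "__main__":
    # True translation is (1, 0, 0); the far point (Z = 20 m) carries a 0.5 m x-error.
    src = [(0.0, 0.0, 2.0), (1.0, 0.0, 5.0), (0.0, 1.0, 20.0)]
    dst = [(1.0, 0.0, 2.0), (2.0, 0.0, 5.0), (1.5, 1.0, 20.0)]
    print(weighted_translation(src, dst))      # x close to 1.0
    print(equal_weight_translation(src, dst))  # x pulled to about 1.17
```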

The main reason is that the proposed algorithm avoids the defects of the existing OLS and BA algorithms: they consider errors only in the observation vector, and they depend on the initial parameter values. The proposed algorithm is therefore more theoretically sound and converges directly. In the actual mission, the rover localization system at the ground control center on Earth read real-time data correctly and ran stably with both our method and the BA algorithms. Because the two methods verify each other, this mutual verification strengthens the validity and credibility of the pose estimation conclusions.

However, the proposed algorithm cannot guarantee convergence to the true minimum under all conditions. The robustness of WTLS to outliers in planetary rover visual localization requires further investigation. In addition, adaptive image matching and target tracking between stations with "front-back" overlapping fields of view (FOV) are promising topics for further research on planetary rover visual SLAM and automatic navigation.

Acknowledgments

Ma YouQing, Liu ShaoChuang, and Peng Song are supported by the National Natural Science Foundation of China (No. 41601494). We thank the reviewers and the associate editor for their comments and their hard work on our manuscript.


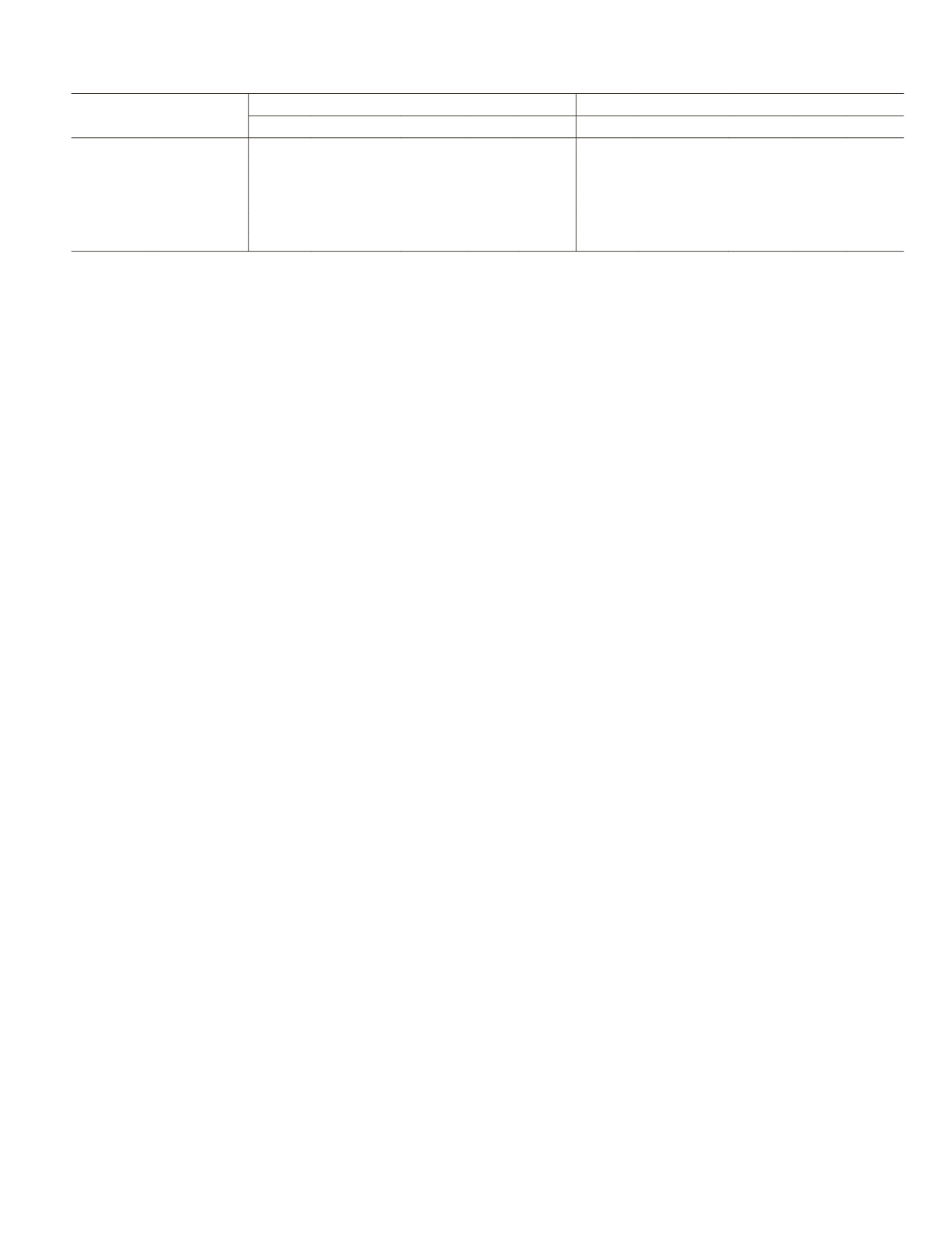

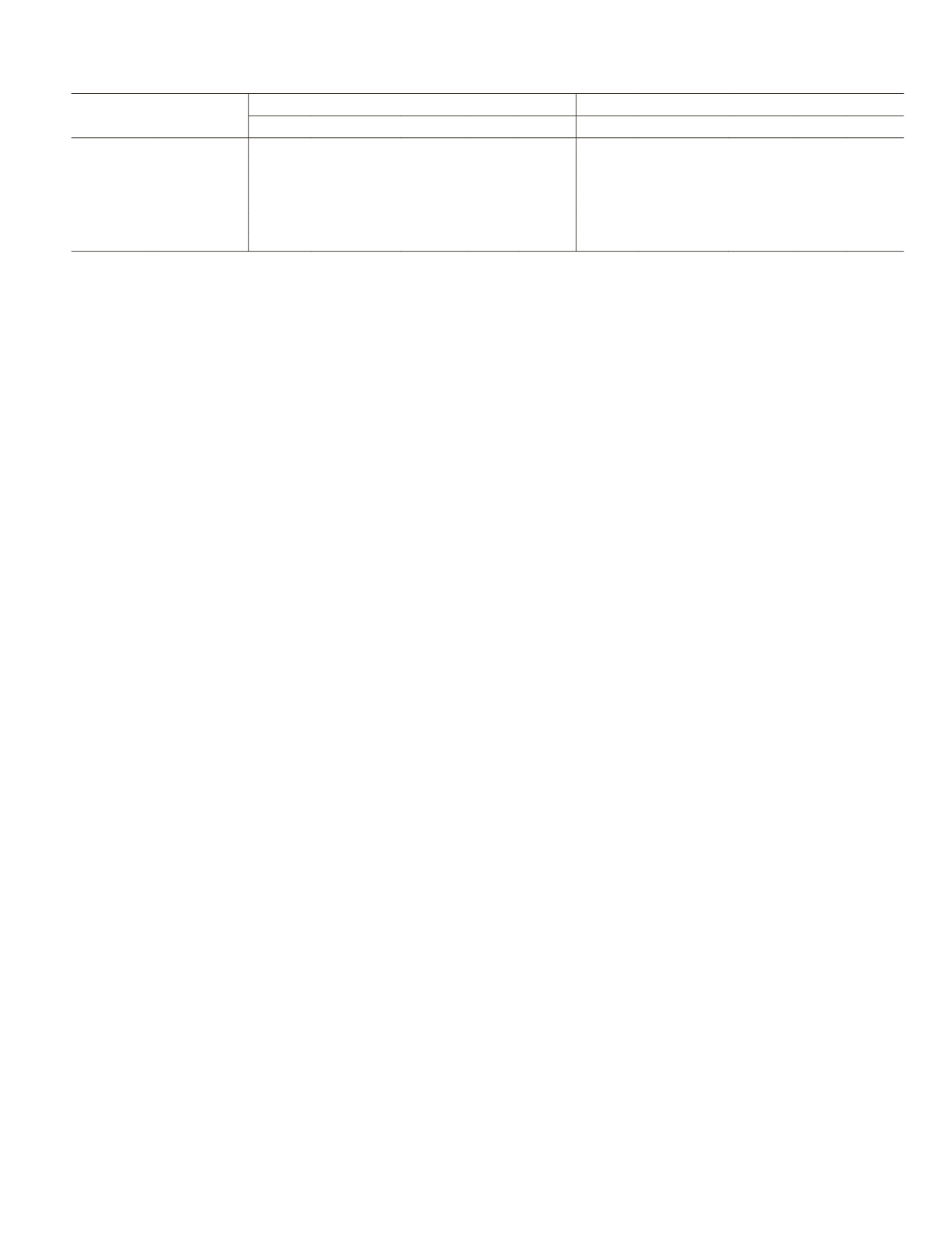

Table 14. Summary localization results of the simulated stereo camera system in the test field.

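Abs. = absolute localization accuracy (mm); rel. = relative localization accuracy (%).

| Number | Relative distance (m) | BA+LS abs. (mm) | parallaxBA abs. (mm) | BA+LM abs. (mm) | TLS abs. (mm) | WTLS abs. (mm) | BA+LS rel. (%) | parallaxBA rel. (%) | BA+LM rel. (%) | TLS rel. (%) | WTLS rel. (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| S1-S2 | 25.1 | 743.0 | 730.4 | 743.0 | 735.5 | 732.9 | 2.96 | 2.91 | 2.96 | 2.93 | 2.92 |
| S2-S3 | 25.1 | 351.7 | 344.1 | 349.2 | 349.2 | 344.1 | 1.40 | 1.37 | 1.39 | 1.39 | 1.37 |
| S3-S4 | 25.9 | 344.0 | 320.7 | 336.3 | 338.8 | 325.9 | 1.33 | 1.24 | 1.30 | 1.31 | 1.26 |
| I1-I2 | 26.3 | 300.1 | 276.4 | 289.6 | 281.7 | 279.1 | 1.14 | 1.05 | 1.10 | 1.07 | 1.06 |
| I2-I3 | 22.4 | 615.1 | 606.2 | 608.4 | 606.2 | 601.7 | 2.75 | 2.71 | 2.72 | 2.71 | 2.69 |
| I3-I4 | 39.8 | 760.2 | 740.3 | 760.2 | 740.3 | 736.3 | 1.91 | 1.86 | 1.91 | 1.86 | 1.85 |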

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

October 2018

617