and the denoised image, which obtained metric Q values of 0.0020 and 0.0023, respectively.

Enhanced Discriminative Feature Description near Missing Regions

To validate the contribution of the VIS image gap inpainting to the classification, the five single spatial features and the multiple-feature VS approach were each evaluated. Table 7 records the classification accuracy results, and the quantitative assessments for each class are shown in Figure 8.
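The accuracy indices reported in Table 7 (OA, AA, and κ) are conventionally derived from a confusion matrix. The following is a minimal sketch under that assumption, using the standard confusion-matrix definitions; the function name `accuracy_summary` and the example matrix are illustrative and are not taken from this study.

```python
import numpy as np

def accuracy_summary(cm):
    """Return (OA, AA, kappa) for a confusion matrix.

    Assumed layout: cm[i, j] is the number of test pixels whose reference
    class is i and whose predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    oa = diag.sum() / n                       # overall accuracy
    per_class = diag / cm.sum(axis=1)         # per-class (producer's) accuracy
    aa = per_class.mean()                     # average accuracy
    # Chance agreement expected from the row and column marginals
    pe = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    kappa = (oa - pe) / (1.0 - pe)
    return oa, aa, kappa

# Made-up three-class matrix, for illustration only
example_cm = [[50, 2, 3],
              [5, 40, 5],
              [2, 3, 45]]
oa, aa, kappa = accuracy_summary(example_cm)
print(f"OA={oa:.4f}  AA={aa:.4f}  kappa={kappa:.4f}")
```

Because AA weights every class equally while OA weights every pixel equally, the two can diverge when the class sizes are unbalanced, which is one reason both are usually reported together.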

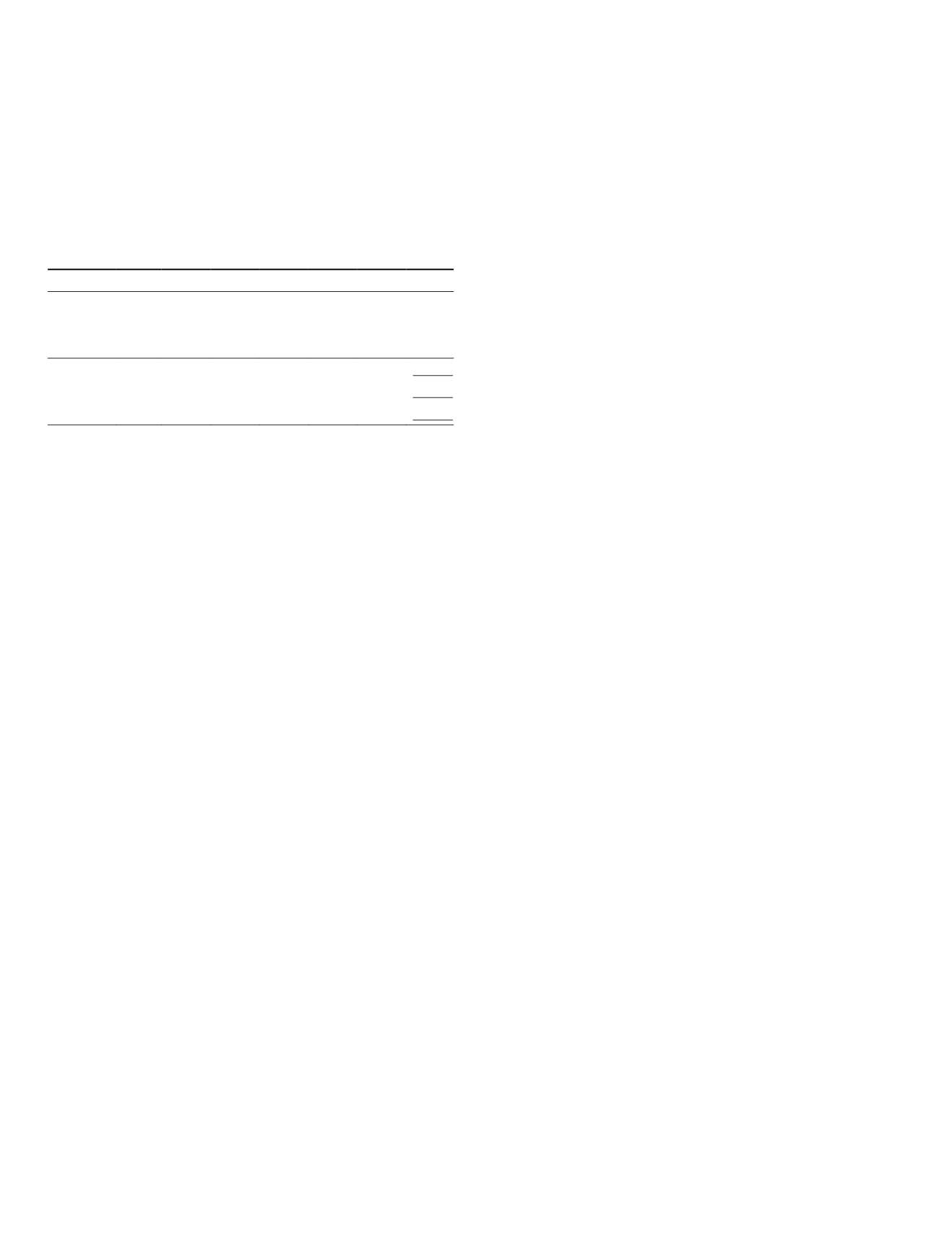

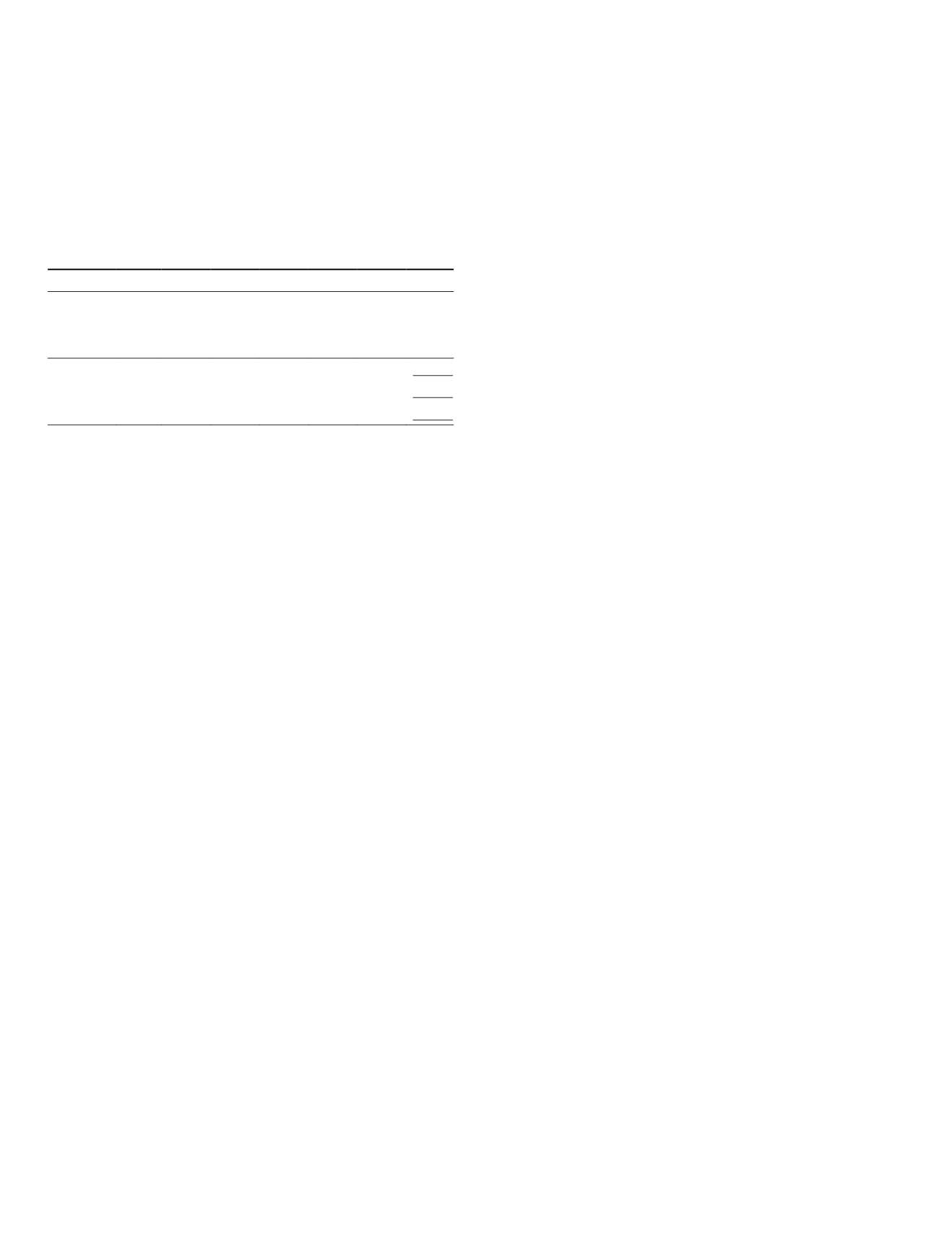

Table 7. Classification Accuracy on the Edge Sub-Part of the Second Test Set for Each Spatial Feature Related Approach for the Remaining Class Classification of the Study Area, Before and After Image Inpainting

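| Inpainting | Index | Area | SD | DB | MI | GLCM | VS |
|---|---|---|---|---|---|---|---|
| With | OA | 0.9096 | 0.9092 | 0.9103 | 0.8960 | 0.9127 | 0.9350 |
| With | AA | 0.8819 | 0.8628 | 0.8856 | 0.8430 | 0.9322 | 0.9448 |
| With | κ | 0.8790 | 0.8778 | 0.8800 | 0.8604 | 0.8843 | 0.9133 |
| Without | OA | 0.8935 | 0.8388 | 0.8950 | 0.8951 | 0.9080 | 0.9274 |
| Without | AA | 0.8721 | 0.8138 | 0.8807 | 0.8321 | 0.9293 | 0.9365 |
| Without | κ | 0.8572 | 0.7881 | 0.8596 | 0.8590 | 0.8781 | 0.9031 |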
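As a quick check on Table 7, the OA gain attributable to inpainting can be computed for each feature directly from the tabulated values. The short sketch below uses only the OA rows of the table; the variable names are illustrative.

```python
# OA values copied from Table 7 (with and without image gap inpainting)
oa_with = {"Area": 0.9096, "SD": 0.9092, "DB": 0.9103,
           "MI": 0.8960, "GLCM": 0.9127, "VS": 0.9350}
oa_without = {"Area": 0.8935, "SD": 0.8388, "DB": 0.8950,
              "MI": 0.8951, "GLCM": 0.9080, "VS": 0.9274}

# Gain in OA for each feature, rounded to the table's four decimals
gains = {name: round(oa_with[name] - oa_without[name], 4) for name in oa_with}

# Feature that benefits most from inpainting
best = max(gains, key=gains.get)

for name, gain in gains.items():
    print(f"{name:5s} {gain:+.4f}")
print("largest OA gain:", best)
```

Every gain is positive, and the SD feature shows by far the largest one (about 0.07 in OA), whereas the MI feature changes by less than 0.001.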

Table 7 shows that the image gap inpainting improves the classification accuracy in all the comparison pairs, especially the SD pair. In Figure 8, the classification omission, agreement, and commission (Li et al., 2014) are shown as the sub-bars for each category in a group, and the number in each sub-bar denotes the associated proportion. Overall, the classification performance of each category is improved by the image gap inpainting. Specifically, comparing VIS-VS with In-VIS-VS, it can be seen that the omission decreases for the vegetation, concrete roof, and red roof classes, and the agreement for tree pixels increases. Similar observations can be made for the commission, which further confirms the effectiveness of the simple inpainting step.
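The omission, agreement, and commission proportions plotted as sub-bars can be derived from a confusion matrix. The sketch below is one plausible reading, not the definition used by Li et al. (2014): it assumes that, for each class, the three counts are normalized by their sum so that the sub-bars of a class add up to one. The function name and the example matrix are illustrative.

```python
import numpy as np

def omission_agreement_commission(cm):
    """Per-class proportions of omission, agreement, and commission.

    Assumed layout: cm[i, j] counts pixels of reference class i that were
    labelled as class j. The normalization (each row of the result sums
    to 1) is an assumption made for this sketch.
    """
    cm = np.asarray(cm, dtype=float)
    agreement = np.diag(cm)                    # correctly labelled pixels
    omission = cm.sum(axis=1) - agreement      # reference pixels of the class that were missed
    commission = cm.sum(axis=0) - agreement    # pixels wrongly assigned to the class
    total = omission + agreement + commission
    return np.stack([omission, agreement, commission], axis=1) / total[:, None]

# Made-up three-class matrix, for illustration only
example_cm = [[50, 2, 3],
              [5, 40, 5],
              [2, 3, 45]]
proportions = omission_agreement_commission(example_cm)
for k, (om, ag, co) in enumerate(proportions):
    print(f"class {k}: omission={om:.3f} agreement={ag:.3f} commission={co:.3f}")
```

Under this normalization, a smaller omission or commission share for a class necessarily means a larger agreement share, which is how the bars for the two settings can be compared class by class.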

Discriminative Ability Analysis for Each Class

To illustrate the discriminability between the land-use and land-cover types, the classification omission, agreement, and commission for the global test set are shown as the sub-bars for each class in a group in Figure 9. It can first be seen that the road discriminability of the TI-HSI data is superior to that of the VIS data, although the spatial features are represented in the VIS data. It should be mentioned that the proposed OOIFA approach can achieve the goal of road extraction, and can also alleviate the omission error of the other classes. The remaining classes consist of bare soil, vegetation, trees, and buildings (i.e., red roof, concrete roof, and gray roof). For these classes, the spatial description is believed to be more useful. For the first three class types in Figure 9 (i.e., bare soil, vegetation, and concrete roof), the contextual feature appears to be superior, as each of these classes has a specific contextual pattern, which can be seen in Figure 1a. It can also be observed that the VS approach maintains the superiority of the contextual description when conducting the classification task. For the three building (roof) classes in Figure 9, a single feature cannot describe the discriminative characteristics satisfactorily, and the VS approach utilizes the complementary spatial descriptions to improve the classification accuracy.

Conclusions

The study of the newly released multi-sensor, multi-spectral-spatial resolution, and multi-swath width TI-HSI and VIS datasets is a challenging topic, and this paper presents a multi-level fusion approach for discriminative information mining to achieve urban land-use and land-cover classification. The specific strengths of the TI-HSI and VIS datasets are integrated to improve the classification accuracy, which was confirmed by a quantitative assessment. In particular, a novel image gap inpainting method for the VIS data, guided by the TI-HSI data, is applied to deal with the swath width inconsistency and to facilitate accurate spatial feature extraction, thereby improving the overall classification accuracy. In summary, it is suggested that utilizing the TI-HSI data together with the VIS data for urban surface exploitation is both promising and meaningful.

Acknowledgments

The authors would like to thank Telops, Inc. (Canada) for providing the data used in this study, and the IEEE GRSS Image Analysis and Data Fusion Technical Committee for organizing the 2014 Data Fusion Contest. Thanks are also due to the handling editor and the anonymous reviewers for their insightful and constructive comments. The authors would also like to acknowledge the support of the National Basic Research Program of China (973 Program) under Grant 2011CB707105, the National Natural Science Foundation of China under Grants 41571362 and 41431175, and the Key Laboratory of Agri-informatics, Ministry of Agriculture, P.R. China.

References

Boser, B.E., I.M. Guyon, and V.N. Vapnik, 1992. A training algorithm for optimal margin classifiers, Proceedings of the Fifth Annual Workshop on Computational Learning Theory, 1992, pp. 144–152.

Comaniciu, D., and P. Meer, 2002. Mean shift: A robust approach toward feature space analysis, IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(5):603–619.

Congalton, R., 1991. A review of assessing the accuracy of classifications of remotely sensed data, Remote Sensing of Environment, 37(1):35–46.

Dalla Mura, M., J.A. Benediktsson, B. Waske, and L. Bruzzone, 2010. Morphological attribute profiles for the analysis of very high resolution images, IEEE Transactions on Geoscience and Remote Sensing, 48(10):3747–3762.

Fan, R.-E., K.-W. Chang, C.-J. Hsieh, X.-R. Wang, and C.-J. Lin, 2008. LIBLINEAR: A library for large linear classification, The Journal of Machine Learning Research, 9:1871–1874.

Foody, G.M., 2004. Thematic map comparison: Evaluating the statistical significance of differences in classification accuracy, Photogrammetric Engineering & Remote Sensing, 70(5):627–634.

Ghamisi, P., M. Dalla Mura, and J.A. Benediktsson, 2015. A survey on spectral-spatial classification techniques based on attribute profiles, IEEE Transactions on Geoscience and Remote Sensing, 53(5):2335–2353.

Guo, M., H. Zhang, J. Li, L. Zhang, and H. Shen, 2014. An online coupled dictionary learning approach for remote sensing image fusion, IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 7(4):1284–1294.

Hay, G.J., and T. Blaschke, 2010. Special issue: Geographic object-based image analysis (GEOBIA), Photogrammetric Engineering & Remote Sensing, 76(2):121–122.

Huang, X., and L. Zhang, 2011. A multilevel decision fusion approach for urban mapping using very high-resolution multi/hyperspectral imagery, International Journal of Remote Sensing, 33(11):3354–3372.

Huang, X., and L. Zhang, 2013. An SVM ensemble approach combining spectral, structural, and semantic features for the classification of high-resolution remotely sensed imagery, IEEE Transactions on Geoscience and Remote Sensing, 51(1):257–272.

December 2015

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING