conflicting activities were detected by our GP regression models, as previously discussed. If the observed value (blue bar) of an activity exceeds its predicted value (green curve and circle) by more than 1.96σ (red curve and cross), it is judged to be an activity in conflict with the others. The abnormally acting agents are marked by red boxes and are analyzed in detail below (all related atomic activities can be found in Figure 6).
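The conflict test above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation. It assumes a zero-mean GP with a squared-exponential (RBF) kernel that predicts one activity's value from the values of the other activities in the clip. The kernel hyperparameters, `noise`, the toy data, and the helper names (`gp_predict`, `is_conflicting`) are all hypothetical. An activity is flagged when its observed value exceeds the predictive mean by more than 1.96 predictive standard deviations.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between the rows of a and b."""
    sq_dist = (np.sum(a**2, axis=1)[:, None]
               + np.sum(b**2, axis=1)[None, :]
               - 2.0 * a @ b.T)
    return variance * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gp_predict(x_train, y_train, x_test, noise=1e-2):
    """Zero-mean GP regression: predictive mean and std of noisy observations."""
    k = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_s = rbf_kernel(x_train, x_test)
    chol = np.linalg.cholesky(k)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_s.T @ alpha
    v = np.linalg.solve(chol, k_s)
    # Prior variance minus explained variance, plus observation noise.
    var = np.diag(rbf_kernel(x_test, x_test)) - np.sum(v**2, axis=0) + noise
    return mean, np.sqrt(np.maximum(var, 0.0))


def is_conflicting(observed, mean, std, z=1.96):
    """Flag an activity whose observed value exceeds the prediction by > z * std."""
    return observed > mean + z * std


# Toy data (hypothetical): activity k is roughly half the sum of 3 other activities.
rng = np.random.default_rng(0)
x_train = rng.uniform(0.0, 1.0, size=(50, 3))
y_train = 0.5 * x_train.sum(axis=1) + 0.01 * rng.standard_normal(50)
mean, std = gp_predict(x_train, y_train, np.array([[0.5, 0.5, 0.5]]))
print(is_conflicting(0.76, mean[0], std[0]))  # value consistent with the others
print(is_conflicting(3.0, mean[0], std[0]))   # much stronger than predicted
```

The one-sided test mirrors the text, which only considers observed values larger than predicted. A two-sided variant would also compare against `mean - z * std`.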

Figure 12a shows a police car driving against the traffic flow. This counterflow raises the values of activities 3 and 17 in the former clip and of activities 3 and 19 in the latter clip.

A fire engine cuts off the vertical flow (see Figure 12b), making the activity much stronger than predicted.

In Figure 12c, the activity is abnormal because a vehicle is making an illegal U-turn.

Some falsely detected abnormalities are shown in Figure 13. The red double-decker bus is detected as a U-turn agent because of its large size. In Figure 13c, a conflicting activity is detected in the bottom right of the camera scene, because there should be no leftward traffic flow in this state. However, this alarm is a misinterpretation by our models caused by poor video clip segmentation. The clip contains a state transition and is classified as the left-and-right-turn state. Therefore, the GP regression models regarded the activity as conflicting with the others in this state and judged it to be an abnormal event. In fact, the activity occurred after the state had already changed to the leftward-flow state.

Figure 13 thus shows abnormal events falsely detected by the GP regression models, and Figure 14 shows examples of missed abnormal events.

Because our method does not rely on object detection, the categories of the activity agents are not considered. For example, a pedestrian walking along the vehicle path will not be detected as an abnormality, as shown in Figure 14a. In Figure 14b, all vehicles have already stopped to let the fire engine pass before it drives into the camera scene, so no activity conflicts with the fire engine. The scene is classified as the leftward state, and because the previous state is the left-and-right-turn state, this transition is legal. That is why this emergency went undetected. In Figure 14c, a car is making an illegal U-turn. However, its activity looks identical to the others in the leftward state, so it is not identified as an abnormal activity either. Detection- and tracking-based approaches would perform better in this case.
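The Figure 14b reasoning can be sketched as a simple decision rule. The discussion implies that a clip triggers an alarm either when its traffic-state transition is illegal or when some activity conflicts with the current state; this rule is inferred, not quoted from the method. The state names and the set of legal transitions below are hypothetical and serve only for illustration.

```python
# Hypothetical traffic states and legal transitions, for illustration only.
LEGAL_TRANSITIONS = {
    ("vertical", "vertical"),
    ("vertical", "left_and_right_turn"),
    ("left_and_right_turn", "left_and_right_turn"),
    ("left_and_right_turn", "leftward"),
    ("leftward", "leftward"),
    ("leftward", "vertical"),
}


def clip_is_abnormal(prev_state: str, state: str, conflicting: list[int]) -> bool:
    """Flag a clip if its state transition is illegal or any activity conflicts."""
    illegal_transition = (prev_state, state) not in LEGAL_TRANSITIONS
    return illegal_transition or len(conflicting) > 0


# Figure 14b: legal transition and no conflicting activity, so no alarm.
print(clip_is_abnormal("left_and_right_turn", "leftward", []))
# Figure 14c: the U-turn looks like ordinary leftward flow, so no alarm.
print(clip_is_abnormal("leftward", "leftward", []))
```

Neither check looks at what kind of agent produced the motion. This is why the pedestrian, fire-engine, and U-turn cases all pass silently.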

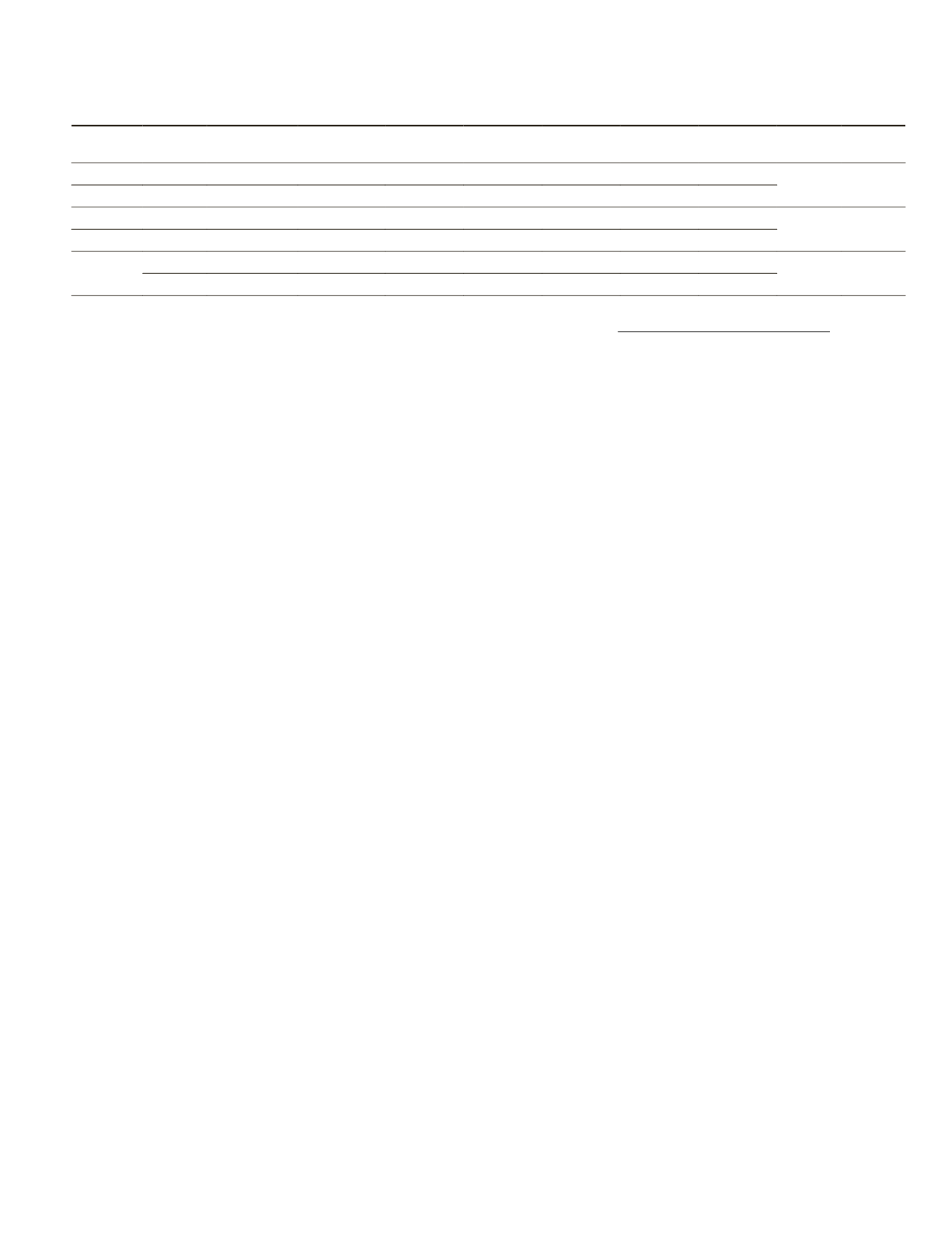

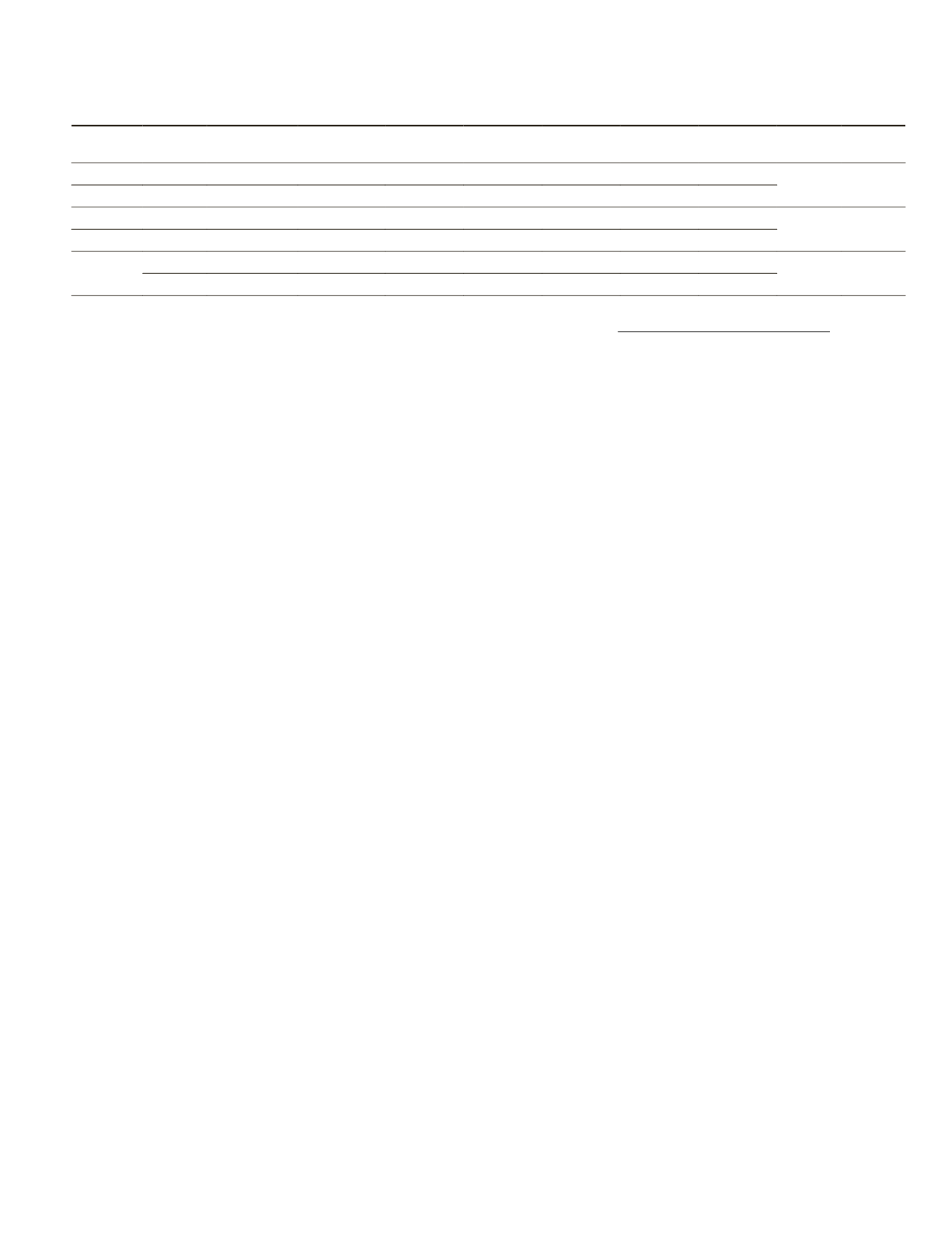

We provide a manually interpreted summary of the categories of abnormal events in each dataset in Table 4. Note that each abnormal event is counted as one event, no matter how many clips it spans. A false detection means that a clip is detected as abnormal but does not contain any abnormal event of interest. The overall false positive rate is defined as:

$$
\mathrm{FPR} = \frac{\text{Number of falsely detected clips}}{\text{Number of test clips}} \tag{35}
$$
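Equation (35) and the overall TPR in Table 4 can be checked with a short Python sketch. The FPR function follows Equation (35) directly. The TPR definition is an assumption, because this section does not define it. Pooling detected events over ground-truth events across categories reproduces the reported 66% for QMUL junction (25/38) and 63% for QMUL junction2 (17/27). The MIT row as extracted gives 41/62 ≈ 66.1% against the reported 65.7%, so the exact definition the authors used may differ. Function and key names are hypothetical.

```python
def false_positive_rate(n_false_clips: int, n_test_clips: int) -> float:
    """Equation (35): falsely detected clips divided by all test clips."""
    return n_false_clips / n_test_clips


def true_positive_rate(detected: dict[str, int], ground_truth: dict[str, int]) -> float:
    """Pooled TPR (assumed): detected events over ground-truth events,
    summed over all event categories present in the dataset."""
    return sum(detected[c] for c in ground_truth) / sum(ground_truth.values())


# QMUL junction counts from Table 4 (categories marked "\" would be omitted).
gt = {"jaywalking": 19, "emergency": 4, "illegal_turning": 10,
      "near_collision": 2, "strange_driving": 1, "improper_region": 2}
ours = {"jaywalking": 11, "emergency": 3, "illegal_turning": 7,
        "near_collision": 2, "strange_driving": 2, "improper_region": 0}
print(f"{true_positive_rate(ours, gt):.1%}")  # 65.8%, reported as 66%
```

Computing the FPR also requires the number of test clips per dataset, which this section does not report.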

From the summary of experimental results, we can see that our method successfully detected most of the abnormal traffic events while maintaining low overall false positive rates on the three benchmark datasets. However, it appears weak at detecting “improper region” events because the proposed method does not rely on object detection. In other words, the anomaly alarm is triggered by the abnormal motion of an agent in a specific context rather than by the category of the agent. A concrete example is given in Figure 14a. Moreover, in the experiments we found that, apart from the computational bottleneck of optical flow, our trained model is able to run in real time.

Conclusions

In this paper, a novel unsupervised learning framework has been proposed to model activities and interactions, recognize global interactions, and identify abnormal events in crowded and complicated traffic scenes. By combining the advantages of generative models (HDP models) and discriminative models (GP models), the formulated approach provides an effective solution to the problems of high-level video event recognition and abnormal event detection.

First, owing to its computational efficiency and comparable reliability on far-field surveillance data, quantized optical flow is adopted in this work as the low-level motion feature. Then, a nonparametric generative HDP model is used to analyze the input video and learn the main activities and interactions in an unsupervised way. Next, the learned activities and interactions are represented as combinations of local motions and combinations of activities, respectively. Finally, a GP model is trained for each activity and interaction on this representation for classification and anomaly detection. The experimental results demonstrate that the approach outperforms other popular approaches in both classification accuracy and computational efficiency. In particular, the improved GP classifier is capable of correcting clips misclassified by the original GP classifier.
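The quantized optical-flow feature mentioned above can be sketched as a bag of visual words. This minimal NumPy illustration does not reproduce the authors' exact settings. It divides the frame into square cells, assigns each sufficiently moving pixel to its cell and to one of `n_dirs` direction bins, and counts the resulting words. The cell size (10 px), four directions, and magnitude threshold are hypothetical values. The dense H × W × 2 flow field could come from any optical-flow routine, for example OpenCV's `cv2.calcOpticalFlowFarneback`.

```python
import numpy as np


def quantize_flow(flow, cell=10, n_dirs=4, min_mag=1.0):
    """Turn a dense flow field (H x W x 2, dx/dy in pixels) into word counts.

    A word is (cell_row, cell_col, direction_bin), flattened to one index.
    Pixels moving slower than min_mag are treated as static and ignored.
    """
    rows, cols = flow.shape[0] // cell, flow.shape[1] // cell
    flow = flow[: rows * cell, : cols * cell]  # drop partial border cells
    dx, dy = flow[..., 0], flow[..., 1]
    magnitude = np.hypot(dx, dy)
    angle = np.mod(np.arctan2(dy, dx), 2 * np.pi)  # image coords: +dy points down
    bin_width = 2 * np.pi / n_dirs
    # Offset by half a bin so that bin 0 is centred on rightward motion.
    direction = np.floor((angle + bin_width / 2) / bin_width).astype(int) % n_dirs
    ys, xs = np.nonzero(magnitude >= min_mag)
    words = ((ys // cell) * cols + xs // cell) * n_dirs + direction[ys, xs]
    return np.bincount(words, minlength=rows * cols * n_dirs)


# Toy example: a 10 x 10 patch in the top-middle cell moving right by 2 px.
flow = np.zeros((20, 30, 2))
flow[0:10, 10:20, 0] = 2.0
counts = quantize_flow(flow)
print(counts.shape, np.nonzero(counts)[0], counts.sum())
```

Summing these counts over all frames of a clip gives one bag-of-words document per clip. This is the kind of discrete input a topic model such as HDP consumes.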

There are many exciting avenues for future research. First, it would be interesting to incorporate segmentation methods [28] into our proposed framework. Second, we will test the proposed algorithm on high-resolution remote sensing images, where the visual features are clear and informative [29], [30]. Third, we would also like to compare the performance of our model with that of the recent CNN model [31]. Finally, the current model takes into account only simple temporal dependencies within a clip when detecting conflicting activities. This could lead to poor abnormality detection in scenes where the traffic state is ambiguous because there are no traffic lights. One possible solution is to use additional GIS data to enhance classification and anomaly detection.

Table 4. Summary of discovered abnormal events in different datasets. Overall true positive and false positive rates are also given. The “\” symbol indicates that there is no such event in the dataset. “GT”, “TPR”, and “FPR” denote ground truth, true positive rate, and false positive rate, respectively.

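| Dataset | Results | Jaywalking | Emergency | Illegal turning | Near collision | Strange driving | Improper region | False detection | Overall TPR | Overall FPR |
|---|---|---|---|---|---|---|---|---|---|---|
| QMUL junction | GT | 19 | 4 | 10 | 2 | 1 | 2 | \ | | |
| | Ours | 11 | 3 | 7 | 2 | 2 | 0 | 18 | 66% | 2.6% |
| QMUL junction2 | GT | 21 | \ | / | 2 | 4 | \ | \ | | |
| | Ours | 14 | \ | / | 2 | 1 | \ | 7 | 63% | 2.1% |
| MIT | GT | 14 | \ | 34 | \ | 1 | 13 | \ | | |
| | Ours | 7 | \ | 28 | \ | 1 | 5 | 43 | 65.7% | 2.9% |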

PHOTOGRAMMETRIC ENGINEERING & REMOTE SENSING

April 2018

213